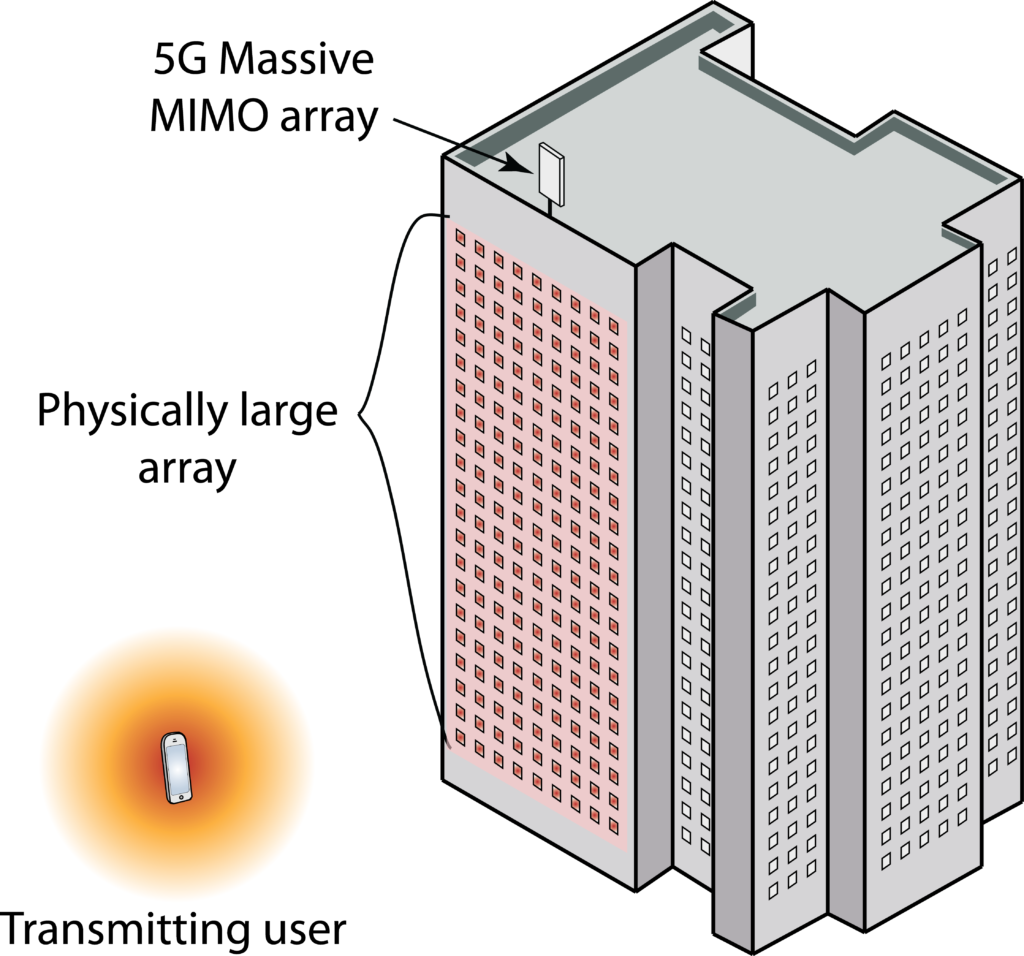

I have written several posts about Massive MIMO field trials this year. A question that I often get in the comment field is: Has the industry built “real” reciprocity-based Massive MIMO systems, similar to what is described in my textbook, or is something different under the hood? My answer used to be “I don’t know”, since the press releases do not provide such technical details.

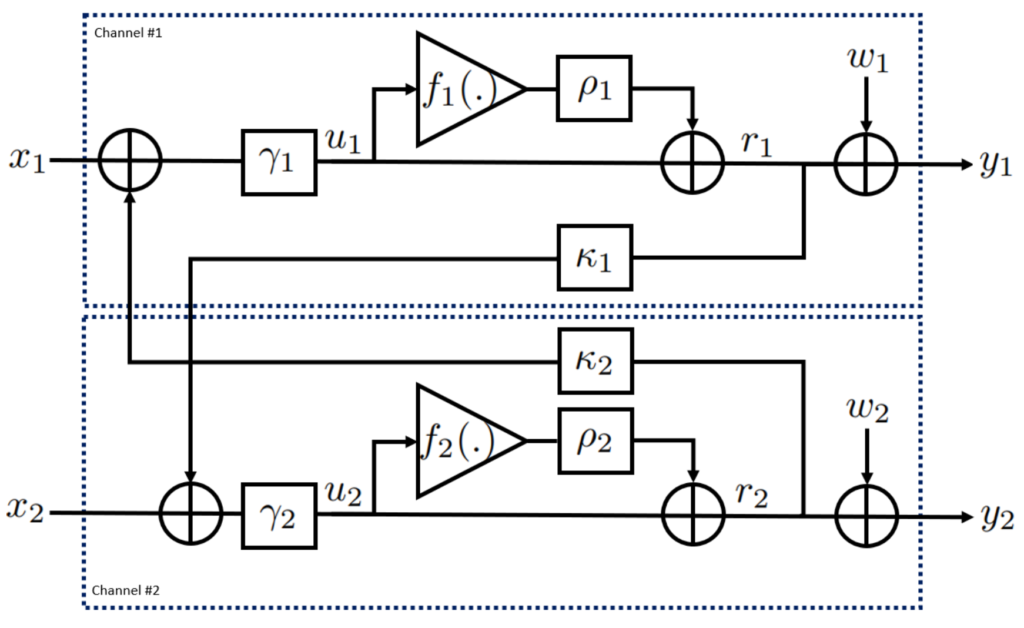

The 5G standard supports many different modes of operation. When it comes to spatial multiplexing of users in the downlink, the way the multi-user beamforming is configured is critical for controlling the inter-user interference. There are two main ways of doing that.

The first option is to let the users transmit pilot signals in the uplink and exploit the reciprocity between uplink and downlink to identify good downlink beams. This is the preferred operation from a theoretical perspective; if the base station has 64 transceivers, a single uplink pilot per user is enough to estimate that user's entire 64-dimensional channel. In 5G, the pilot signals that can be used for this purpose are called Sounding Reference Signals (SRS). The base station uses the uplink pilots from multiple users to select the downlink beamforming. This is the option that most closely resembles what textbooks on Massive MIMO describe as the canonical form of the technology.
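The reciprocity-based procedure can be illustrated with a small simulation. This is a minimal textbook-style sketch, not Ericsson's implementation: it assumes i.i.d. Rayleigh fading, single-antenna users, mutually orthogonal pilots taken from a DFT matrix, least-squares (LS) channel estimation, and zero-forcing (ZF) precoding. The function names and parameter values are hypothetical. It shows that each user sends one pilot sequence, whose length grows with the number of users rather than the number of antennas, and the base station still obtains all 64 channel coefficients per user, which it can then use to cancel the inter-user interference.

```python
import numpy as np

def srs_channel_estimates(M=64, K=8, snr_ul=10.0, seed=0):
    """LS estimation of K users' M-dimensional channels from orthogonal uplink pilots.

    Assumptions (hypothetical, not from the trial): i.i.d. Rayleigh fading,
    single-antenna users, pilot length K, DFT-based orthogonal pilots.
    """
    rng = np.random.default_rng(seed)
    # Column k is user k's channel to all M base-station antennas
    H = (rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))) / np.sqrt(2)
    # Row k is user k's pilot sequence; P @ P^H = K * I
    P = np.fft.fft(np.eye(K))
    N = (rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))) / np.sqrt(2)
    # All users transmit simultaneously; every antenna observes the same K pilot symbols
    Y = np.sqrt(snr_ul) * H @ P + N
    # Correlating with each pilot separates the users and yields all M entries at once
    H_hat = Y @ P.conj().T / (np.sqrt(snr_ul) * K)
    return H, H_hat

def zf_precoder(H_hat):
    """Zero-forcing precoder with unit-norm columns (downlink channel of user k is h_k^H)."""
    W = H_hat @ np.linalg.inv(H_hat.conj().T @ H_hat)
    return W / np.linalg.norm(W, axis=0, keepdims=True)

H, H_hat = srs_channel_estimates()
W = zf_precoder(H_hat)
G = np.abs(H.conj().T @ W) ** 2          # G[k, i]: power of stream i received by user k
off = ~np.eye(G.shape[0], dtype=bool)    # mask selecting inter-user (leakage) terms
nmse = np.linalg.norm(H_hat - H) ** 2 / np.linalg.norm(H) ** 2
print(f"NMSE of channel estimate: {nmse:.4f}")
print(f"signal-to-leakage ratio: {np.mean(np.diag(G)) / np.mean(G[off]):.0f}")
```

With orthogonal pilots, the estimation error per coefficient shrinks as the pilot energy grows, and the residual leakage after ZF comes only from that estimation error.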

The second option is to let the base station transmit a set of downlink signals using different beams. The user device then reports back some measurement values describing how good the different downlink beams were. In 5G, the corresponding downlink signals are called Channel State Information Reference Signals (CSI-RS). The base station uses the feedback to select the downlink beamforming. The drawback of this approach is that 64 downlink signals must be transmitted to explore all 64 dimensions, so one might have to neglect many dimensions to limit the signaling overhead. Moreover, the resolution of the users' feedback is limited.
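To make the trade-off concrete, here is a deliberately simplified sketch. Real 5G feedback uses standardized codebooks and precoding matrix indicators, which are not modeled here; instead, the base station sweeps unit-norm DFT beams and the user reports only the index of the strongest one. The uniform linear array with half-wavelength spacing, the three-path channel, the angle range, and the beam counts are all assumptions, and the function names are hypothetical. The sketch compares the captured beamforming gain with the gain available when the full channel is known, as under reciprocity.

```python
import numpy as np

def dft_codebook(M, n_beams):
    """n_beams unit-norm DFT beams (simplified stand-in for a real CSI-RS codebook).

    Assumes n_beams divides M; keeps every (M // n_beams)-th column of the DFT.
    """
    F = np.fft.fft(np.eye(M)) / np.sqrt(M)   # unitary M x M DFT matrix
    return F[:, np.arange(0, M, M // n_beams)]

def ula_channel(M, n_paths, rng):
    """Multipath channel for a half-wavelength uniform linear array (assumed model)."""
    angles = rng.uniform(-np.pi / 3, np.pi / 3, n_paths)
    gains = (rng.standard_normal(n_paths) + 1j * rng.standard_normal(n_paths)) / np.sqrt(2 * n_paths)
    m = np.arange(M)[:, None]
    return np.exp(1j * np.pi * m * np.sin(angles)) @ gains

def best_beam_report(h, codebook):
    """User side: measure the power of every swept beam and report only the best index."""
    powers = np.abs(codebook.conj().T @ h) ** 2
    k = int(np.argmax(powers))
    return k, powers[k]

rng = np.random.default_rng(2)
M = 64
h = ula_channel(M, n_paths=3, rng=rng)
full_gain = np.linalg.norm(h) ** 2   # gain of matched filtering with the full channel
results = {}
for n_beams in (64, 16):             # 16 beams: fewer CSI-RS signals, less overhead
    k, gain = best_beam_report(h, dft_codebook(M, n_beams))
    results[n_beams] = gain / full_gain
    print(f"{n_beams} beams swept: report beam {k}, {gain / full_gain:.0%} of full-CSI gain")
```

Because the 64 DFT beams form an orthonormal basis, their received powers sum to the full channel gain, so reporting a single beam index can only capture part of it, and sweeping fewer beams can only lower the captured fraction.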

In practice, the CSI-RS operation might be easier to implement, but the lower resolution of the beamforming selection increases the interference between the users and ultimately limits how many users, and layers per user, can be spatially multiplexed to increase the throughput.
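The interference argument can be checked with another hedged sketch under the same assumptions (hypothetical half-wavelength array, three-path channels, DFT beams standing in for real CSI-RS codebooks, hypothetical function names). Each of eight users is either served on its own strongest DFT beam or by ZF computed from the full channel matrix. The ZF case assumes perfect channel knowledge, which is an idealization of what reciprocity provides.

```python
import numpy as np

def ula_channels(M, K, n_paths, rng):
    """K multipath channels for a half-wavelength ULA (assumed model); column k is user k."""
    m = np.arange(M)[:, None]
    H = np.empty((M, K), dtype=complex)
    for k in range(K):
        angles = rng.uniform(-np.pi / 3, np.pi / 3, n_paths)
        gains = (rng.standard_normal(n_paths) + 1j * rng.standard_normal(n_paths)) / np.sqrt(2 * n_paths)
        H[:, k] = np.exp(1j * np.pi * m * np.sin(angles)) @ gains
    return H

def gain_matrix(H, W):
    """G[k, i] = |h_k^H w_i|^2: power of stream i received by user k."""
    return np.abs(H.conj().T @ W) ** 2

def sinr(G, noise=1.0):
    """Per-user SINR with unit power per stream."""
    signal = np.diag(G)
    return signal / (G.sum(axis=1) - signal + noise)

rng = np.random.default_rng(1)
M, K = 64, 8
H = ula_channels(M, K, n_paths=3, rng=rng)
off = ~np.eye(K, dtype=bool)

# CSI-RS style (simplified): each user is served on its strongest beam of a 64-beam DFT sweep
F = np.fft.fft(np.eye(M)) / np.sqrt(M)
best = np.argmax(np.abs(H.conj().T @ F) ** 2, axis=1)
W_beam = F[:, best]

# Reciprocity style (idealized): zero-forcing on the full channel matrix
W_zf = H @ np.linalg.inv(H.conj().T @ H)
W_zf /= np.linalg.norm(W_zf, axis=0, keepdims=True)

for name, W in (("best DFT beam", W_beam), ("zero-forcing", W_zf)):
    G = gain_matrix(H, W)
    rate = np.sum(np.log2(1 + sinr(G)))
    print(f"{name}: inter-user leakage {G[off].sum():.2e}, sum rate {rate:.1f} bit/s/Hz")
```

With beam selection, every user's beam leaks into the other users' channels (and two users may even pick the same beam), whereas ZF places nulls toward the other users, so the leakage vanishes up to numerical precision.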

New field trial based on SRS

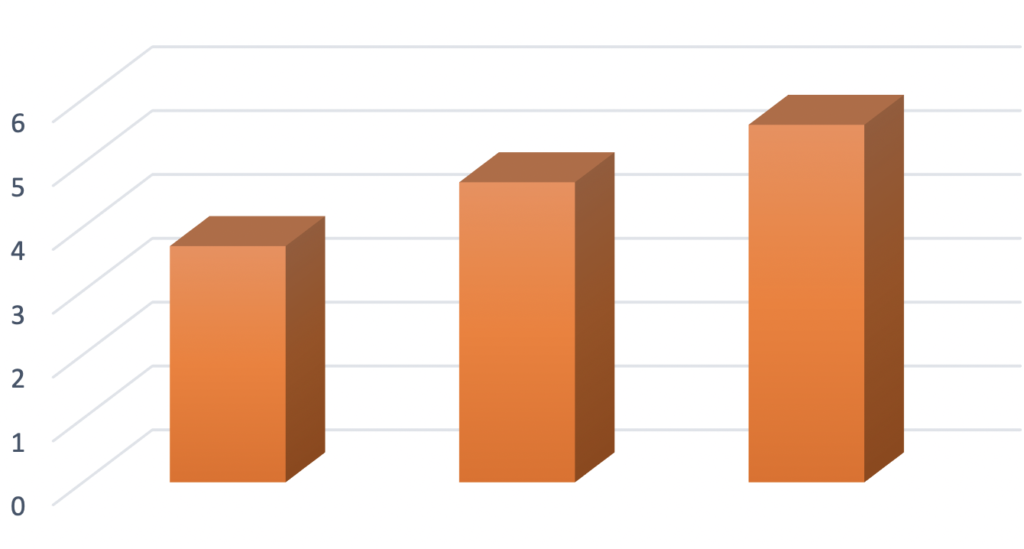

The Signal Research Group has carried out a new field trial in Plano, Texas. What makes it unique is that they confirm that the SRS operation was used. They utilized hardware and software from Ericsson, Accuver Americas, Rohde & Schwarz, and Gemtek. A 100 MHz channel bandwidth in the 3.5 GHz band was used, the downlink power was 120 W, and a peak throughput of 5.45 Gbps was achieved. Eight user devices received two layers each; thus, the equipment spatially multiplexed 16 layers. The setup was a suburban outdoor cell with inter-cell interference and a one-kilometer range. The average throughput per device was around 650 Mbps and was not much affected when the number of users increased from one to eight, which demonstrates that the beamforming could effectively deal with the interference.
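A quick back-of-the-envelope check of the reported numbers can be sketched as follows. It assumes that the 5.45 Gbps peak was reached with all 16 layers active, and it divides throughput by the full 100 MHz without correcting for the time spent on uplink or other overheads, so the spectral efficiencies are rough aggregate figures rather than values from the report.

```python
# Numbers reported for the Plano trial
bandwidth_hz = 100e6
peak_throughput_bps = 5.45e9
avg_throughput_per_device_bps = 650e6
devices, layers_per_device = 8, 2

layers = devices * layers_per_device                       # spatially multiplexed layers
aggregate_avg_bps = devices * avg_throughput_per_device_bps
se_total = peak_throughput_bps / bandwidth_hz              # bit/s/Hz over the whole cell
se_per_layer = se_total / layers                           # assumes all 16 layers active at peak

print(layers)                              # 16
print(f"{aggregate_avg_bps / 1e9:.2f}")    # 5.20 (Gbps, sum of per-device averages)
print(f"{se_total:.1f}")                   # 54.5
print(f"{se_per_layer:.2f}")               # 3.41
```

The sum of the per-device averages (about 5.2 Gbps) is close to the reported peak, which is consistent with the observation that the per-device throughput was not much affected by adding users.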

It is great to see that “real” reciprocity-based Massive MIMO delivers such strong performance in practice. In the report describing the measurements, the Signal Research Group states that not all 5G devices support the SRS-based mode, so they had to look for the right equipment to carry out the experiments. Moreover, they point out that:

“Operators with mid-band 5G NR spectrum (2.5 GHz and higher) will start deploying MU-MIMO, based on CSI-RS, later this year to increase spectral efficiency as their networks become loaded. The SRS variant of MU-MIMO will follow in the next six to twelve months, depending on market requirements and vendor support.”

The following video describes the measurements in further detail: