Conventional mobile networks (a.k.a. cellular wireless networks) are based on cellular topologies. With cellular topologies, a land area is divided into cells. Each cell is served by one base station. An interesting question is: should future mobile networks continue to have cells? My quick answer is no: cell-free networks should be the way to go in the future!

Future wireless networks will have to manage billions of devices at the same time, each of which needs high throughput to support applications such as voice, real-time video, and high-quality movies. Cellular networks cannot handle such a huge number of connections, since user terminals at the cell boundary suffer from very high interference and hence perform badly. Furthermore, conventional cellular systems are designed mainly for human users. In future wireless networks, machine-type communications such as the Internet of Things, Internet of Everything, Smart X, etc. are expected to play an important role. The main challenge of machine-type communications is scalable and efficient connectivity for billions of devices. Centralized technology with cellular topologies does not seem to work for such scenarios, since each cell can cover only a limited number of user terminals. So why not cell-free structures with decentralized technology? Of course, to serve many user terminals and to simplify the signal processing in a distributed manner, massive MIMO technology should be included. The combination of a cell-free structure and massive MIMO technology yields a new concept: Cell-Free Massive MIMO.

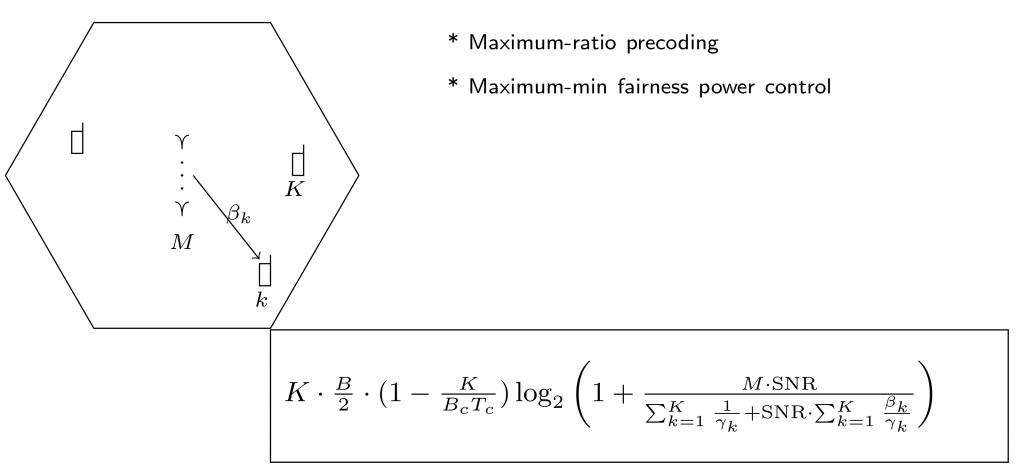

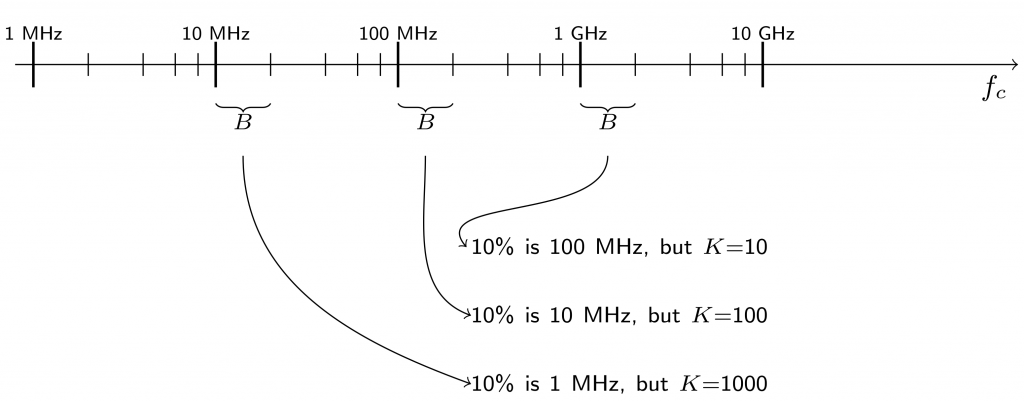

What is Cell-Free Massive MIMO? It is a system in which a massive number of access points, distributed over a large area, coherently serve a massive number of user terminals in the same time/frequency band. Cell-Free Massive MIMO focuses on cellular frequencies; however, millimeter-wave bands can be used in combination with the cellular frequency bands. There is no concept of cells or cell boundaries here. Of course, specific signal processing is used; see [1] for more details. Cell-Free Massive MIMO is a new concept: a practical, useful, and scalable version of network MIMO (or cooperative multipoint joint processing) [2, 3]. To some extent, Cell-Free Massive MIMO uses Massive MIMO technology, which is based on the favorable propagation and channel hardening properties.
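To make "coherently serve" a bit more concrete, here is a minimal Python sketch for a single user terminal. It assumes conjugate (matched-filter) beamforming with perfectly known channels and one shared power budget. Those are illustrative simplifications and not the exact processing of [1]. The function names, the value of `M`, and the random channel model are all hypothetical.

```python
import math
import random

def received_power(channels, weights):
    """Power of the superposed signal sum_m h_m * w_m at a single-antenna user."""
    return abs(sum(h * w for h, w in zip(channels, weights))) ** 2

def conjugate_weights(channels, total_power=1.0):
    """Conjugate beamforming weights, scaled so that sum_m |w_m|^2 = total_power.

    Assumption: every access point knows its own channel perfectly.
    """
    norm = math.sqrt(sum(abs(h) ** 2 for h in channels))
    return [math.sqrt(total_power) * h.conjugate() / norm for h in channels]

def equal_weights(channels, total_power=1.0):
    """Same total power, split equally, with no phase alignment."""
    amplitude = math.sqrt(total_power / len(channels))
    return [complex(amplitude, 0.0) for _ in channels]

# Hypothetical setup: M access points, each with an independent
# complex Gaussian channel (unit average power) to one user terminal.
rng = random.Random(0)
M = 64
sigma = math.sqrt(0.5)
channels = [complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for _ in range(M)]

coherent = received_power(channels, conjugate_weights(channels))
incoherent = received_power(channels, equal_weights(channels))

print("coherent / incoherent power ratio:", coherent / incoherent)
```

With phase-aligned weights the contributions of all access points add up in amplitude, so the received power equals the sum of the channel gains. Without alignment the contributions partly cancel. By the Cauchy-Schwarz inequality the aligned case is never worse for the same total transmit power.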

Cell-Free Massive MIMO is different from distributed Massive MIMO [4]. Both systems use many service antennas in a distributed way to serve many user terminals, but they are not entirely the same. With distributed Massive MIMO, the base station antennas are distributed within each cell, and these antennas only serve user terminals within that cell. By contrast, in Cell-Free Massive MIMO there are no cells. All service antennas coherently serve all user terminals. The figure below compares the structures of Cell-Free Massive MIMO and distributed Massive MIMO.

*Figure panels: Distributed Massive MIMO | Cell-Free Massive MIMO*
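The structural difference between the two systems can also be sketched as a serving map, that is, which antennas serve which user terminal. The Python toy example below assumes a hypothetical layout with two cells. The cell labels and the helper name `serving_map` are invented for illustration only.

```python
def serving_map(antenna_cells, user_cells, cell_free):
    """Return {user index: list of antenna indices that serve that user}.

    antenna_cells[a] and user_cells[u] are cell labels. In cell-free mode
    the labels are ignored, because there are no cells.
    """
    serving = {}
    for u, u_cell in enumerate(user_cells):
        serving[u] = [
            a for a, a_cell in enumerate(antenna_cells)
            if cell_free or a_cell == u_cell
        ]
    return serving

# Hypothetical layout: six distributed antennas and three user terminals,
# spread over two cells labelled 0 and 1.
antenna_cells = [0, 0, 0, 1, 1, 1]
user_cells = [0, 1, 1]

distributed = serving_map(antenna_cells, user_cells, cell_free=False)
cell_free = serving_map(antenna_cells, user_cells, cell_free=True)

for u in range(len(user_cells)):
    print(f"user {u}: distributed -> {distributed[u]}, cell-free -> {cell_free[u]}")
```

In the distributed case each user is served only by the antennas of its own cell, while in the cell-free case every antenna serves every user.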

[1] H. Q. Ngo, A. Ashikhmin, H. Yang, E. G. Larsson, and T. L. Marzetta, “Cell-Free Massive MIMO versus Small Cells,” IEEE Trans. Wireless Commun., submitted for publication, 2016. Available: https://arxiv.org/abs/1602.08232

[2] G. Foschini, K. Karakayali, and R. A. Valenzuela, “Coordinating multiple antenna cellular networks to achieve enormous spectral efficiency,” IEE Proc. Commun., vol. 152, pp. 548–555, Aug. 2006.

[3] E. Björnson, R. Zakhour, D. Gesbert, and B. Ottersten, “Cooperative Multicell Precoding: Rate Region Characterization and Distributed Strategies with Instantaneous and Statistical CSI,” IEEE Trans. Signal Process., vol. 58, no. 8, pp. 4298–4310, Aug. 2010.

[4] K. T. Truong and R. W. Heath Jr., “The viability of distributed antennas for massive MIMO systems,” in Proc. Asilomar CSSC, 2013, pp. 1318–1323.
