There are basically two approaches to achieve high data rates in 5G: One can make use of huge bandwidths in mmWave bands or use Massive MIMO to spatially multiplex many users in the conventional sub-6 GHz bands.

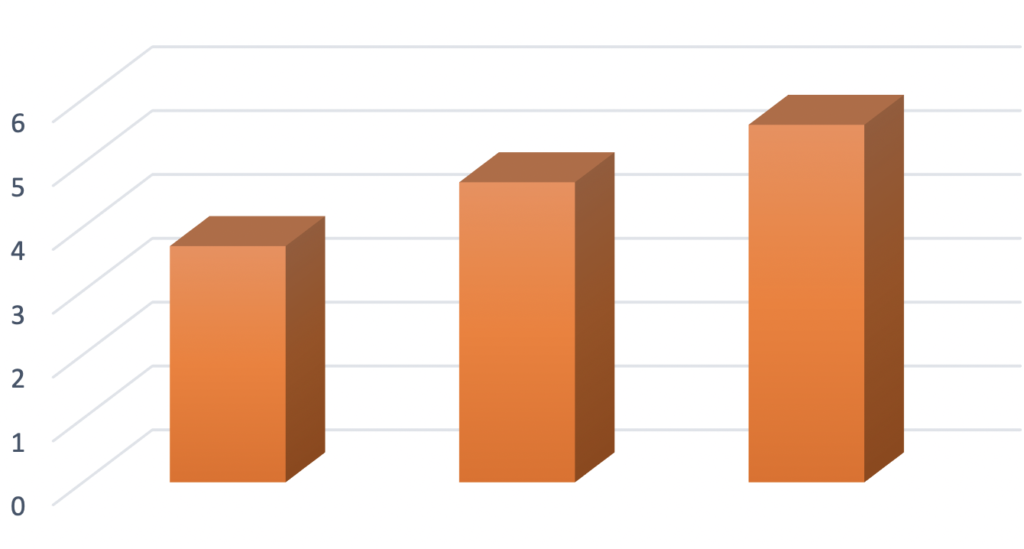

As I wrote back in June, I am more impressed by the latter approach since it is more spectrally efficient and requires more technically advanced signal processing. I was comparing the 4.7 Gbps that Nokia had demonstrated over an 840 MHz mmWave band with the 3.7 Gbps that Huawei had demonstrated over 100 MHz of the sub-6 GHz spectrum. The former example achieves a spectral efficiency of 5.6 bps/Hz while the latter achieves 37 bps/Hz.

T-Mobile and Ericsson recently described a new field trial with even more impressive results. They used 100 MHz in the 2.5 GHz band and achieved 5.6 Gbps, corresponding to a spectral efficiency of 56 bps/Hz; an order of magnitude more than one can expect in mmWave bands!
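The spectral efficiencies quoted above are simply the data rate divided by the occupied bandwidth. The following minimal Python sketch reproduces them from the numbers of the three demonstrations. The dictionary labels and the helper name `spectral_efficiency` are just illustrative choices.

```python
# Spectral efficiency = data rate / bandwidth, using the figures quoted in the text.
trials = {
    "Nokia, mmWave": (4.7e9, 840e6),              # (bit/s, Hz)
    "Huawei, sub-6 GHz": (3.7e9, 100e6),
    "T-Mobile/Ericsson, 2.5 GHz": (5.6e9, 100e6),
}


def spectral_efficiency(rate_bps, bandwidth_hz):
    """Return the spectral efficiency in bit/s/Hz."""
    return rate_bps / bandwidth_hz


se = {name: spectral_efficiency(r, b) for name, (r, b) in trials.items()}
for name, value in se.items():
    print(f"{name}: {value:.1f} bps/Hz")

# How much higher the 2.5 GHz trial is than the mmWave example.
ratio = se["T-Mobile/Ericsson, 2.5 GHz"] / se["Nokia, mmWave"]
print(f"Ratio to the mmWave example: {ratio:.1f}x")
```

The ratio comes out at roughly 10, which is where the "order of magnitude" comparison comes from.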

According to the press release, the high data rate was achieved using a 64-antenna base station, similar to the product that I described earlier. Eight smartphones were served by spatial multiplexing, and each received two parallel data streams (so-called layers). Hence, each user device obtained around 700 Mbps. On average, each of the 16 layers had a spectral efficiency of 3.5 bps/Hz, so 16-QAM, which carries at most 4 bits per symbol, was probably used in the transmission.
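The per-user and per-layer numbers follow from dividing the aggregate rate by the number of users and layers. The sketch below also checks which square QAM constellation can carry 3.5 bits per symbol at all. It is only a lower bound under a simplifying assumption: channel coding and pilot/control overhead are ignored, so it says nothing definitive about the modulation the trial actually used. The helper `smallest_square_qam` is a hypothetical name introduced for illustration.

```python
import math

total_rate_bps = 5.6e9   # aggregate rate reported for the trial
bandwidth_hz = 100e6     # 100 MHz in the 2.5 GHz band
num_users = 8            # smartphones served simultaneously
layers_per_user = 2      # parallel data streams per smartphone

num_layers = num_users * layers_per_user
rate_per_user = total_rate_bps / num_users
se_per_layer = total_rate_bps / bandwidth_hz / num_layers


def smallest_square_qam(bits_per_symbol_needed):
    """Smallest square QAM order M (4, 16, 64, ...) with log2(M) >= the required
    bits per symbol. Assumption: coding rate and overhead are ignored."""
    order = 4
    while math.log2(order) < bits_per_symbol_needed:
        order *= 4
    return order


print(f"{num_layers} layers, {rate_per_user / 1e6:.0f} Mbps per user")
print(f"{se_per_layer:.2f} bps/Hz per layer -> at least "
      f"{smallest_square_qam(se_per_layer)}-QAM")
```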

I think these numbers are representative of what 5G can deliver in good coverage conditions. Hopefully, Massive MIMO-based 5G networks will soon be commercially available in your country as well.

I assume this was all downstream. I understand that upstream, especially at the cell edge, is still challenging. More computation for channel estimation will be required in the panel.

Yes, I assume that, even if the press release didn’t say it.

The uplink transmission will certainly have an SNR disadvantage since the base station can transmit at higher power than the smartphones.
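To put a rough number on the uplink SNR disadvantage, one can compare the downlink power available per user with a smartphone's maximum transmit power. This is a back-of-the-envelope sketch under stated assumptions: the 40 W base-station power is hypothetical, 23 dBm is the usual smartphone maximum, and path loss, array gain and noise power are assumed identical in both directions so that only the transmit powers differ.

```python
import math


def db(x):
    """Convert a linear power ratio to dB."""
    return 10 * math.log10(x)


def dbm_to_watt(p_dbm):
    """Convert a power in dBm to watts."""
    return 10 ** ((p_dbm - 30) / 10)


# Hypothetical values, for illustration only.
bs_total_power_w = 40.0       # assumed base-station transmit power, shared by all users
num_users = 8                 # users served in the same time-frequency resources
ue_power_w = dbm_to_watt(23)  # 23 dBm (about 0.2 W), a typical smartphone maximum

# Assumes equal path loss, array gain and noise power in uplink and downlink.
dl_power_per_user_w = bs_total_power_w / num_users
gap_db = db(dl_power_per_user_w / ue_power_w)
print(f"Uplink SNR disadvantage: about {gap_db:.1f} dB")
```

With these assumptions the gap is about 14 dB; other power budgets give other numbers, but the sign of the gap is the point.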

But the number of channel estimates and how often they need to be computed should be the same in uplink and downlink.

Hello Professor Bjornson.

What do you think are the main factors behind such an improvement in spectral efficiency in the T-Mobile trial?

I can only speculate since there are too few details about the trials. If we assume that all the trials were conducted under good SNR conditions, it is the precoding implementation and its ability to suppress interference that makes the difference in the sub-6 GHz band.

This is good news, but will spatial multiplexing work as efficiently when users are distributed such that it is not optimal to let several of them share the same time and frequency resources?

No. It will be interesting to see the average performance with real users in the network.

It seems the SINR is quite high here, for all terminals.

Do we have any more details on the experiment?

Was there any mobility? How was CSI obtained, through reciprocity (assuming it was downlink)? Interference from other cells?

I haven’t seen any such information, but Sebastian Faxér commented on LinkedIn that “Naturally, in order to get such spectral efficiency with MU-MIMO, inter-layer interference needs to be suppressed at the transmitter side. This can be achieved using various linear precoding techniques such as R-ZF, SLNR, MMSE approaches, etc. In the end these all result in similar precoder expressions. What is more important is how to design robust algorithms to handle varying degrees of CSI accuracy. But yes, linear precoding techniques achieving very precise nullforming is what is being used here to get these results.”
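To illustrate what transmitter-side interference suppression buys, here is a minimal pure-Python sketch of one of the techniques named in the quote, regularized zero-forcing (R-ZF), compared with maximum ratio transmission (MRT), which does not suppress inter-layer interference. Everything about the setup is an assumption, not a trial detail: an i.i.d. Rayleigh-fading channel, 64 antennas, 16 single-antenna layers (a simplification of eight two-antenna phones), perfect CSI, a 20 dB transmit SNR, and the common regularization choice alpha = K * noise / power.

```python
import math
import random


def randn_complex():
    """Circularly symmetric complex Gaussian sample with unit variance."""
    s = math.sqrt(0.5)
    return complex(random.gauss(0, s), random.gauss(0, s))


def hermitian(a):
    """Conjugate transpose of a list-of-lists matrix."""
    return [[a[r][c].conjugate() for r in range(len(a))] for c in range(len(a[0]))]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def inverse(a):
    """Gauss-Jordan inversion with partial pivoting (adequate for a small K x K matrix)."""
    n = len(a)
    aug = [list(row) + [complex(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def normalize(w, total_power):
    """Scale the precoder so that its total transmit power equals total_power."""
    scale = math.sqrt(total_power / sum(abs(v) ** 2 for row in w for v in row))
    return [[scale * v for v in row] for row in w]


def sinrs(h, w, noise_power):
    """Per-layer SINR for the received signal y = H W s + n."""
    g = matmul(h, w)
    out = []
    for k in range(len(g)):
        interference = sum(abs(g[k][j]) ** 2 for j in range(len(g)) if j != k)
        out.append(abs(g[k][k]) ** 2 / (interference + noise_power))
    return out


def to_db(x):
    return 10 * math.log10(x)


random.seed(1)
M, K = 64, 16                          # base-station antennas, layers (assumed)
total_power, noise_power = 1.0, 0.01   # 20 dB transmit SNR (assumed)
H = [[randn_complex() for _ in range(M)] for _ in range(K)]  # K x M downlink channel
HH = hermitian(H)                                             # M x K

W_mrt = normalize(HH, total_power)  # MRT: no interference suppression

# R-ZF: W proportional to H^H (H H^H + alpha I)^{-1}
alpha = K * noise_power / total_power
gram = matmul(H, HH)
for k in range(K):
    gram[k][k] += alpha
W_rzf = normalize(matmul(HH, inverse(gram)), total_power)

mrt = sinrs(H, W_mrt, noise_power)
rzf = sinrs(H, W_rzf, noise_power)
print(f"MRT  mean SINR: {to_db(sum(mrt) / K):.1f} dB")
print(f"R-ZF mean SINR: {to_db(sum(rzf) / K):.1f} dB")
```

Under these assumptions the MRT layers are limited by interference from the other 15 layers, while R-ZF nearly nulls that interference and its SINR is dominated by noise, which is the effect the quote describes.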

Interesting trial. Does this use Rel-16 codebook-based precoding with Type 2 CSI feedback?

I don’t know, but I hope they are not using codebooks and instead exploit SRS (sounding reference signals) and channel reciprocity to get the best out of Massive MIMO.
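The reciprocity-based alternative can be sketched as follows: each layer sends an orthogonal uplink pilot, the base station forms a least-squares estimate of the uplink channel, and, in calibrated TDD, the downlink channel is its transpose. This is a simplified illustration under assumptions: DFT rows stand in for the actual 5G NR SRS sequences, the channel is i.i.d. Rayleigh, the pilot SNR is 20 dB, and reciprocity calibration is taken as perfect.

```python
import cmath
import math
import random


def randn_complex(var=1.0):
    """Circularly symmetric complex Gaussian sample with variance var."""
    s = math.sqrt(var / 2)
    return complex(random.gauss(0, s), random.gauss(0, s))


random.seed(2)
M, K = 64, 16      # base-station antennas, pilot-transmitting layers (assumed)
tau = K            # pilot length: one orthogonal sequence per layer
noise_var = 0.01   # assumed 20 dB pilot SNR per sample

# Orthogonal pilots: rows of a tau x tau DFT matrix, so Phi Phi^H = tau * I.
phi = [[cmath.exp(-2j * math.pi * k * t / tau) for t in range(tau)] for k in range(K)]

# True uplink channel G (M x K).
G = [[randn_complex() for _ in range(K)] for _ in range(M)]

# Received pilot signal Y = G Phi + N (M x tau).
Y = [[sum(G[m][k] * phi[k][t] for k in range(K)) + randn_complex(noise_var)
      for t in range(tau)] for m in range(M)]

# Least-squares estimate: G_hat = Y Phi^H / tau.
G_hat = [[sum(Y[m][t] * phi[k][t].conjugate() for t in range(tau)) / tau
          for k in range(K)] for m in range(M)]

# Reciprocity (calibrated TDD): downlink channel estimate H_hat = G_hat^T (K x M),
# which can be fed directly into a precoder such as R-ZF.
H_hat = [[G_hat[m][k] for m in range(M)] for k in range(K)]

mse = sum(abs(G_hat[m][k] - G[m][k]) ** 2
          for m in range(M) for k in range(K)) / (M * K)
print(f"Per-coefficient MSE: {mse:.5f} (theory: noise_var / tau = {noise_var / tau:.5f})")
```

Unlike codebook feedback, the estimate here is not quantized to a predefined set of beams; its accuracy is limited only by the pilot SNR and pilot length.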