Many researchers have analyzed pilot contamination in the six years since Marzetta uncovered its importance in Massive MIMO systems. We now have a fairly good understanding of how to mitigate it. There is a plethora of approaches, many of which have complementary benefits. If pilot contamination is not mitigated, it will both reduce the array gain and create coherent interference. Some approaches mitigate the pilot interference in the channel estimation phase, while others combat the coherent interference caused by pilot contamination. In this post, I will try to categorize the approaches and point to some key references.

Interference-rejecting precoding and combining

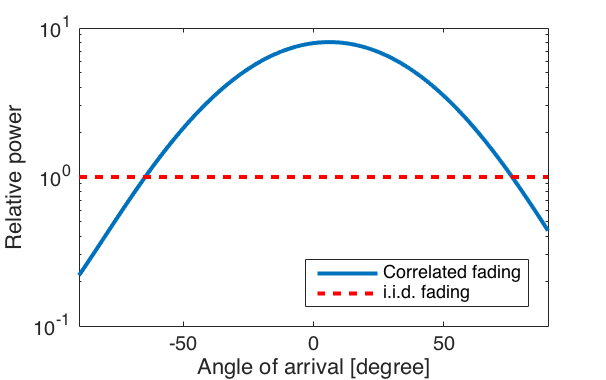

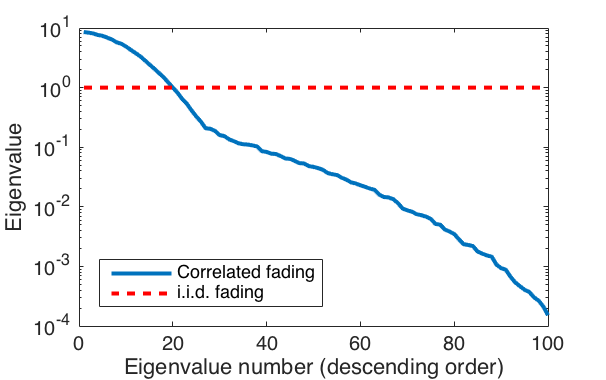

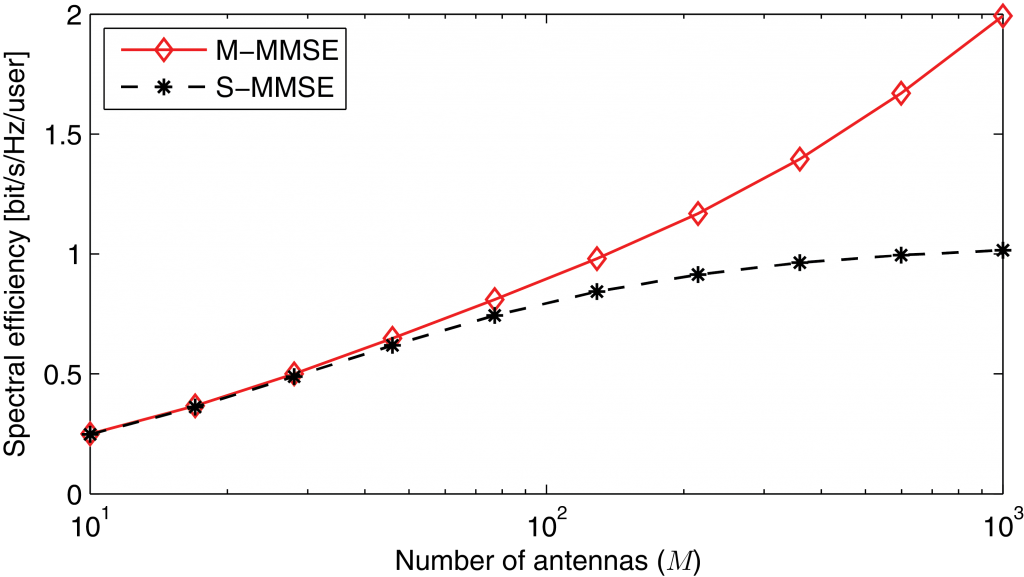

Pilot contamination makes the estimate of a desired channel correlated with the channels from pilot-sharing users in other cells. When these channel estimates are used for receive combining or transmit precoding, coherent interference typically arises. This is particularly the case if maximum ratio processing is used, because it ignores the interference. If multi-cell MMSE processing is used instead, the coherent interference is rejected in the spatial domain. In particular, recent work from Björnson et al. (see also this related paper) has shown that there is no asymptotic rate limit when using this approach, if there is just a tiny amount of spatial correlation in the channels.
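
To see why maximum ratio processing suffers from coherent interference, here is a minimal sketch. It assumes uncorrelated Rayleigh fading, one desired and one pilot-sharing user, and a least-squares estimate that is simply the sum of both channels plus noise. The function names and the values of `beta_desired`, `beta_interf`, and `noise_var` are hypothetical, chosen only for illustration. The sketch does not implement multi-cell MMSE processing.

```python
import random


def complex_gaussian(variance, rng):
    """Draw one circularly symmetric complex Gaussian sample CN(0, variance)."""
    s = (variance / 2) ** 0.5
    return complex(rng.gauss(0, s), rng.gauss(0, s))


def mr_gains(M, beta_desired=1.0, beta_interf=0.3, noise_var=0.1, seed=0):
    """Return (desired, interfering) gains |v^H h| / M for an MR combiner v
    that equals a pilot-contaminated channel estimate (illustrative model)."""
    rng = random.Random(seed)
    h_d = [complex_gaussian(beta_desired, rng) for _ in range(M)]
    h_i = [complex_gaussian(beta_interf, rng) for _ in range(M)]
    # Both users send the same pilot, so the estimate contains both channels.
    v = [a + b + complex_gaussian(noise_var, rng) for a, b in zip(h_d, h_i)]

    def normalized_gain(h):
        return abs(sum(vi.conjugate() * hi for vi, hi in zip(v, h)) / M)

    return normalized_gain(h_d), normalized_gain(h_i)


for M in (10, 100, 10000):
    desired, interf = mr_gains(M)
    print(M, round(desired, 3), round(interf, 3))
```

Under these assumptions, the normalized interfering gain settles near `beta_interf` instead of vanishing as `M` grows, which is the coherent interference described above.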

Data-aided channel estimation

Another approach is to “decontaminate” the channel estimates from pilot contamination, by using the pilot sequence and the uplink data for joint channel estimation. This has the potential of both improving the estimation quality (leading to a stronger desired signal) and reducing the coherent interference. Ideally, if the data were known, data-aided channel estimation would effectively increase the length of the pilot sequences to the length of the uplink transmission block. Since the data is in fact unknown to the receiver, semi-blind estimation techniques are needed to obtain the channel estimates. Ngo et al. and Müller et al. did early work on pilot decontamination for Massive MIMO. Recent work has proved that one can fully decontaminate the estimates as the length of the uplink block grows large, but it remains to find the most efficient semi-blind decontamination approach for practical block lengths.

Pilot assignment and dimensioning

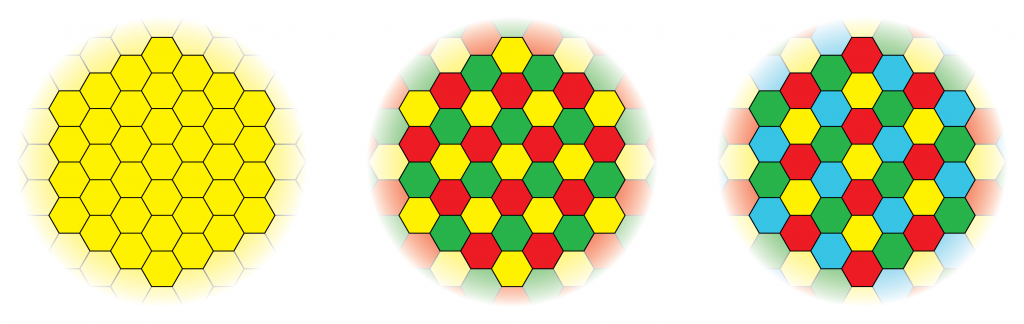

Which users share a pilot sequence makes a large difference, since users with large pathloss differences and different spatial channel correlation cause less contamination to each other. Recall that higher estimation quality both increases the gain of the desired signal and reduces the coherent interference. Increasing the number of orthogonal pilot sequences is a straightforward way to decrease the contamination, since each pilot can be assigned to fewer users in the network. The price to pay is a larger pilot overhead, but it seems that a reuse factor of 3 or 4 is often suitable from a sum rate perspective in cellular networks. Joint spatial division and multiplexing (JSDM) provides a basic methodology to take spatial correlation into account in the pilot reuse patterns.
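
The overhead side of this tradeoff is simple arithmetic. The sketch below assumes that each orthogonal pilot occupies one symbol of the coherence block, so that a reuse factor `f` with `K` users per cell requires `f * K` pilot symbols. The function name and the numbers (10 users per cell, 200 symbols per block) are hypothetical.

```python
def prelog_factor(users_per_cell, reuse_factor, coherence_block):
    """Fraction of each coherence block left for data after the pilots."""
    pilot_length = users_per_cell * reuse_factor
    if pilot_length > coherence_block:
        raise ValueError("pilots do not fit in the coherence block")
    return 1 - pilot_length / coherence_block


for f in (1, 3, 4, 7):
    print(f, prelog_factor(users_per_cell=10, reuse_factor=f, coherence_block=200))
```

With these example numbers, a reuse factor of 3 spends 15% of the block on pilots and a reuse factor of 7 spends 35%. The sketch covers only the cost; the gain from reduced contamination has to be weighed against it.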

Alternatively, pilot sequences can be superimposed on the data sequences, which gives as many orthogonal pilot sequences as the length of the uplink block and thereby reduces the pilot contamination. This approach also removes the pilot overhead, but it comes at the cost of causing interference between pilot and data transmissions. It is therefore important to assign the right fraction of power to pilots and data. A hybrid pilot solution, where some users have superimposed pilots and some have conventional pilots, may bring the best of both worlds.

If two cells use the same subset of pilots, the exact pilot-user assignment can make a large difference. Cell-center users are generally less sensitive to pilot contamination than cell-edge users, but finding the best assignment is a hard combinatorial problem. There are heuristic algorithms that can be used, as well as an optimization framework for evaluating such algorithms.
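
To get a feel for the combinatorial nature of the problem, here is a toy exhaustive search. It is not one of the algorithms from the literature. It assumes two cells, a fixed assignment in cell 1 (user `p` uses pilot `p`), and a made-up metric: the worst ratio between the interfering and desired channel gains seen at base station 1. All names and gain values are hypothetical.

```python
from itertools import permutations


def worst_contamination(assignment, desired_gain, interfering_gain):
    """Largest interfering-to-desired gain ratio over all pilots.

    assignment[p] is the cell-2 user that reuses pilot p, which is also
    used by cell-1 user p.
    """
    return max(interfering_gain[u] / desired_gain[p]
               for p, u in enumerate(assignment))


def best_assignment(desired_gain, interfering_gain):
    """Exhaustive search over all K! assignments (feasible for tiny K only)."""
    users = range(len(interfering_gain))
    return min(permutations(users),
               key=lambda a: worst_contamination(a, desired_gain, interfering_gain))


desired = [1.0, 0.1, 0.01]          # cell-1 users: center, middle, edge
interfering = [0.001, 0.005, 0.02]  # cell-2 users as seen at base station 1
print(best_assignment(desired, interfering))
```

In this example, the search pairs the strongest interferer with the cell-center user, in line with the observation that cell-center users tolerate contamination better. The number of candidate assignments grows as K!, which is why heuristics are needed for realistic cell sizes.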

Multi-cell cooperation

A combination of network MIMO and macro diversity can be utilized to turn the coherent interference into desired signals. This approach is called pilot contamination precoding by Ashikhmin et al. and can be applied in both uplink and downlink. In the uplink, the base stations receive different linear combinations of the user signals. After maximum ratio combining, the coefficients in the linear combinations approach deterministic numbers as the number of antennas grows large. These numbers are only non-zero for the pilot-sharing users. Since the macro diversity naturally creates different linear combinations, the base stations can jointly solve a linear system of equations to obtain the transmitted signals. In the downlink, all signals are sent from all base stations and are precoded in such a way that the coherent interference sent from different base stations cancels out. While this is a beautiful approach for mitigating the coherent interference, it relies heavily on channel hardening, favorable propagation, and i.i.d. Rayleigh fading. It remains to be shown if the approach can provide performance gains under more practical conditions.
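
The uplink step can be illustrated with a toy example. The sketch assumes two cells with one pilot-sharing user each, the noise-free asymptotic regime, and a hypothetical coefficient matrix `B` that stands in for the deterministic limits after maximum ratio combining. It illustrates only the linear-system idea, not the full scheme of Ashikhmin et al.

```python
def solve_2x2(B, z):
    """Solve B x = z for a 2x2 system using Cramer's rule."""
    (a, b), (c, d) = B
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("coefficient matrix is singular")
    return ((z[0] * d - b * z[1]) / det, (a * z[1] - c * z[0]) / det)


# Hypothetical limiting coefficients: row l holds what base station l sees.
B = [[1.0, 0.3],
     [0.2, 1.0]]
x = (1 + 1j, -1 + 1j)  # symbols sent by the two pilot-sharing users

# Each base station observes a different linear combination of the symbols.
z = [B[l][0] * x[0] + B[l][1] * x[1] for l in range(2)]

x_hat = solve_2x2(B, z)
print(x_hat)
```

The system is solvable because macro diversity makes the rows of `B` linearly independent. With a finite number of antennas, the coefficients are only approximately deterministic, and this is where the assumptions mentioned above come into play.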