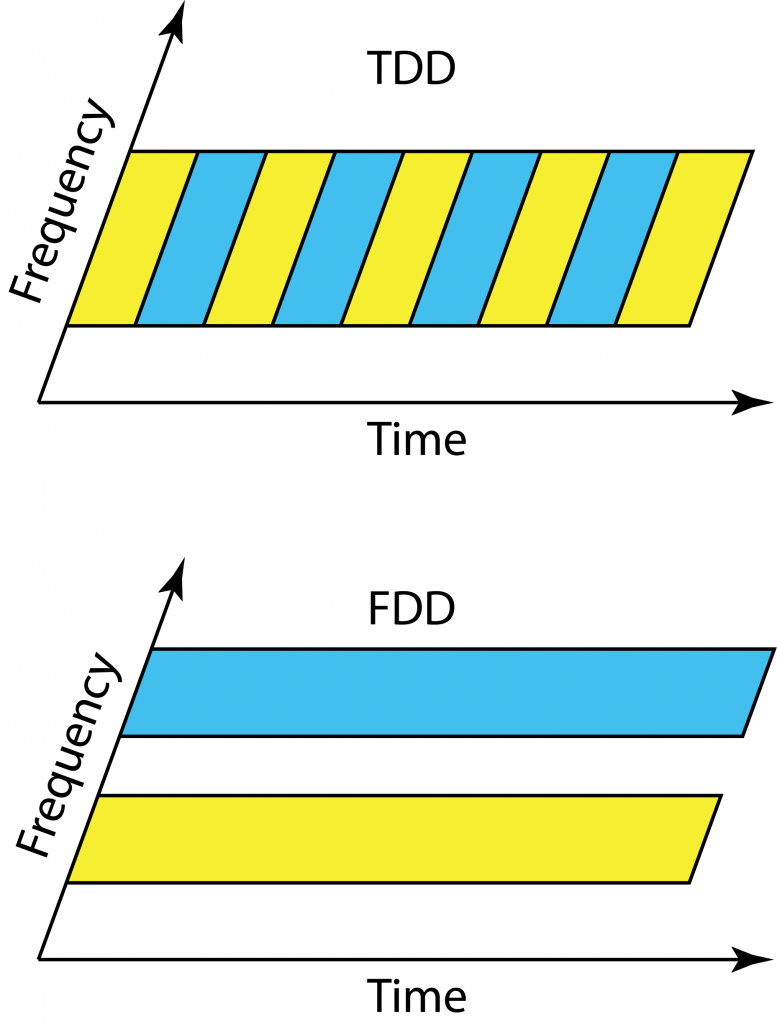

LTE was designed to work equally well in time-division duplex (TDD) and frequency-division duplex (FDD) mode, so that operators could choose their mode of operation depending on their spectrum licenses. In contrast, Massive MIMO clearly works best in TDD, since the pilot overhead is prohibitive in FDD (even if there are some potential solutions that partially overcome this issue).

Clearly, we will see a larger focus on TDD in future networks, but there are some traditional disadvantages with TDD that we need to bear in mind when designing these networks. I describe the three main ones below.

Link budget

Even if we allocate the same amount of time-frequency resources to uplink and downlink in TDD and FDD operation, there is an important difference. We transmit over half the bandwidth all the time in FDD, while we transmit over the whole bandwidth half of the time in TDD. Since the power amplifier is only active half of the time, if the peak power is the same, the average radiated power is effectively cut in half. This means that the SNR is 3 dB lower in TDD than in FDD, when transmitting at maximum peak power.

Massive MIMO systems are generally interference-limited and use power control to assign a reduced transmit power to most users, so the impact of the 3 dB SNR loss at maximum peak power is immaterial in many cases. However, there will always be some unfortunate low-SNR users (e.g., at the cell edge) that would like to communicate at maximum peak power in both uplink and downlink, and therefore suffer from the 3 dB SNR loss. If these users are still able to connect to the base station, the beamforming gain provided by Massive MIMO will probably more than compensate for the loss in link budget as compared to single-antenna systems. One can discuss whether it should be the peak power or the average radiated power that is constrained in practice.

Guard period

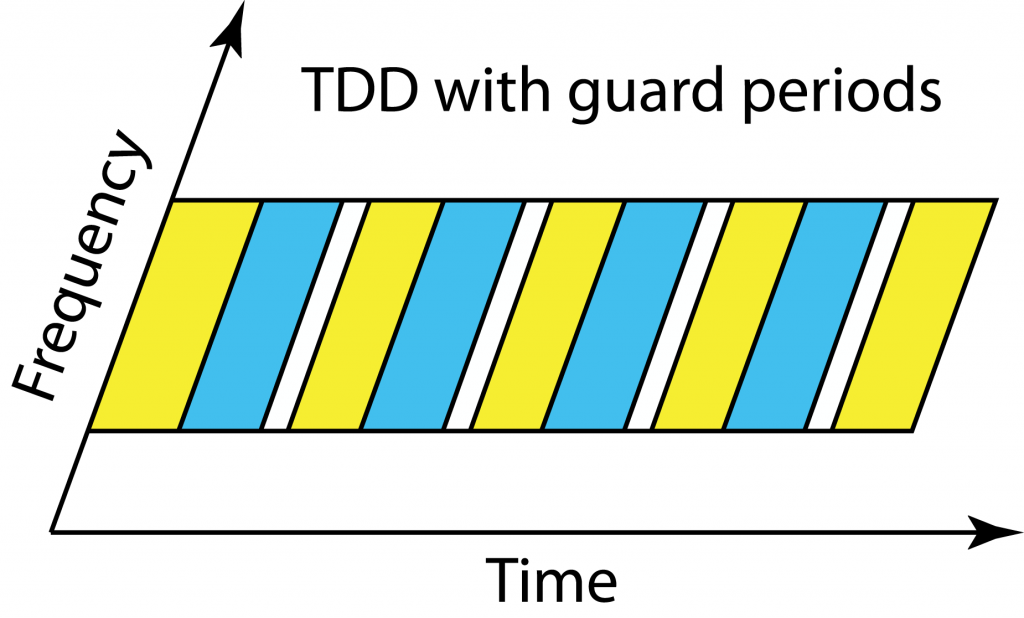

In TDD, everyone in the cell should be in uplink mode or in downlink mode at the same time. Since the users are at different distances from the base station and have different delay spreads, they will receive the end of the downlink transmission block at different time instances. If a cell-center user starts to transmit in the uplink immediately after receiving the full downlink block, then users at the cell edge will receive a combination of the delayed downlink transmission and the cell-center users’ uplink transmissions. To avoid such uplink-downlink interference, there is a guard period in TDD so that all users wait with uplink transmission until the outermost users are done with the downlink.

In fact, the base station gives every user a timing bias to make sure that when the uplink commences, the users’ uplink signals are received in a time-synchronized fashion at the base station. Therefore, the outermost users will start transmitting in the uplink before the cell-center users. Thanks to this feature, the largest guard period is needed when switching from downlink to uplink, while the uplink-to-downlink switching period can be short. This is positive for Massive MIMO operation since we want to use uplink CSI in the next downlink block, but not the other way around.

The guard period in TDD must become larger when the cell size increases, meaning that a larger fraction of the transmission resources disappears. Since no guard periods are needed in FDD, the largest benefits of TDD will be seen in urban scenarios where the macro cells have a radius of a few hundred meters and the delay spread is short.
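To get a feeling for how the guard period scales with the cell size, here is a minimal Python sketch. It rests on an assumption that is not stated in the post: the guard period is modeled as the round-trip propagation delay to the cell edge plus the delay spread plus a hardware switching time. The function names, the parameters, and the frame duration are hypothetical and only serve as illustration.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def guard_period(cell_radius_m, delay_spread_s=0.0, switching_time_s=0.0):
    """Guard period in seconds under the assumed model:
    round-trip delay to the cell edge + delay spread + switching time."""
    round_trip_s = 2 * cell_radius_m / SPEED_OF_LIGHT
    return round_trip_s + delay_spread_s + switching_time_s


def guard_overhead(cell_radius_m, frame_duration_s,
                   delay_spread_s=0.0, switching_time_s=0.0):
    """Fraction of a frame that is lost to one guard period."""
    guard_s = guard_period(cell_radius_m, delay_spread_s, switching_time_s)
    return guard_s / frame_duration_s


if __name__ == "__main__":
    # Hypothetical 1 ms frame, compared for a small and a large cell.
    for radius_m in (300.0, 3000.0, 30000.0):
        share = guard_overhead(radius_m, frame_duration_s=1e-3)
        print(f"radius {radius_m:8.0f} m -> guard overhead {100 * share:.2f} %")
```

Under this model, the propagation part of the guard period grows linearly with the cell radius, which is why small urban cells lose the least.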

Inter-cell synchronization

We want to avoid interference between uplink and downlink within a cell, and the same thing applies to inter-cell interference. The base stations in different cells should be fairly time-synchronized so that the uplink and downlink take place at the same time; otherwise, it might happen that a cell-edge user receiving a downlink signal from its own base station is interfered by the uplink transmission from a neighboring user that is connected to another base station.

This can also be an issue between telecom operators that use neighboring frequency bands. There are strict regulations on the permitted out-of-band radiation, but the out-of-band interference can still be larger than the desired inband signal if the interferer is very close to the receiving inband user. Hence, it is preferred that the telecom operators also synchronize their switching between uplink and downlink.

Summary

Massive MIMO will bring great gains in spectral efficiency in future cellular networks, but we should not forget about the traditional disadvantages of TDD operation: a 3 dB loss in SNR at peak power transmission, larger guard periods in larger cells, and the need for time synchronization between neighboring base stations.

Thanks for your post,

I have one confusion about “Link budget”. I didn’t understand why we have a 3 dB difference. Basically, if we compare the transmission of FDD and TDD, FDD has a smaller bandwidth, while TDD is using two times more bandwidth. However, this is the other way around in time resources. So, it seems to me that with a fixed peak power, both should have the same SNR. In the simplest case, I assume that the PA in FDD and TDD is active with P_max. So, the power received by the user is the same for TDD and FDD, equal to (P_max.BW)/2. Can you please help me see where I’m making the mistake…?

Is the AWGN noise at receiver causing the 3dB difference for SNR due to having a larger bandwidth for the TDD compared to FDD…?

This is a good question, which is not so easy to grasp. Let’s use the following notation: P is the peak power, N_0 is the noise power spectral density, and B is the total bandwidth.

Let’s compute the signal energy and noise energy over one second and compute the ratio of them to get the SNR.

In FDD: The signal energy is P*1, the noise energy is (B/2)*N_0*1. The SNR is 2*P/(B*N_0).

In TDD: The signal energy is P*0.5, the noise energy is B*N_0*0.5. The SNR is P/(B*N_0).

There is a difference by a factor 2, which is 3 dB. While the noise energy is the same in both cases, the signal energy is different since the power amplifier is only active half of the time.
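The energy bookkeeping above can be checked numerically. The following is a minimal Python sketch of exactly that calculation; the function names and the numerical values (peak power, noise power spectral density, bandwidth) are hypothetical and chosen only for illustration.

```python
import math


def snr_fdd(peak_power, noise_psd, total_bandwidth, duration=1.0):
    """FDD: transmit all the time over half of the total bandwidth."""
    signal_energy = peak_power * duration
    noise_energy = (total_bandwidth / 2) * noise_psd * duration
    return signal_energy / noise_energy


def snr_tdd(peak_power, noise_psd, total_bandwidth, duration=1.0):
    """TDD: transmit half of the time over the whole bandwidth."""
    active_time = 0.5 * duration
    signal_energy = peak_power * active_time
    noise_energy = total_bandwidth * noise_psd * active_time
    return signal_energy / noise_energy


def tdd_loss_db(peak_power, noise_psd, total_bandwidth):
    """SNR loss of TDD relative to FDD, in dB, at the same peak power."""
    ratio = (snr_fdd(peak_power, noise_psd, total_bandwidth)
             / snr_tdd(peak_power, noise_psd, total_bandwidth))
    return 10 * math.log10(ratio)


if __name__ == "__main__":
    # Hypothetical values: P = 1 W, N_0 = 4e-21 W/Hz, B = 20 MHz.
    print(f"TDD loss: {tdd_loss_db(1.0, 4e-21, 20e6):.2f} dB")
```

The loss does not depend on the particular values of P, N_0, and B, since they cancel in the ratio and only the factor 2 remains.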

Great work sir.

Thanks

This is explained on page 16 of Fundamentals of Massive MIMO.

http://www.cambridge.org/gr/academic/subjects/engineering/wireless-communications/fundamentals-massive-mimo?format=HB#zEfleREqh63j8jxc.97

Thanks for throwing light on these basics. A good read!!

Could you also please elaborate on the RxBN problem that FDD cell phones face? I think we need filters on board, which may not be a problem with TDD, making the cell phones potentially costlier.

The uplink and downlink bands are usually well separated in frequency so it should be fairly easy to filter out interference from the transmitter to the receiver part. Most cell phones are already operating in FDD so this is not a big issue.

How to take the signal detection in the frequency domain?

The typical approach is to detect one codeword at a time, where the length of the codeword depends on the coding scheme. Depending on the modulation scheme, the codeword will span a particular time interval and frequency interval.

@Emil Björnson: Thank you very much for your nice explanation 🙂

@Erik G. Larsson: Thanks, I looked it up in your book 🙂

Thank you for the informative post.

May I ask a question on the frame structure?

Here are two options:

uplink pilot, uplink data, downlink data, guard period,.. (1)

or

uplink data, uplink pilot, downlink data, guard period,.. (2)

Do you think (1) or (2) is the better?

Option (2) is theoretically more appealing since the time difference between the channel estimation and the transmission of the last downlink data is smaller, thus the channel estimates will be less outdated. However, you will have to buffer all the uplink data before you can decode it using the uplink pilot, and you will have little time to compute a good precoder using the uplink pilot. Option (1) does not have the last two issues.

In summary, there is no definitive answer to your question.
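The tradeoff between the two options can be made concrete with a small Python sketch. The phase lengths (counted in symbols) are hypothetical, as are the function names; the sketch only counts how old the channel estimate is when the downlink ends and how much uplink data must be buffered before the pilot has arrived.

```python
def csi_age_at_downlink_end(frame):
    """Symbols between the end of the uplink pilot and the end of the downlink data."""
    elapsed = 0
    pilot_end = None
    downlink_end = None
    for phase, length in frame:
        elapsed += length
        if phase == "uplink pilot":
            pilot_end = elapsed
        elif phase == "downlink data":
            downlink_end = elapsed
    return downlink_end - pilot_end


def uplink_symbols_to_buffer(frame):
    """Uplink data symbols that arrive before the uplink pilot."""
    buffered = 0
    for phase, length in frame:
        if phase == "uplink pilot":
            break
        if phase == "uplink data":
            buffered += length
    return buffered


# Hypothetical phase lengths, in symbols.
OPTION_1 = [("uplink pilot", 10), ("uplink data", 90),
            ("downlink data", 90), ("guard period", 10)]
OPTION_2 = [("uplink data", 90), ("uplink pilot", 10),
            ("downlink data", 90), ("guard period", 10)]

if __name__ == "__main__":
    for name, frame in (("(1)", OPTION_1), ("(2)", OPTION_2)):
        print(name, "CSI age:", csi_age_at_downlink_end(frame),
              "buffered uplink symbols:", uplink_symbols_to_buffer(frame))
```

With these made-up lengths, option (2) halves the age of the channel estimate at the end of the downlink, at the price of buffering the entire uplink data block.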

Thank you for the informative post. I have a slightly off-topic question regarding the differences in the propagation delay for different users. Assuming that all the users transmit orthogonal pilots, do different delays affect this orthogonality when the signals arrive at the base station (as the synchronization would be invalid)? If yes, what is the solution for this?

Yes, there are different propagation delays for different users, but the system will make sure to time-synchronize them so that the orthogonality of the pilots still remains. This is called “timing advance”. Users close to the base station start transmitting later than those who are further away. In an OFDM system, the synchronization needs to be sufficiently accurate to make the remaining time differences smaller than the duration of the cyclic prefix.
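As a rough illustration of timing advance, here is a minimal Python sketch. It assumes pure line-of-sight propagation delay (distance divided by the speed of light) and ignores delay spread; the function names, the user distances, and the 4.7 microsecond cyclic prefix are hypothetical values picked for the example.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def uplink_start_time(estimated_distance_m, target_arrival_s):
    """When a user should start transmitting so that its signal
    reaches the base station at the target arrival time."""
    return target_arrival_s - estimated_distance_m / SPEED_OF_LIGHT


def arrival_time(start_time_s, true_distance_m):
    """When a signal sent at start_time_s reaches the base station."""
    return start_time_s + true_distance_m / SPEED_OF_LIGHT


def within_cyclic_prefix(arrival_times_s, cyclic_prefix_s):
    """True if the spread of arrival times is shorter than the cyclic prefix."""
    return max(arrival_times_s) - min(arrival_times_s) < cyclic_prefix_s


if __name__ == "__main__":
    distances_m = [100.0, 2000.0]   # hypothetical users
    target_s = 1e-3                 # hypothetical uplink start at the base station
    cp_s = 4.7e-6                   # illustrative cyclic prefix duration

    # No timing advance: everyone starts at the same instant.
    no_ta = [arrival_time(target_s, d) for d in distances_m]
    # Timing advance: far users start earlier, near users later.
    with_ta = [arrival_time(uplink_start_time(d, target_s), d) for d in distances_m]

    print("aligned without timing advance:", within_cyclic_prefix(no_ta, cp_s))
    print("aligned with timing advance:   ", within_cyclic_prefix(with_ta, cp_s))
```

In practice the distance estimates are imperfect, so the arrival times will not coincide exactly; what matters is that the residual differences stay below the cyclic prefix.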

Thanks a lot. I have one more question regarding the utilization of OFDM for uplink training.

OFDM implies high PAPR, which might be difficult to realize in user terminals due to hardware constraints, as far as I know. Are other transmission techniques, such as TDM, FDM or CDM, feasible for uplink training? Do they have significant disadvantages compared to OFDM under realistic channel conditions, e.g., frequency-selective channels and synchronization issues?

OFDM is going to be used in the uplink of 5G, so apparently this is no longer considered a major issue. It is true that OFDM has a fairly high PAPR, but it is not that much higher than alternatives such as single-carrier transmission (see Figure 1 in https://arxiv.org/pdf/1510.01397.pdf). What we really want to achieve with Massive MIMO is high spectral efficiency through spatial multiplexing, so TDM/FDM/CDM are not feasible alternatives in that respect.

Nice, but what is

1. uplink pilot

2. uplink data

3. downlink data

4. BS processing

for massive TDD protocol?

I explain these things in detail in Section 2 of my book Massive MIMO Networks, which you can download from massivemimobook.com