One of the main impairments in wireless communications is small-scale channel fading. This refers to random fluctuations in the channel gain, which are caused by microscopic changes in the propagation environment. The fluctuations make the channel unreliable, since the channel gain is occasionally very small and the transmitted data is then received in error.

The diversity achieved by sending a signal over multiple channels with independent realizations is key to combating small-scale fading. Spatial diversity is particularly attractive, since it can be obtained simply by having multiple antennas at the transmitter or the receiver. Suppose the probability of a bad channel gain realization is $p$. If we have $M$ antennas with independent channel gains, then the risk that all of them are bad is $p^M$. For example, with $p=0.1$, there is a 10% risk of getting a bad channel in a single-antenna system and a 0.000001% risk in an 8-antenna system. This shows that just a few antennas can be sufficient to greatly improve reliability.
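The arithmetic above is easy to reproduce. The short sketch below assumes, as the text does, that each antenna independently has a bad channel with probability $p$. The function name `prob_all_bad` is hypothetical and only used for illustration.

```python
def prob_all_bad(p: float, num_antennas: int) -> float:
    """Probability that all antennas have a bad channel, assuming independent
    channel gains that are each bad with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if num_antennas < 1:
        raise ValueError("need at least one antenna")
    # Independence: the joint probability is the product of the marginals, p^M.
    return p ** num_antennas


if __name__ == "__main__":
    # Print the risk in percent for a few antenna counts, with p = 0.1.
    for m in (1, 2, 4, 8):
        print(f"M = {m}: {100 * prob_all_bad(0.1, m):.6f} %")
```

The case $M=8$ reproduces the 0.000001% figure quoted above, since $0.1^8 = 10^{-8}$.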

In Massive MIMO systems, with a “massive” number of antennas at the base station, the spatial diversity also leads to something called “channel hardening”. This terminology was already used in a paper from 2004:

B. M. Hochwald, T. L. Marzetta, and V. Tarokh, “Multiple-antenna channel hardening and its implications for rate feedback and scheduling,” IEEE Transactions on Information Theory, vol. 50, no. 9, pp. 1893–1909, 2004.

In short, channel hardening means that a fading channel behaves as if it were a non-fading channel. The randomness is still there, but its impact on the communication is negligible. In the 2004 paper, the hardening is measured by dividing the instantaneous supported data rate by the fading-averaged data rate. If the relative fluctuations of this ratio are small, then the channel has hardened.
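To make the rate-based measure concrete, here is a minimal Monte Carlo sketch under assumptions chosen only for illustration: a single-user link where the receiver coherently combines the $M$ antennas, so that the instantaneous rate is $\log_2(1+\mathrm{SNR}\,\|\mathbf{h}\|^2)$ with $\mathbf{h}$ the $M$-dimensional channel vector; independent Rayleigh fading with unit average gain per antenna; and a fixed SNR. The function `rate_fluctuation` and its parameters are hypothetical. It returns the standard deviation of the instantaneous rate divided by the fading-averaged rate.

```python
import math
import random
import statistics


def rate_fluctuation(num_antennas: int, snr: float, trials: int = 10_000,
                     seed: int = 1) -> float:
    """Std. deviation of R / E{R}, with R = log2(1 + snr * ||h||^2).

    Assumes h has i.i.d. CN(0, 1) entries, so each |h_m|^2 is Exp(1)."""
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    rates = []
    for _ in range(trials):
        # Instantaneous channel gain ||h||^2 as a sum of M exponential terms.
        gain = sum(rng.expovariate(1.0) for _ in range(num_antennas))
        rates.append(math.log2(1.0 + snr * gain))
    mean_rate = statistics.fmean(rates)  # sample estimate of E{R}
    return statistics.pstdev([r / mean_rate for r in rates])


if __name__ == "__main__":
    for m in (1, 10, 100):
        print(f"M = {m:3d}: std of R/E{{R}} = {rate_fluctuation(m, 1.0):.4f}")
```

Under these assumptions, the printed values should shrink as $M$ grows, which is what it means for the channel to harden in the rate sense.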

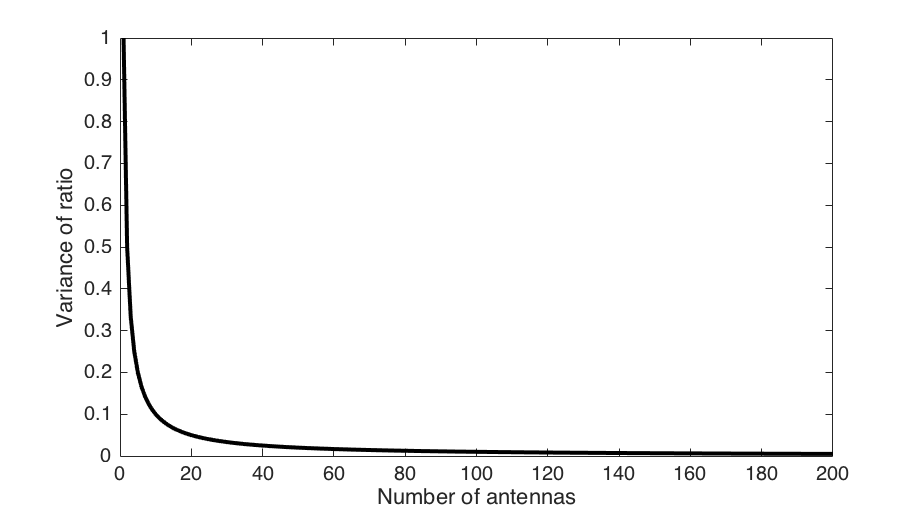

Since Massive MIMO systems contain random interference, it is usually the hardening of the channel over which the desired signal propagates that is studied. If this channel is described by a random $M$-dimensional vector $\mathbf{h}$, then the ratio $\|\mathbf{h}\|^2/\mathbb{E}\{\|\mathbf{h}\|^2\}$ between the instantaneous channel gain and its average is considered. If the fluctuations of the ratio are small, then there is channel hardening. With an independent Rayleigh fading channel, the variance of the ratio decreases with the number of antennas as $1/M$. The intuition is that the channel fluctuations average out over the antennas. A detailed analysis is available in a recent paper.
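The $1/M$ scaling follows in a few lines if we assume $\mathbf{h} \sim \mathcal{CN}(\mathbf{0}, \mathbf{I}_M)$, that is, independent Rayleigh fading with unit average gain per antenna (a normalization picked for convenience; any common scaling cancels in the ratio). Each $|h_m|^2$ is then exponentially distributed with mean and variance equal to one, and independence lets the variances add:

```latex
\begin{aligned}
\|\mathbf{h}\|^2 &= \sum_{m=1}^{M} |h_m|^2, \qquad |h_m|^2 \sim \mathrm{Exp}(1) \text{ i.i.d.},\\
\mathbb{E}\{\|\mathbf{h}\|^2\} &= \sum_{m=1}^{M} \mathbb{E}\{|h_m|^2\} = M, \qquad
\operatorname{Var}\{\|\mathbf{h}\|^2\} = \sum_{m=1}^{M} \operatorname{Var}\{|h_m|^2\} = M,\\
\operatorname{Var}\left\{\frac{\|\mathbf{h}\|^2}{\mathbb{E}\{\|\mathbf{h}\|^2\}}\right\}
  &= \frac{\operatorname{Var}\{\|\mathbf{h}\|^2\}}{\left(\mathbb{E}\{\|\mathbf{h}\|^2\}\right)^2}
   = \frac{M}{M^2} = \frac{1}{M}.
\end{aligned}
```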

The figure above shows how the variance of $\|\mathbf{h}\|^2/\mathbb{E}\{\|\mathbf{h}\|^2\}$ decays with the number of antennas. The convergence towards zero is gradual, and so is the channel hardening effect. I personally think that you need at least $M=50$ antennas to truly benefit from channel hardening.
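A curve like the one in the figure can be approximated by simulation. The sketch below assumes independent Rayleigh fading with unit average gain per antenna, so that each $|h_m|^2$ is exponentially distributed with mean one and $\mathbb{E}\{\|\mathbf{h}\|^2\} = M$. The function `hardening_variance` is a hypothetical helper, not taken from the paper behind the figure. It estimates the variance of the ratio and prints it next to the analytical value $1/M$.

```python
import random
import statistics


def hardening_variance(num_antennas: int, trials: int = 10_000,
                       seed: int = 0) -> float:
    """Sample variance of ||h||^2 / E{||h||^2} for h with i.i.d. CN(0, 1)
    entries; each |h_m|^2 is then Exp(1), so E{||h||^2} = num_antennas."""
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    ratios = [
        sum(rng.expovariate(1.0) for _ in range(num_antennas)) / num_antennas
        for _ in range(trials)
    ]
    return statistics.pvariance(ratios)


if __name__ == "__main__":
    for m in (1, 10, 50, 100):
        print(f"M = {m:3d}: simulated {hardening_variance(m):.4f}, "
              f"1/M = {1 / m:.4f}")
```

With this model, $M=50$ gives a variance of about $0.02$, meaning a standard deviation of roughly 14% around the average gain.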

Channel hardening has several practical implications. One is the improved reliability of having a nearly deterministic channel, which results in lower latency. Another is the lack of scheduling diversity; that is, one cannot schedule users when their $\|\mathbf{h}\|^2$ values are unusually large, since the fluctuations are small. There is also little to gain from estimating the current realization of $\|\mathbf{h}\|^2$, since it is relatively close to its average value. This can alleviate the need for downlink pilots in Massive MIMO.
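The loss of scheduling diversity can be illustrated with a simplified model that is our own assumption, not taken from the text: $K$ users with independent Rayleigh fading channels, and a scheduler that always picks the user with the largest $\|\mathbf{h}_k\|^2$. The hypothetical function `scheduling_gain` estimates how much larger the selected user's gain is than the average gain $M$. In this model, the gain from picking the best user should fade towards one as $M$ grows.

```python
import random
import statistics


def scheduling_gain(num_antennas: int, num_users: int, trials: int = 2_000,
                    seed: int = 2) -> float:
    """Estimate E{max_k ||h_k||^2} / E{||h_k||^2}.

    Assumes every user's channel has i.i.d. CN(0, 1) entries, so each
    |h_m|^2 is Exp(1) and E{||h_k||^2} = num_antennas."""
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    best = []
    for _ in range(trials):
        # Instantaneous channel gains of all users in this realization.
        gains = [sum(rng.expovariate(1.0) for _ in range(num_antennas))
                 for _ in range(num_users)]
        # Greedy scheduler (an assumption): serve the strongest user.
        best.append(max(gains) / num_antennas)
    return statistics.fmean(best)


if __name__ == "__main__":
    for m in (1, 10, 100):
        print(f"M = {m:3d}: best-of-10 gain = {scheduling_gain(m, 10):.3f}")
```

With a single antenna, picking the best of 10 users roughly triples the average gain, whereas with 100 antennas the improvement shrinks to a modest fraction, since all users' gains are close to their common average.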

I regularly get the question “are there any Massive MIMO books?”. So far my answer has always been “no”, but now I can finally give a positive answer.