Contemporary base stations are equipped with analog-to-digital converters (ADCs) that take samples described by 12-16 bits. Since the communication bandwidth is up to 100 MHz in LTE Advanced, a sampling rate of 500 Msample/s is quite sufficient for the ADC. The power consumption of such an ADC is on the order of 1 W. Hence, in a Massive MIMO base station with 100 antennas, the ADCs would consume around 100 W!
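
To make the arithmetic explicit, here is a minimal back-of-the-envelope sketch in Python. The variable names are mine, and two things are assumptions rather than statements from the figures above: one ADC per antenna, and real-valued sampling (so the minimum sampling rate is taken as twice the bandwidth).

```python
# Back-of-the-envelope ADC power budget using the figures quoted above.
NUM_ANTENNAS = 100         # assumption: one ADC per antenna
POWER_PER_ADC_W = 1.0      # "on the order of 1 W" per high-resolution ADC
BANDWIDTH_HZ = 100e6       # LTE Advanced, up to 100 MHz
SAMPLING_RATE_HZ = 500e6   # 500 Msample/s

total_adc_power_w = NUM_ANTENNAS * POWER_PER_ADC_W

# Assumption: real-valued sampling, so the minimum rate is twice the bandwidth.
nyquist_rate_hz = 2 * BANDWIDTH_HZ
oversampling_factor = SAMPLING_RATE_HZ / nyquist_rate_hz

print(f"Total ADC power: {total_adc_power_w:.0f} W")
print(f"Oversampling relative to the Nyquist rate: {oversampling_factor:.1f}x")
```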

Fortunately, the 1600 bits per sample that are effectively produced by 100 16-bit ADCs are much more than what is needed to communicate at practical SINRs. For this reason, there is plenty of research on Massive MIMO base stations equipped with lower-resolution ADCs. The use of 1-bit ADCs has received particular attention. Some good paper references are provided in a previous blog post: “Are 1-bit ADCs sufficient?” While many early works considered narrowband channels, recent papers (e.g., “Quantized massive MU-MIMO-OFDM uplink”) have demonstrated that 1-bit ADCs can also be used in practical frequency-selective wideband channels. I’m impressed by the analytical depth of these papers, but I don’t think it is practically meaningful to use 1-bit ADCs.

Do we really need 1-bit ADCs?

I think the answer is no in most situations. The reason is that ADCs with a resolution of around 6 bits strike a much better balance between communication performance and power consumption. The state-of-the-art 6-bit ADCs are already very energy-efficient. For example, the paper “A 5.5mW 6b 5GS/S 4×-Interleaved 3b/cycle SAR ADC in 65nm CMOS” from ISSCC 2015 describes a 6-bit ADC that consumes 5.5 mW and has a huge sampling rate of 5 Gsample/s, which is sufficient even for extreme mmWave applications with 1 GHz of bandwidth. In a base station equipped with 100 of these 6-bit ADCs, less than 1 W is consumed by the ADCs. That will likely be a negligible factor in the total power consumption of any base station, so what is the point of using a lower resolution than that?
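
For a rough sense of scale, the sketch below compares 100 of these 6-bit ADCs with 100 high-resolution ADCs at roughly 1 W each, the figure from the beginning of this post. It is plain arithmetic on the quoted numbers; the variable names are mine.

```python
# Compare the total ADC power of two hypothetical 100-antenna base stations.
NUM_ADCS = 100
HIGH_RES_POWER_W = 1.0    # roughly 1 W per 12-16 bit ADC, as quoted earlier
SIX_BIT_POWER_W = 5.5e-3  # 5.5 mW per ADC, from the ISSCC 2015 paper title

high_res_total_w = NUM_ADCS * HIGH_RES_POWER_W
six_bit_total_w = NUM_ADCS * SIX_BIT_POWER_W
savings_factor = high_res_total_w / six_bit_total_w

print(f"High-resolution ADCs: {high_res_total_w:.0f} W in total")
print(f"6-bit ADCs:           {six_bit_total_w:.2f} W in total")
print(f"Reduction:            about {savings_factor:.0f}x")
```

The total for the 6-bit design comes out at about half a watt, which is what "less than 1 W" refers to.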

The use of 1-bit ADCs comes with a substantial loss in communication rate. In contrast, there is a consensus that Massive MIMO with 3-5 bits per ADC performs very close to the unquantized case (see Paper 1, Paper 2, Paper 3, Paper 4, Paper 5). The same applies to 6-bit ADCs, which provide an additional margin that protects against strong interference. Note that there is nothing magical about 6-bit ADCs; maybe 5-bit or 7-bit ADCs will be even better, but I don’t think it is meaningful to use 1-bit ADCs.
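
To get a feeling for how quickly quantization distortion shrinks with resolution, here is a small illustrative simulation. It is only a sketch of a single ADC under my own assumptions (unit-variance Gaussian input, a uniform quantizer clipped at three standard deviations, no gain control), not the array-level analysis in the papers above. The fixed clipping level is not optimized, so the low-resolution numbers are pessimistic.

```python
import math
import random


def quantize(x, bits, clip=3.0):
    """Uniform mid-rise quantizer with 2**bits levels covering [-clip, clip]."""
    levels = 2 ** bits
    step = 2 * clip / levels
    index = math.floor(x / step)
    # Saturate inputs that fall outside the quantizer range.
    index = max(-levels // 2, min(levels // 2 - 1, index))
    return (index + 0.5) * step


def sqnr_db(bits, num_samples=50_000, seed=0):
    """Estimate the signal-to-quantization-noise ratio (in dB) by simulation."""
    rng = random.Random(seed)
    signal_power = 0.0
    noise_power = 0.0
    for _ in range(num_samples):
        x = rng.gauss(0.0, 1.0)  # assumption: unit-variance Gaussian input
        error = x - quantize(x, bits)
        signal_power += x * x
        noise_power += error * error
    return 10 * math.log10(signal_power / noise_power)


for bits in range(1, 7):
    print(f"{bits}-bit ADC: SQNR of roughly {sqnr_db(bits):.1f} dB")
```

The per-ADC distortion drops steadily with every added bit. In Massive MIMO, combining the signals from many antennas averages out part of the remaining distortion, which is one reason a few bits per ADC can go a long way.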

Will 1-bit ADCs ever become useful?

To select a 1-bit ADC, instead of an ADC with higher resolution, the energy consumption of the receiving device must be extremely constrained. I don’t think that will ever be the case in base stations, because the power amplifiers dominate their energy consumption. However, the case might be different for internet-of-things devices that are supposed to run for ten years on the same battery. To make 1-bit ADCs meaningful, we need to greatly simplify all the other hardware components as well. One potential approach is to make a dedicated spatial-temporal waveform design, as described in this paper.

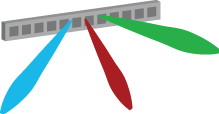

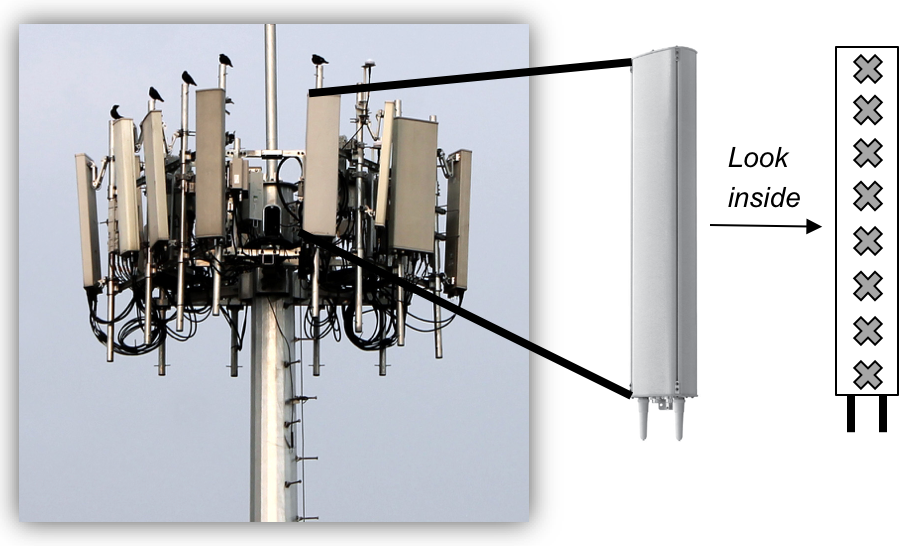

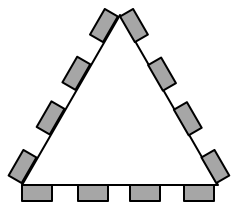

The picture above shows a real LTE site that I found in Nanjing, China, a couple of years ago. Looking at it from above, the site is structured as illustrated to the right. The site consists of three sectors, each containing a base station with four vertical panels. If you looked inside one of the panels, you would (probably) find 8 cross-polarized vertically stacked radiating elements, as illustrated in Figure 1. There are two RF input signals per panel, one per polarization, so each panel acts as two antennas. This is how LTE with 8TX-sectors is deployed: 4 panels with dual polarization per base station.
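
The antenna count can be tallied with a few lines of Python. This is just a sketch of the arithmetic: the class and field names are hypothetical, and the 8 elements per panel is my guess from above, not a measured value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteLayout:
    """Hypothetical description of the LTE site discussed above."""
    sectors: int = 3
    panels_per_sector: int = 4
    polarizations: int = 2        # one RF input per polarization
    elements_per_panel: int = 8   # cross-polarized elements; a guess

    @property
    def antennas_per_sector(self) -> int:
        # Each panel acts as one antenna per polarization.
        return self.panels_per_sector * self.polarizations

    @property
    def antennas_per_site(self) -> int:
        return self.sectors * self.antennas_per_sector

    @property
    def cross_polarized_elements_per_site(self) -> int:
        return self.sectors * self.panels_per_sector * self.elements_per_panel


layout = SiteLayout()
print(f"Antennas per sector: {layout.antennas_per_sector}")
print(f"Antennas per site:   {layout.antennas_per_site}")
print(f"Cross-polarized radiating elements per site: "
      f"{layout.cross_polarized_elements_per_site}")
```

The 8 antennas per sector are what "8TX" refers to, even though each sector contains many more radiating elements.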

The hardback version of the massive