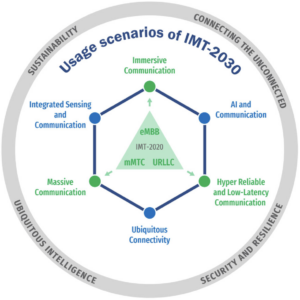

“6G should be for the many, not the few” is the final sentence of the book The 6G Manifesto by William Webb (published in October 2024). He presents a vision for the evolution of wireless communication technology driven by the goal of providing everyone with sufficiently fast connectivity to support their applications everywhere and at any time. This design goal was uncommon eight years ago, when Webb described it in his book “The 5G Myth”, which I reviewed previously. However, it has become quite mainstream since then. “Uniformly great service for everyone” [Marzetta, 2015] is the main motivation behind the research into Cell-free Massive MIMO systems. Ericsson uses the term limitless connectivity for its 6G vision, in which “mobile networks deliver limitless connectivity for all applications, allowing anyone and anything to connect truly anywhere and at any time”. The International Telecommunication Union (ITU) has dedicated one of its official 6G usage scenarios to ubiquitous connectivity, which should “provide digital inclusion for all by meaningfully connecting the rural and remote communities, further extending into sparsely populated areas, and maintaining the consistency of user experience between different locations including deep indoor coverage.” [Recommendation ITU-R M.2160-0, 2023]

The unique and interesting contribution of the book is the proposed way to realize the vision. The author claims that we already have the network equipment needed to provide almost ubiquitous connectivity, but that it is unavailable to users because of poor system integration. Today, each device is subscribed to one of several cellular networks, connected to at most one of many WiFi networks, and seldom capable of using direct-to-satellite connectivity. Webb's central claim is that we would come a long way toward ubiquitous connectivity if each device were always connected to the “best” network (cellular, WiFi, satellite, etc.) among those that can be reached at its location. This approach makes intuitive sense if one recalls that a phone can often present a long list of WiFi networks (to which one lacks the passwords) and that, in some situations with insufficient coverage, only emergency calls are possible (these are routed through another operator's cellular network).

Some coverage holes will remain even if each device can seamlessly connect to any available network. Webb categorizes these challenges and suggests different solutions for each category. Rural coverage gaps can potentially be filled by more cell towers, high-altitude platforms, and satellite connectivity. Urban not-spots can be removed by selective deployment of small cells. Issues in public transportation can be addressed by leaky feeders in tunnels and by in-train WiFi that connects to cellular networks through antennas mounted on the outside of the train.

Enabling Multi-Network Coordination

Letting any device connect to any available wireless network is easier said than done. There are legal, commercial, and safety issues to overcome in practice, and the book provides no concrete solutions to them. Instead, it focuses on the technological level, particularly how to control which network a device is connected to and how to manage handovers between networks. Webb argues that the device cannot decide which network to use because it lacks sufficient knowledge about the networks. Similarly, the mobile network operator that the user is subscribed to cannot decide because it has limited knowledge of other networks. Hence, the proposed solution is to create a centralized multi-network coordinator, e.g., at the national level. This entity would act as a middleman with detailed knowledge about all networks, so that it can control the connectivity of all devices. Webb suggests using the QUIC transport-layer protocol to minimize interruptions in data transfer when devices are moved between networks.
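The book does not specify how the coordinator would make its decisions, so the following is only a toy sketch of what its core step could look like. Everything in it is my own hypothetical assumption rather than anything from the book: the `assign_devices` function, the model in which each network's capacity is shared equally among its attached devices, and the greedy order that serves devices with the fewest options first.

```python
# A toy, hypothetical sketch of a centralized multi-network coordinator.
# Assumptions (not from the book): each network's capacity is shared equally
# among its attached devices, and devices are assigned greedily, one at a time.

def assign_devices(reachable: dict[str, list[str]],
                   capacity_mbps: dict[str, float]) -> dict[str, str]:
    """Map each device to one reachable network.

    reachable: device -> networks it can reach at its current location
    capacity_mbps: network -> total capacity shared by its attached devices
    Devices that reach no network (coverage holes) are left unassigned.
    """
    load = {network: 0 for network in capacity_mbps}
    assignment: dict[str, str] = {}
    # Serve the devices with the fewest options first so they are not crowded out.
    for device in sorted(reachable, key=lambda d: len(reachable[d])):
        options = reachable[device]
        if not options:
            continue  # coverage hole: nothing to connect to
        # Pick the network that would give this device the largest equal share.
        best = max(options, key=lambda n: capacity_mbps[n] / (load[n] + 1))
        assignment[device] = best
        load[best] += 1
    return assignment


if __name__ == "__main__":
    capacity = {"cellular_A": 100.0, "wifi_hotspot": 300.0, "satellite": 50.0}
    reachable = {
        "phone1": ["cellular_A", "wifi_hotspot"],
        "phone2": ["wifi_hotspot"],
        "phone3": ["cellular_A", "satellite"],
        "phone4": [],
    }
    print(assign_devices(reachable, capacity))
    # → {'phone2': 'wifi_hotspot', 'phone1': 'wifi_hotspot', 'phone3': 'cellular_A'}
```

A real coordinator would also need to account for handover costs, signaling overhead, and time-varying radio conditions for millions of devices, which is where the scalability concern about computations and signaling comes in.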

There are already limited-scale attempts to do what Webb suggests. One example is the eduroam system, which runs across thousands of research and education institutes. Once a device has been configured for one such WiFi network, it automatically connects to any of them. Another example is that recent iPhone models can send emergency messages via satellite without requiring a subscription. Many phones can also use WiFi and cellular links simultaneously to make the connectivity more stable, but at the price of shorter battery life. A further example is the company Icomera, which sells an aggregation platform for trains that integrates cellular and satellite links from multiple network operators to provide more stable connectivity for travelers.

The practical benefits of having a multi-network coordinator are clear and convincing. However, there is a risk that the computations and overhead signaling required to operate this coordination entity at scale will be enormous; thus, further research and development are required. The book will hopefully motivate researchers, businesses, and agencies to look deeper into these issues.

Poorly Substantiated Claims

The main issue with the book is how it promotes its interesting vision by making some poorly substantiated claims and poking fun at the mainstream 6G visions, which it calls “5G-on-steroids”. Two chapters are dedicated to presenting and discussing cherry-picked statements from white papers by manufacturers and academics to give the impression that they are advocating something entirely different from what the book does. The book overlooks the fact that ubiquitous connectivity is one of the six 6G usage scenarios defined by the ITU (see the “wheel diagram”). It is easy to find alternative quotes from Ericsson, Huawei, Nokia, Samsung, and academia that support the author's vision, but he chose not to include them.

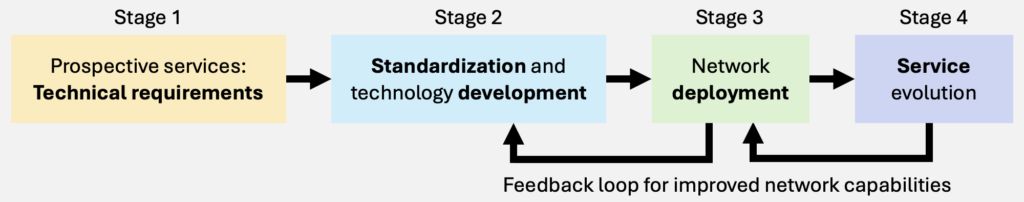

The “5G-on-steroids” moniker is used to ridicule the need for more capable 6G networks in terms of bit rates, latency, and network capacity. One part of the argument is: “As seen with 5G, there are no new applications on the horizon, and even if there were 5G is capable of handling all of them. Operators do not want 6G because they perceive it will result in expense for them for no benefit.” This is a strange claim because operators are free to choose which technologies and functionalities to deploy based on their business plans. In the 5G era, they have so far mainly deployed non-standalone 5G networks to manage the increasing data demand in their networks. The extra functionalities provided by standalone 5G networks (i.e., by adding a 5G core) are meant to create new revenue. Since most people already have cellphone subscriptions, new revenue streams require creating new services and devices for businesses or people. These things take time but remain on the horizon, as I discussed in a blog post about the current 5G disappointment.

Even in a pessimistic scenario where no new monetizable services arise, one would expect operators to need more capacity in their networks, since worldwide data traffic keeps growing year after year. However, based on the observation that the traffic growth rate has decayed over the last few years, the book claims that data traffic will plateau before the end of the decade. No convincing evidence is provided to support this claim; the author merely refers to his previous book as if it had established this as a fact. I hypothesize that the wireless traffic growth rate will converge to the overall Internet traffic growth rate (17% in 2024) because wireless technology is becoming deeply integrated into the general connectivity ecosystem, and that traffic will continue to grow, just as the use of most other resources on Earth does. To be fair, these are just two different speculative predictions of the future, so we must wait and see what happens. The main issue is that the book uses the zero-traffic-growth assumption as its main argument for why the only problem that remains to be solved in the telecom world is global coverage, which is shaky ground to build on.
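To illustrate how far apart the two predictions are, assume, purely for illustration, that traffic $T$ keeps growing at a constant annual rate $g$ equal to the 17% observed in 2024 (the symbols and the constant-rate assumption are mine, not a forecast):

```latex
\frac{T_{2030}}{T_{2024}} = (1+g)^{6} = 1.17^{6} \approx 2.57,
\qquad
n_{\text{double}} = \frac{\ln 2}{\ln(1+g)} = \frac{\ln 2}{\ln 1.17} \approx 4.4 \text{ years}.
```

A plateau corresponds to $g \to 0$ and a ratio close to 1, so the two assumptions lead to very different capacity needs by the end of the decade.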

Another peculiar claim in the book is that the 5G air interface was “anything but new” because it remained built on OFDM. This ignores the fact that non-standalone 5G is all about exploiting Massive MIMO (an air interface breakthrough) to enable higher bit rates and cell capacity through spatial multiplexing, in addition to the extra bandwidth. This oversight becomes particularly clear when the book discusses how 6G might reach 10 times higher data rates than 5G. It argues that 10 times more bandwidth is needed, which can only be found at much higher carrier frequencies, where many more base stations are required, so the deployment cost would grow rapidly. This chain of arguments is challenged by the fact that one can alternatively achieve 10x by using 2.5 times more spectrum and 4 times more MIMO layers, which is plausible in the upper mid-band without the need for major densification.
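The 10x arithmetic can be made explicit with a simplified, idealized rate model (my assumption, not taken from the book): the rate $R$ is roughly the number of spatially multiplexed layers $L$ times the bandwidth $B$ times the per-layer spectral efficiency. If the per-layer SNR is kept unchanged, the log term cancels in the ratio:

```latex
R \approx L \cdot B \cdot \log_2(1 + \mathrm{SNR}),
\qquad
\frac{R_{\text{6G}}}{R_{\text{5G}}}
= \frac{L_{\text{6G}}}{L_{\text{5G}}} \cdot \frac{B_{\text{6G}}}{B_{\text{5G}}}
= 4 \times 2.5 = 10 .
```

In practice, spreading the transmit power over more bandwidth and more layers lowers the per-layer SNR unless it is compensated, for example by the array gain of larger antenna arrays, so this ratio should be read as an idealized target rather than a prediction.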

My Verdict

The 6G Manifesto presents a compelling vision for reaching globally ubiquitous network coverage by first letting any device connect to any wireless network already in place. A centralized multi-network coordinator must be created to govern such a system. The remaining coverage holes could be filled by investing in new infrastructure that covers only the missing pieces. It is worth investigating whether this is a scalable solution with reasonable operational costs and whether one can build a meaningful legal and commercial framework around it. However, when reading the book, one must keep in mind that its descriptions of the current situation, of the prospects for new services that generate more traffic and revenue, and of the mainstream 6G visions are shaky and adapted to fit the book's narrative.