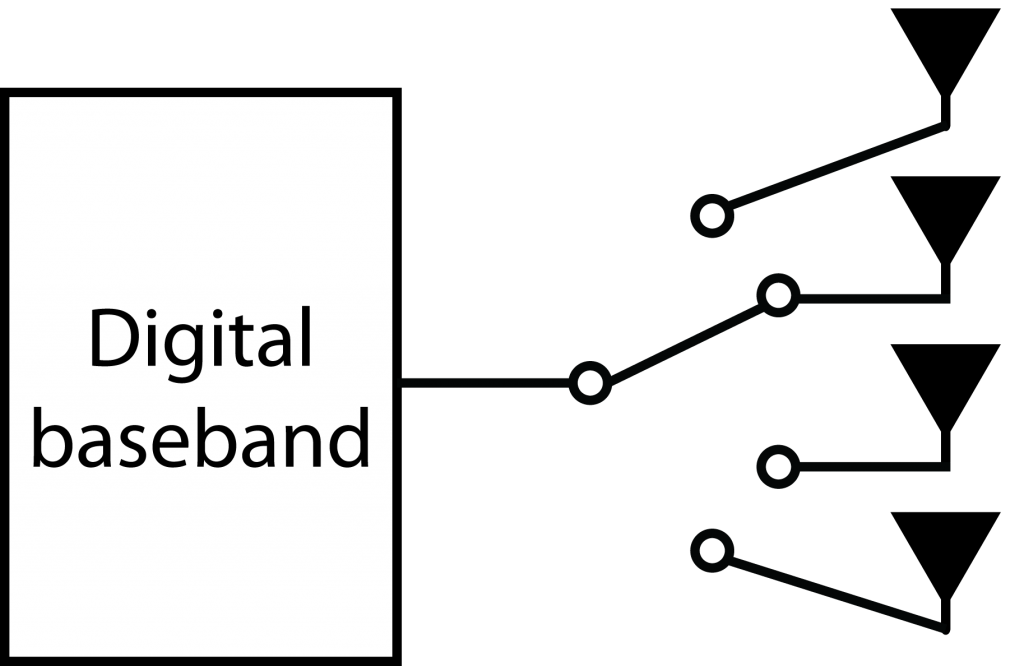

There has been a lot of fuss about hybrid analog-digital beamforming in the development of 5G. Strangely, it is not because of this technology’s merits but rather due to a general disbelief in the telecom industry’s ability to build fully digital transceivers in frequency bands above 6 GHz. I find this rather odd; we are living in a society that is becoming increasingly digitalized, with everything changing from analog to digital. Why would wireless technology suddenly move in the opposite direction?

When Marzetta published his seminal Massive MIMO paper in 2010, the idea of having an array with a hundred or more fully digital antennas was considered science fiction, or at least prohibitively costly and power-consuming. Today, we know that Massive MIMO is actually a pre-5G technology, with 64-antenna systems already deployed in LTE systems operating below 6 GHz. These antenna panels are very competitive commercially; 95% of the base stations that Huawei is currently selling have at least 32 antennas. The fast technological development demonstrates that the initial skepticism toward Massive MIMO was based on misconceptions rather than fundamental facts.

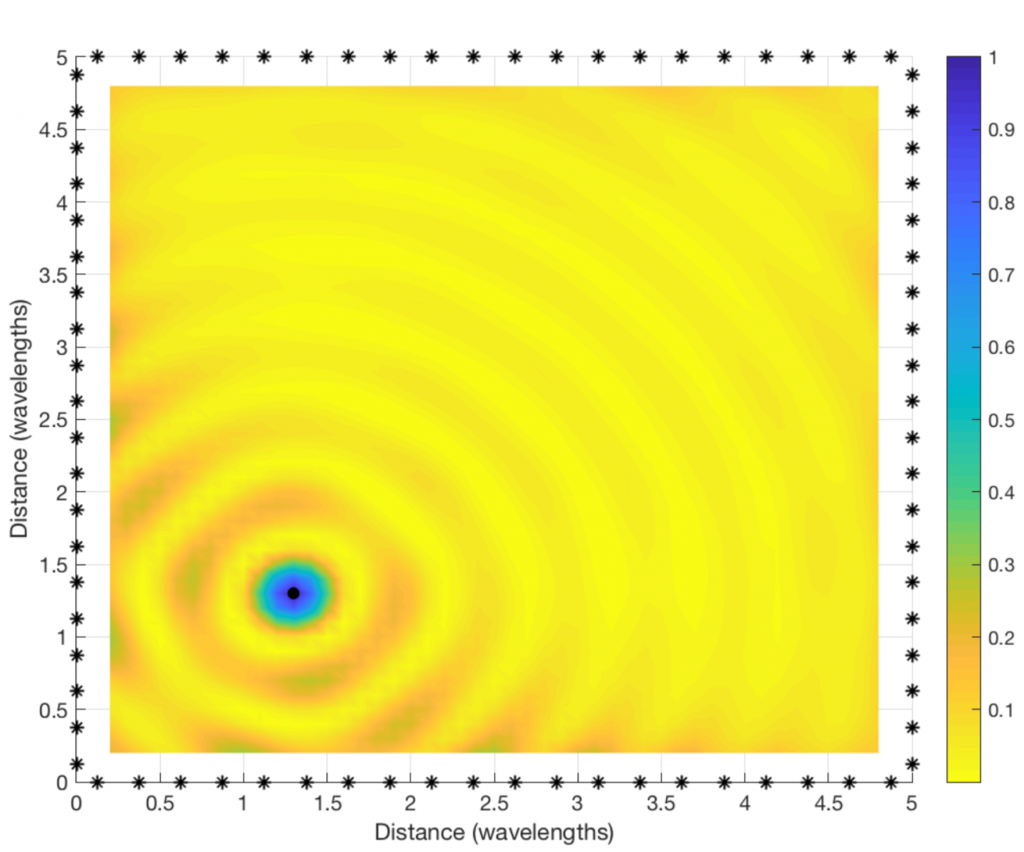

In the same way, nothing fundamental prevents the development of fully digital transceivers in mmWave bands; it is only a matter of time before such transceivers are developed and come to dominate the market. With digital beamforming, we can get rid of the complicated beam-searching and beam-tracking algorithms that have been developed over the past five years and achieve simpler and more reliable system operation, particularly when using TDD operation and reciprocity-based beamforming.
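To make the reciprocity argument concrete, here is a minimal Python sketch (an illustration, not taken from any product or standard) of how a fully digital array could beamform in TDD without any beam search. The base station estimates every antenna's channel from a single uplink pilot and, relying on channel reciprocity, reuses that estimate as a maximum-ratio (conjugate) downlink precoder. For comparison, it also sweeps a DFT codebook, which stands in for analog beam searching. The i.i.d. Rayleigh fading channel, the 24-antenna array, the noise level, and all function names are hypothetical choices made for the example.

```python
import cmath
import math
import random


def complex_gaussian(rng, var=1.0):
    """One circularly symmetric complex Gaussian sample with total variance var."""
    s = math.sqrt(var / 2)
    return complex(rng.gauss(0, s), rng.gauss(0, s))


def estimate_channel(received, pilot):
    """Least-squares channel estimate from one known uplink pilot symbol."""
    return [y / pilot for y in received]


def mr_precoder(h_hat):
    """Maximum-ratio (conjugate) precoder scaled to unit total transmit power."""
    norm = math.sqrt(sum(abs(x) ** 2 for x in h_hat))
    return [x.conjugate() / norm for x in h_hat]


def beamforming_gain(h, w):
    """Received power |h^T w|^2; TDD reciprocity lets the downlink reuse the uplink h."""
    return abs(sum(hm * wm for hm, wm in zip(h, w))) ** 2


def dft_beam(m_antennas, k):
    """k-th unit-norm, constant-modulus DFT beam (stand-in for an analog sweep codebook)."""
    return [cmath.exp(-2j * math.pi * k * m / m_antennas) / math.sqrt(m_antennas)
            for m in range(m_antennas)]


rng = random.Random(1)
M = 24            # number of digital transceiver chains (hypothetical)
pilot = 1 + 0j    # known pilot symbol (hypothetical)
noise_var = 0.01  # per-antenna noise variance during the pilot (hypothetical)

h = [complex_gaussian(rng) for _ in range(M)]  # i.i.d. Rayleigh fading (assumption)
received = [hm * pilot + complex_gaussian(rng, noise_var) for hm in h]

w = mr_precoder(estimate_channel(received, pilot))  # one pilot, no beam search
mr_gain = beamforming_gain(h, w)
sweep_gain = max(beamforming_gain(h, dft_beam(M, k)) for k in range(M))  # M trials

print(f"MR from one uplink pilot:    {mr_gain:.2f}")
print(f"Best of {M} swept DFT beams: {sweep_gain:.2f}")
print(f"Upper bound ||h||^2:         {sum(abs(x) ** 2 for x in h):.2f}")
```

By the Cauchy-Schwarz inequality, no unit-norm beam can exceed ||h||^2. With an accurate pilot estimate, the reciprocity-based MR precoder comes close to that bound after a single pilot, whereas the sweep has to test M beams and still only finds the best entry of a fixed codebook.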

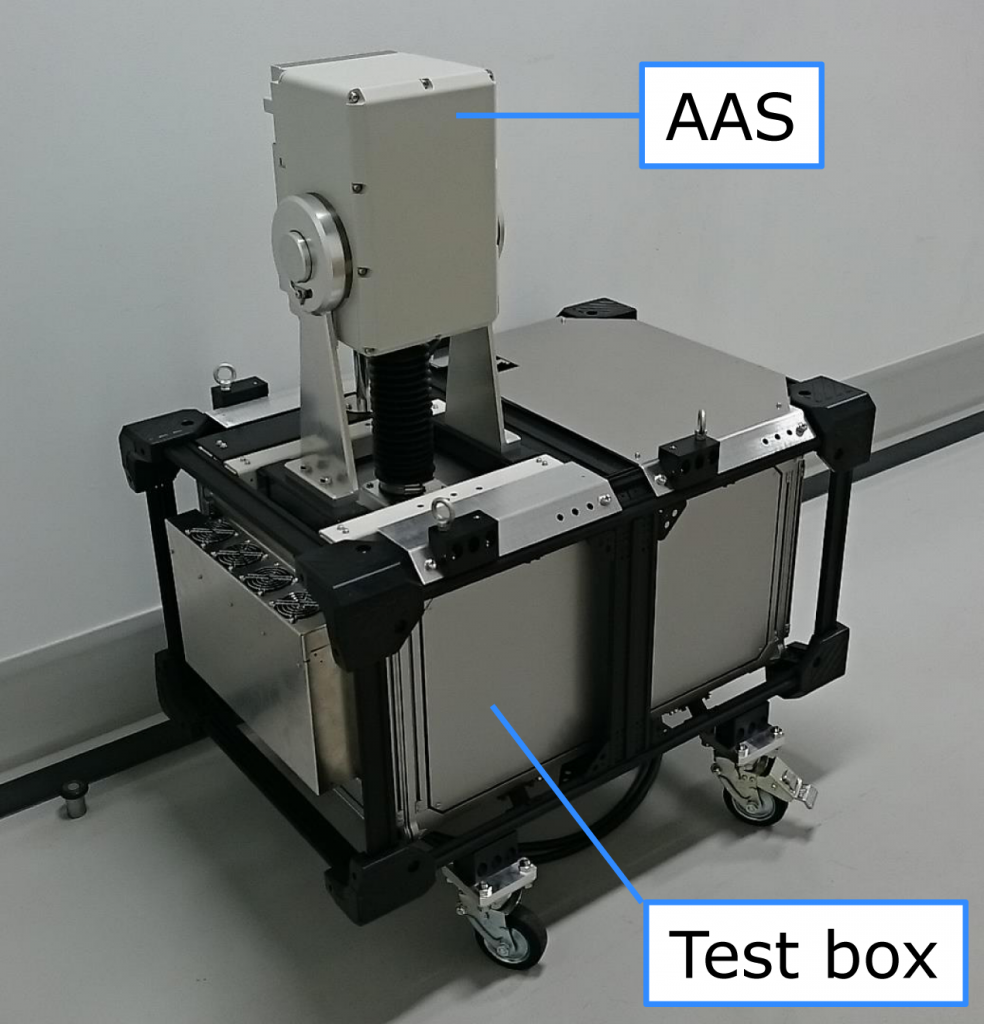

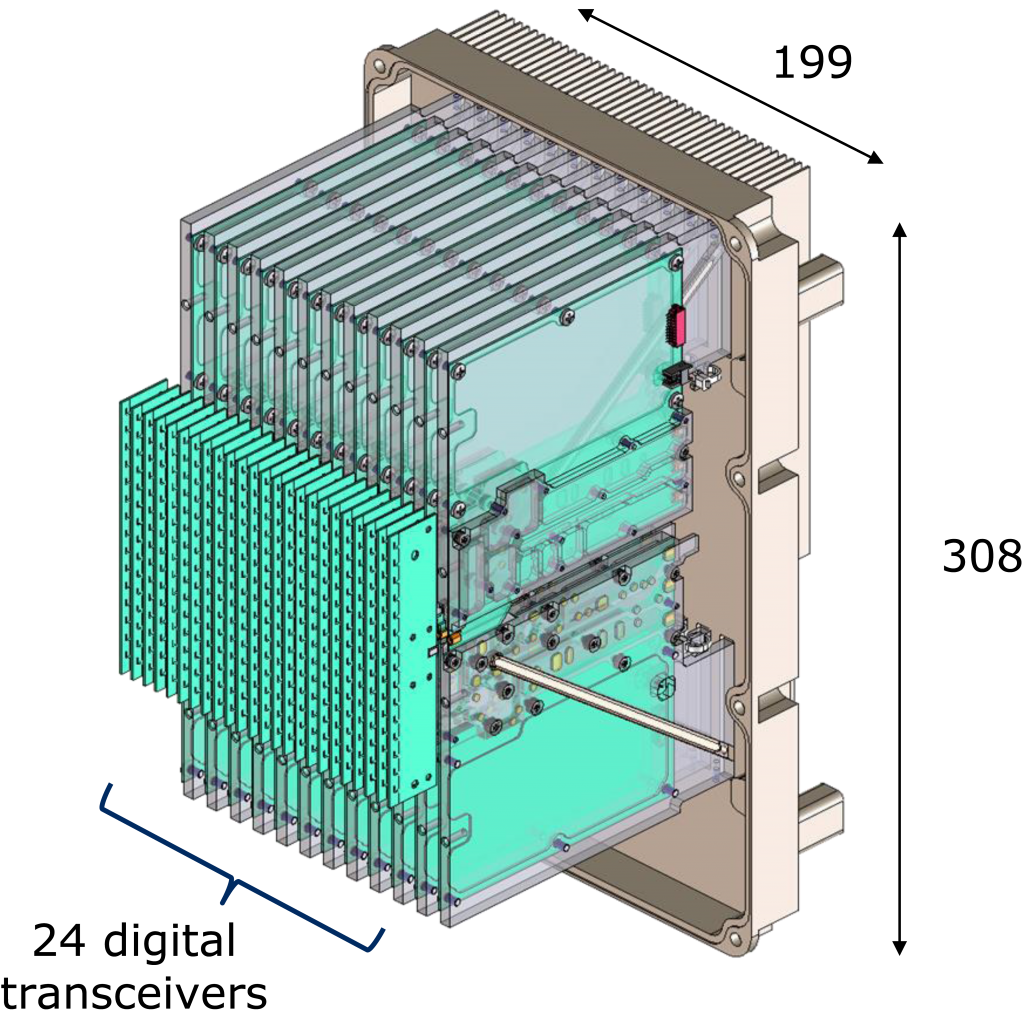

I didn’t jump onto the hybrid beamforming research train, since it already had many passengers and I thought that this research topic would become irrelevant after 5–10 years. But I was wrong: it now seems that the digital solutions will be released much earlier than I thought. At the 2018 European Microwave Conference, NEC Corporation presented an experimental verification of an active antenna system (AAS) for the 28 GHz band with 24 fully digital transceiver chains. The design is modular and consists of 24 horizontally stacked antennas, which means that the same design could be extended to even larger arrays.

Tomoya Kaneko, Chief Advanced Technologist for RF Technologies Development at NEC, told me that they aim to release a fully digital AAS in just a few years. So maybe hybrid analog-digital beamforming will be replaced by digital beamforming already at the beginning of the 5G mmWave deployments?

That said, I think that hybrid beamforming algorithms will have new roles to play in the future. The first generations of new communication systems might reach the market faster by using a hybrid analog-digital architecture, which requires hybrid beamforming, than by waiting for the fully digital implementation to be finalized. This could, for example, be the case for holographic beamforming or MIMO systems operating in the sub-THz bands. There will also continue to be non-mobile point-to-point communication systems with line-of-sight channels (e.g., satellite communications), where analog solutions are quite enough to achieve all the necessary performance gains that MIMO can provide.
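The line-of-sight point can be checked with a short Python sketch (again an illustration under stated assumptions, not a model of any real system). For a uniform linear array with half-wavelength spacing in pure LoS, every channel coefficient has unit magnitude, so a phase-only analog beamformer (one phase shifter per antenna) achieves the same gain ||h||^2 as fully digital maximum-ratio beamforming. Adding a hypothetical reflected path makes the coefficient magnitudes unequal, and then even the best phase-only beam falls short of the digital bound. The array size, angles, path amplitude, and function names are arbitrary choices for the example.

```python
import cmath
import math


def ula_steering(m_antennas, theta_rad):
    """LoS channel of a half-wavelength-spaced uniform linear array toward angle theta."""
    return [cmath.exp(-1j * math.pi * m * math.sin(theta_rad)) for m in range(m_antennas)]


def phase_only_beam(h):
    """Analog beamformer: co-phases every antenna with a fixed amplitude 1/sqrt(M)."""
    scale = 1 / math.sqrt(len(h))
    return [cmath.exp(-1j * cmath.phase(x)) * scale for x in h]


def gain(h, w):
    """Received power |h^T w|^2."""
    return abs(sum(hm * wm for hm, wm in zip(h, w))) ** 2


def digital_mr_gain(h):
    """Gain of fully digital maximum-ratio beamforming with perfect CSI: ||h||^2."""
    return sum(abs(x) ** 2 for x in h)


M = 24
los = ula_steering(M, math.radians(20))
# Pure LoS: both values should equal M = 24 up to floating-point rounding.
print(f"LoS:       analog {gain(los, phase_only_beam(los)):.2f}, "
      f"digital {digital_mr_gain(los):.2f}")

# LoS plus a weaker reflected path (amplitude 0.6, phase 0.8 rad, angle -35 deg; hypothetical).
reflected = ula_steering(M, math.radians(-35))
multipath = [a + 0.6 * cmath.exp(0.8j) * b for a, b in zip(los, reflected)]
print(f"Multipath: analog {gain(multipath, phase_only_beam(multipath)):.2f}, "
      f"digital {digital_mr_gain(multipath):.2f}")
```

Co-phasing is the best a constant-amplitude beam can do for a single stream, so the multipath shortfall reflects the lack of amplitude control in the analog architecture rather than a poor choice of phases.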