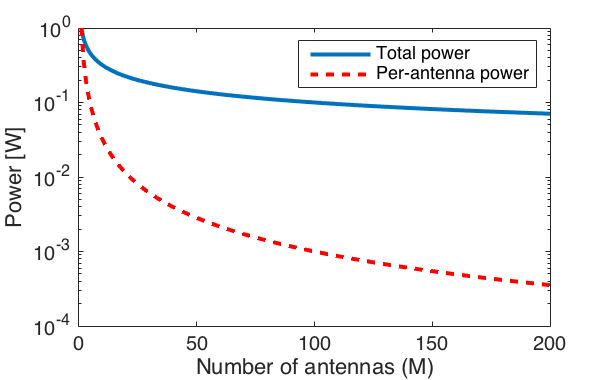

The received signal power is proportional to the number of antennas $M$ in Massive MIMO systems. This property is known as the array gain, and it can be utilized in two different ways.

One option is to let the signal power become $M$ times larger than in a single-antenna reference scenario. The increase in SNR will then lead to higher data rates for the users. The gain can be anything from $\log_2(M)$ bit/s/Hz to almost negligible, depending on how interference-limited the system is. Another option is to utilize the array gain to reduce the transmit power while maintaining the same SNR as in the reference scenario. The corresponding power saving can substantially improve the energy efficiency of the system.
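The two ways of spending the array gain can be made concrete with a small calculation. The following is a minimal sketch, assuming a noise-limited link whose rate follows the Shannon formula $\log_2(1+\mathrm{SNR})$; interference is not modeled, so the rate gains shown are the optimistic end of the range. The function names and the example values of `M` and the reference SNR are illustrative assumptions.

```python
import math


def rate_gain_bits(snr_ref: float, num_antennas: int) -> float:
    """Extra spectral efficiency (bit/s/Hz) when the SNR grows by a factor M.

    Assumes a noise-limited link with rate log2(1 + SNR); interference is ignored.
    """
    return math.log2(1 + num_antennas * snr_ref) - math.log2(1 + snr_ref)


def power_saving_db(num_antennas: int) -> float:
    """Transmit power reduction (dB) that keeps the reference SNR unchanged."""
    return 10 * math.log10(num_antennas)


M = 100  # illustrative number of antennas
for snr_db in (-10, 0, 10, 20):
    snr = 10 ** (snr_db / 10)
    gain = rate_gain_bits(snr, M)
    print(f"SNR_ref = {snr_db:>3} dB: rate gain = {gain:.2f} bit/s/Hz")

print(f"Upper limit log2(M) = {math.log2(M):.2f} bit/s/Hz")
print(f"Power saving at unchanged SNR = {power_saving_db(M):.1f} dB")
```

In this model the rate gain approaches $\log_2(M)$ bit/s/Hz as the reference SNR grows, while the alternative is a transmit power reduction of $10\log_{10}(M)$ dB at an unchanged rate.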

In the uplink, with single-antenna user terminals, we can choose between these options. However, in the downlink, we might not have a choice. There are strict regulations on the permitted level of out-of-band radiation in practical systems. Since Massive MIMO uses downlink precoding, the transmitted signals from the base station have a stronger directivity than in the single-antenna reference scenario. The signal components that leak into the bands adjacent to the intended frequency band will then also be more directive.

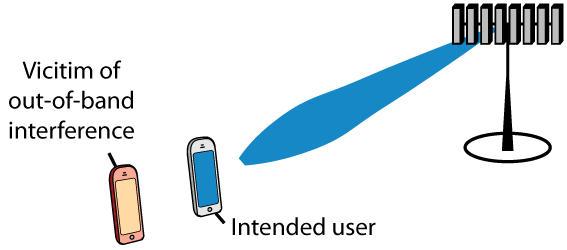

For example, consider a line-of-sight scenario where the precoding creates an angular beam towards the intended user (as illustrated in the figure below). The out-of-band radiation will then get a similar angular directivity and cause stronger interference to systems operating in adjacent bands if their receivers are close to the user (like the victim in the figure below). To counteract this effect, our only choice might be to reduce the downlink transmit power to keep the worst-case out-of-band radiation constant.

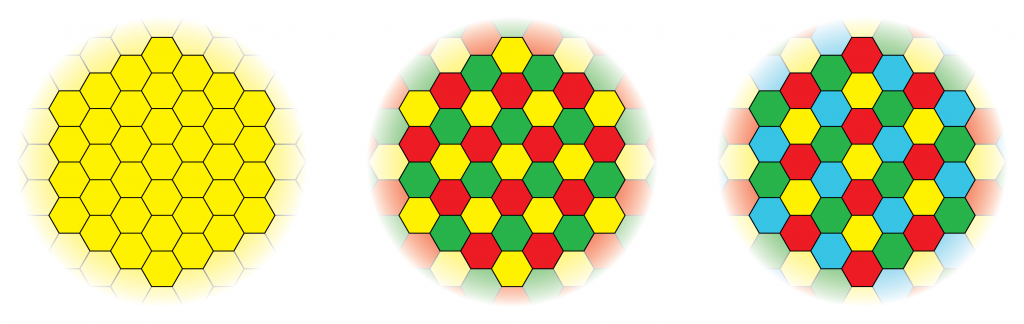
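The power back-off implied by this worst-case argument can be written out in one line. This is a back-of-the-envelope sketch, assuming that the out-of-band emission in the beam direction receives the full array gain $M$, that a fixed fraction $\eta$ of the transmit power leaks out of band, and that $\beta$ is the channel gain to the intended user. The symbols $\eta$, $\beta$, $P$ and $P_{\mathrm{ref}}$ are notation introduced here for illustration.

$$
\underbrace{M \eta P}_{\text{worst-case out-of-band, } M \text{ antennas}} = \underbrace{\eta P_{\mathrm{ref}}}_{\text{out-of-band, reference}}
\;\Longrightarrow\;
P = \frac{P_{\mathrm{ref}}}{M},
\qquad
\underbrace{M \beta P}_{\text{received signal power}} = \beta P_{\mathrm{ref}}.
$$

Under these assumptions the user ends up with the same received power as in the reference scenario, so the entire array gain is spent on the power reduction rather than on a higher SNR.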

Another alternative is that the regulations are made more flexible with respect to precoded transmissions. The probability that a receiver in an adjacent band is hit by an interfering out-of-band beam, such that the interference becomes $M$ times larger than in the reference scenario, decreases as the number of antennas grows, since the beams become narrower. Hence, if one can tolerate beamformed out-of-band interference provided that it occurs with sufficiently low probability, the array gain in Massive MIMO can still be utilized to increase the SNRs. A third option will then be to (partially) reduce the transmit power to also allow for relaxed linearity requirements of the hardware.
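The claim that narrower beams make a worst-case hit less likely can be illustrated numerically. The following is a rough sketch, not the analysis from the cited papers. It assumes a uniform linear array with half-wavelength spacing, a line-of-sight beam steered to broadside, a victim whose angle is uniformly distributed over the front half-plane, and out-of-band radiation that follows the same beam pattern as the in-band signal. A "hit" is defined here, arbitrarily, as being within 3 dB of the peak gain $M$.

```python
import cmath
import math


def array_gain(num_antennas: int, angle_rad: float, beam_angle_rad: float = 0.0) -> float:
    """Power gain towards angle_rad for a beam steered to beam_angle_rad.

    Uniform linear array, half-wavelength spacing, unit total transmit power,
    so the gain in the beam direction equals the number of antennas.
    """
    psi = math.pi * (math.sin(angle_rad) - math.sin(beam_angle_rad))
    response = sum(cmath.exp(1j * psi * m) for m in range(num_antennas))
    return abs(response) ** 2 / num_antennas


def hit_fraction(num_antennas: int, threshold_db: float = 3.0, num_angles: int = 4001) -> float:
    """Fraction of victim angles in [-90, 90] degrees within threshold_db of the peak gain."""
    threshold = num_antennas / 10 ** (threshold_db / 10)
    hits = 0
    for k in range(num_angles):
        angle = -math.pi / 2 + math.pi * k / (num_angles - 1)
        if array_gain(num_antennas, angle) >= threshold:
            hits += 1
    return hits / num_angles


for M in (1, 8, 32, 128):
    share = 100 * hit_fraction(M)
    print(f"M = {M:>3}: victim within 3 dB of the peak for {share:.2f}% of angles")
```

With a single antenna every direction counts as a hit, since the gain is the same everywhere. As `M` grows, the share of angles near the peak shrinks roughly in proportion to $1/M$, which is the effect that more flexible regulations could exploit.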

These considerations are nicely discussed in an overview article that appeared on arXiv earlier this year. There are also two papers that analyze the impact of out-of-band radiation in Massive MIMO: Paper 1 and Paper 2.

The 5G Myth is the provocative title of a recent book by
