The 5G Myth is the provocative title of a recent book by William Webb, CEO of Weightless SIG, a standards body for IoT/M2M technology. In this book, the author tells a compelling story of a stagnating market for cellular communications, where the customers are generally satisfied with the data rates delivered by the 4G networks. The revenue growth for the mobile network operators (MNOs) is relatively low and declining, since the current services are so good that the customers are unwilling to pay more for improved service quality. Although many new wireless services have materialized over the past decade (e.g., video streaming, social networks, video calls, mobile payment, and location-based services), the MNOs have failed to take the leading role in any of them. Instead, the customers make use of external services (e.g., YouTube, Facebook, Skype, Apple Pay, and Google Maps) and only pay the MNOs to deliver the data bits.

The author argues that, under these circumstances, the MNOs have little to gain from investing in 5G technology. Most customers are not asking for any of the envisaged 5G services and will not be inclined to pay extra for them. Webb even compares the situation with the prisoner’s dilemma: the MNOs would benefit the most from not investing in 5G, but they will invest anyway to avoid a situation where customers switch to a competitor that has invested in 5G. The picture that Webb paints of 5G is rather pessimistic compared to a recent McKinsey report, where more cost-efficient network operation is described as a key reason for MNOs to invest in 5G.

The author provides a refreshing description of the market for cellular communications, which is important at a time when the research community focuses more on broad 5G visions than on the customers’ actual needs. The book is thus a recommended read for 5G researchers, since we should all ask ourselves if we are developing a technology that tackles the right unsolved problems.

Webb criticizes not only the economic incentives for 5G deployment but also the 5G visions and technologies in general. The claims are in many cases reasonable; for example, Webb accurately points out that most of the 5G performance goals are overly optimistic and probably only required by a tiny fraction of the user base. He also accurately points out that some “5G applications” already have a wireless solution (e.g., indoor IoT devices connected over WiFi) or should preferably be wired (e.g., ultra-reliable low-latency applications such as remote surgery).

However, it is also in this part of the book that the argumentation sometimes falls short. For example, Webb extrapolates a recent drop in traffic growth to claim that the global traffic volume will reach a plateau in 2027. It is plausible that the traffic growth rate will decline as a larger and larger fraction of the global population gets access to wireless high-speed connections. But one should bear in mind that we have witnessed exponential growth in wireless communication traffic for the past century (known as Cooper’s law), so this trend can just as well continue for a few more decades, potentially at a lower growth rate than in the past decade.
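
To put rough numbers on the difference between a plateau and continued exponential growth at a lower rate, here is a minimal sketch of the compound-growth arithmetic. The doubling times are illustrative assumptions: 2.5 years is the figure commonly quoted for Cooper’s law, and 5 years is simply a hypothetical “half as fast” scenario. Neither number is taken from Webb’s book.

```python
def growth_factor(years, doubling_time_years):
    """Multiplicative growth after `years` if the quantity doubles every `doubling_time_years`."""
    return 2.0 ** (years / doubling_time_years)


def annual_growth_rate(doubling_time_years):
    """Equivalent year-over-year growth rate for a given doubling time."""
    return 2.0 ** (1.0 / doubling_time_years) - 1.0


# Assumed scenarios (illustrative, not data from the book).
scenarios = {
    "doubling every 2.5 years (often quoted for Cooper's law)": 2.5,
    "doubling every 5 years (hypothetical slower growth)": 5.0,
}

for label, doubling_time in scenarios.items():
    rate = annual_growth_rate(doubling_time)
    factor = growth_factor(10, doubling_time)
    print(f"{label}: {rate:.0%} per year, x{factor:.0f} over 10 years")
```

Even in the slower hypothetical scenario the traffic keeps multiplying over a decade, which is a very different outcome from a plateau.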

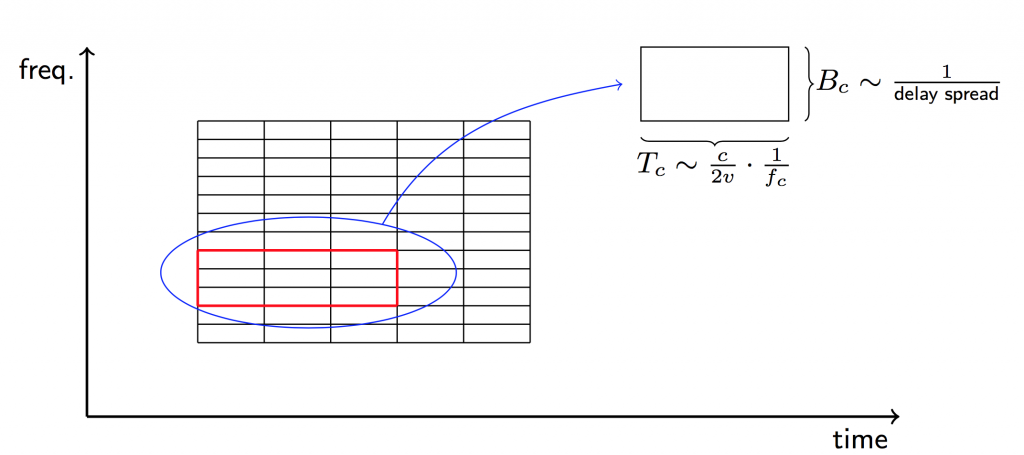

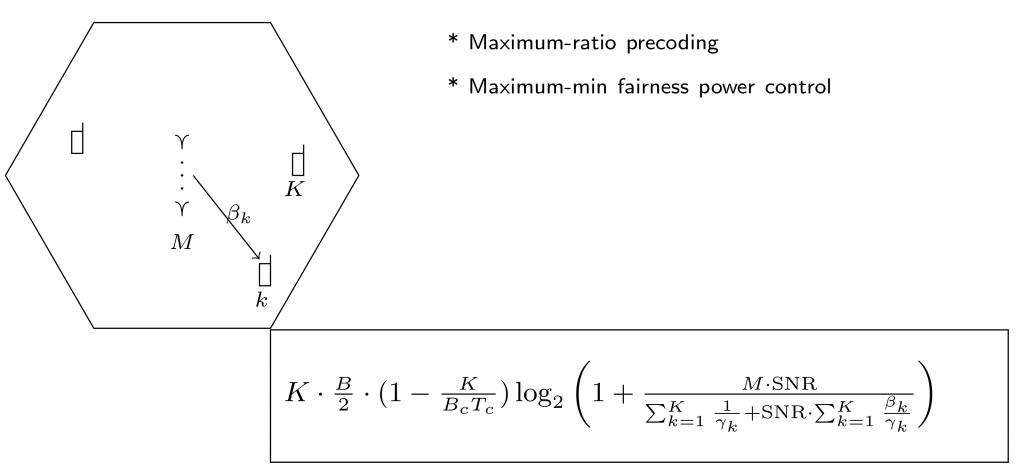

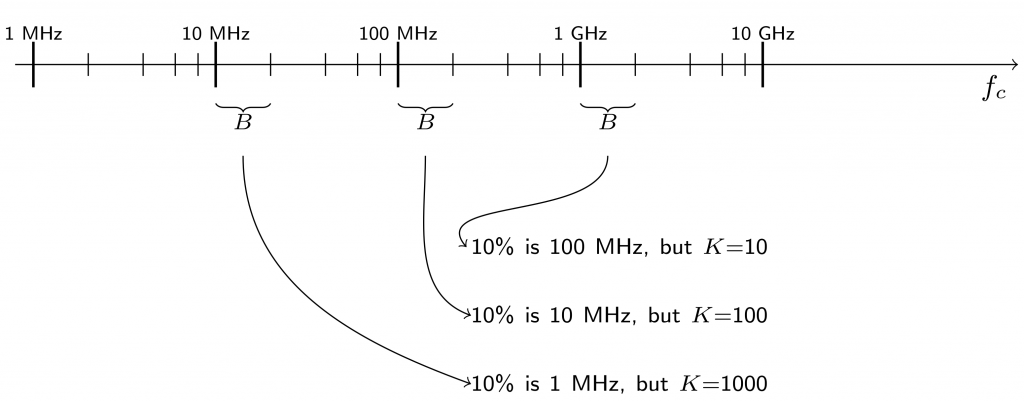

Webb also provides a misleading description of multiuser MIMO by claiming that 1) the antenna arrays would be unreasonably large at cellular frequencies and 2) the beamforming requires complicated angular beam-steering. These are two of the myths that we dispelled in the paper “Massive MIMO: Ten myths and one grand question” last year. In fact, testbeds have demonstrated that massive multiuser MIMO is feasible in lower frequency bands, and particularly useful to improve the spectral efficiency through coherent beamforming and spatial multiplexing of users. Reciprocity-based beamforming is a solution for mobile and cell-edge users, for which angular beam-steering indeed is inefficient.
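
The contrast between the two beamforming approaches can be illustrated with a small simulation. This is only a sketch under strong assumptions that are mine, not Webb’s or the paper’s: a single user, a uniform linear array with half-wavelength spacing, an i.i.d. Rayleigh fading channel (rich scattering, no dominant direction), and a perfect channel estimate, which in a reciprocity-based system would come from uplink pilots. All function names are hypothetical.

```python
import cmath
import math
import random


def rayleigh_channel(num_antennas, rng):
    """Draw i.i.d. CN(0, 1) channel coefficients (assumed rich scattering)."""
    std = math.sqrt(0.5)
    return [complex(rng.gauss(0.0, std), rng.gauss(0.0, std))
            for _ in range(num_antennas)]


def angular_beam(num_antennas, sin_angle, spacing_wavelengths=0.5):
    """Unit-norm steering vector of a uniform linear array for one direction."""
    norm = math.sqrt(num_antennas)
    return [cmath.exp(-2j * math.pi * spacing_wavelengths * m * sin_angle) / norm
            for m in range(num_antennas)]


def conjugate_beam(channel):
    """Unit-norm conjugate (maximum-ratio) beamformer built from the channel itself."""
    norm = math.sqrt(sum(abs(h) ** 2 for h in channel))
    return [h.conjugate() / norm for h in channel]


def gain(channel, weights):
    """Received signal power for unit transmit power: |sum_m h_m * w_m|^2."""
    return abs(sum(h * w for h, w in zip(channel, weights))) ** 2


def average_gains(num_antennas=32, trials=200, seed=1):
    """Average gain of conjugate beamforming and of the best beam in an angular grid."""
    rng = random.Random(seed)
    # Grid of num_antennas directions, uniformly spaced in sin(angle).
    grid = [angular_beam(num_antennas, -1.0 + 2.0 * k / num_antennas)
            for k in range(num_antennas)]
    total_conjugate = 0.0
    total_angular = 0.0
    for _ in range(trials):
        h = rayleigh_channel(num_antennas, rng)
        total_conjugate += gain(h, conjugate_beam(h))
        total_angular += max(gain(h, beam) for beam in grid)
    return total_conjugate / trials, total_angular / trials


if __name__ == "__main__":
    conjugate, angular = average_gains()
    print(f"conjugate beamforming: {conjugate:.1f}, best angular beam: {angular:.1f}")
```

Under these assumptions, the conjugate beamformer collects a gain that grows in proportion to the number of antennas, while even the best beam in the angular grid captures only a small part of it. In a pure line-of-sight channel the two approaches would perform much more alike, so the sketch describes the scattering-rich case only.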

The book is not as pessimistic about the future as it might seem from this review. Webb provides an alternative vision for future wireless communications, where consistent connectivity rather than higher peak rates is the main focus. This coincides with one of the 5G performance goals (i.e., 50 Mbit/s everywhere), but Webb advocates an extensive government-supported deployment of WiFi instead of 5G technology. The use of WiFi is not a bad idea; I personally consume relatively little cellular data since WiFi is available at home, at work, and at many public locations in Sweden. However, the cellular services are necessary to realize the dream of consistent connectivity, particularly outdoors and when in motion. This is where a 5G cellular technology that delivers better coverage and higher data rates at the cell edge is highly desirable. Reciprocity-based Massive MIMO seems to be the solution that can deliver this, so Webb would have had a stronger case if this technology were properly integrated into his vision.

In summary, the combination of 5G Massive MIMO for wide-area coverage and WiFi for local-area coverage might be the way to truly deliver consistent connectivity.
