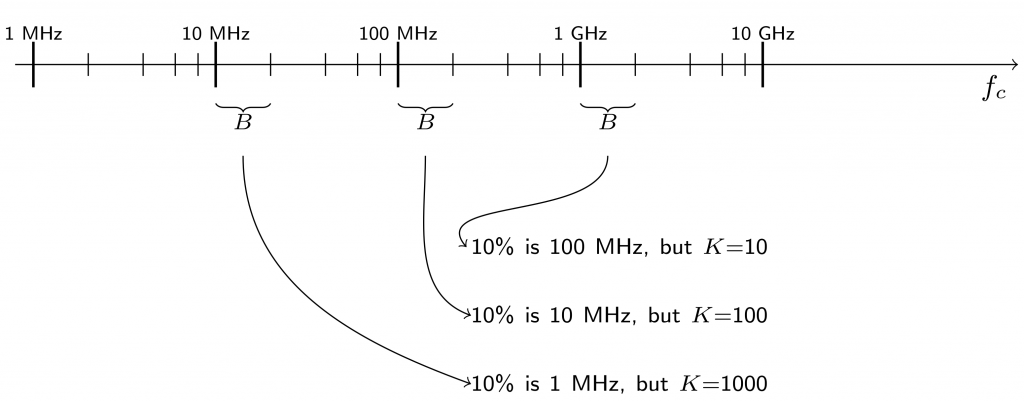

The Brooklyn summit last week was a great event. I gave a talk (here are the slides) comparing MIMO at “PCS” (2 GHz) and mmWave (60 GHz) in line-of-sight. There are two punchlines: first, scientifically, while a link budget calculation might predict that 128,000 mmWave antennas are needed to match the performance of 128-antenna PCS MIMO, there is a countervailing effect in that increasing the number of antennas improves channel orthogonality, so that only 10,000 antennas are required. Second, practically, although 10,000 is a lot less than 128,000, it is still a very large number! Here is a writeup with some more detail on the comparison.
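To give a feel for the two effects in the first punchline, here is a minimal Python sketch. It is not the calculation from the talk or the writeup. It assumes that received power scales as the number of antennas times the per-antenna effective aperture (proportional to wavelength squared), and that the array is a uniform linear array with half-wavelength spacing. The function names and the example angles are made up for illustration. Under these assumptions the link budget scaling lands in the same order of magnitude as the 128,000 figure, but it does not reproduce it exactly.

```python
import cmath
import math


def antennas_for_equal_link_budget(m_ref, f_ref_hz, f_new_hz):
    """Antennas needed at f_new_hz to match m_ref antennas at f_ref_hz.

    Assumption: received power scales as (number of antennas) x (per-antenna
    effective aperture), and the aperture scales as wavelength squared.
    """
    return m_ref * (f_new_hz / f_ref_hz) ** 2


def los_correlation(m, theta1_deg, theta2_deg):
    """Normalized |inner product| of two line-of-sight array responses.

    Assumption: uniform linear array with half-wavelength spacing. A value
    near 0 means the two terminals' channels are close to orthogonal, and
    1 means they are parallel.
    """
    delta = math.sin(math.radians(theta1_deg)) - math.sin(math.radians(theta2_deg))
    total = sum(cmath.exp(1j * math.pi * k * delta) for k in range(m))
    return abs(total) / m


if __name__ == "__main__":
    # 128 antennas at 2 GHz versus 60 GHz: the factor is (60/2)^2 = 900.
    print(antennas_for_equal_link_budget(128, 2e9, 60e9))
    # Two terminals 5 degrees apart: correlation for growing array sizes.
    for m in (8, 128, 1024):
        print(m, los_correlation(m, 10.0, 15.0))
```

For terminals at these two example angles, the correlation is much smaller with 128 antennas than with 8, which is the orthogonality improvement that works against the pessimistic link budget number.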

I also touched on the (for sub-5 GHz bands somewhat controversial) topic of hybrid beamforming, and whether it would reduce the required amount of hardware.

A question from the audience was whether the use of antennas with larger physical aperture (i.e., intrinsic directivity) would change the conclusions. The answer is no: the use of directional antennas is more or less equivalent to sectorization. The problem is that to exploit the intrinsic gain, the antennas must a priori point “in the right direction”. Hence, in the array, only a subset of the antennas will be useful when serving a particular terminal. This impacts both the channel gain (reduced effective aperture) and orthogonality (see, e.g., Figure 7.5 in this book).

There was also a stimulating panel discussion afterwards. One question discussed in the panel concerned the necessity, or desirability, of using multiple terminal antennas at mmWave. Looking only at the link budget, base station antennas could be traded against terminal antennas. However, that argument neglects the inevitable loss of orthogonality, and furthermore it is not obvious how beam-finding and beam-tracking algorithms will perform (millisecond coherence time at pedestrian speeds!). Also, obviously, the comparison I presented is extremely simplistic: to begin with, the line-of-sight scenario is extremely favorable for mmWaves (it ignores blocking problems), and I also entirely neglected polarization losses. Compensating for these problems alone is likely to require multiple terminal antennas.
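The "millisecond coherence time" remark can be checked with a back-of-the-envelope sketch. It assumes the common rule of thumb that the channel stays roughly constant while the terminal moves half a wavelength. Other definitions differ by a small constant factor, and the 1.5 m/s walking speed is my own assumption.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def coherence_time_s(carrier_hz, speed_m_per_s):
    """Rule-of-thumb coherence time: time to move half a wavelength.

    Assumption: T_c = wavelength / (2 * speed). This is an order-of-magnitude
    estimate, not an exact channel model.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return wavelength / (2.0 * speed_m_per_s)


if __name__ == "__main__":
    walking_speed = 1.5  # m/s, assumed pedestrian speed
    for name, f_hz in (("PCS, 2 GHz", 2e9), ("mmWave, 60 GHz", 60e9)):
        t_ms = 1e3 * coherence_time_s(f_hz, walking_speed)
        print(f"{name}: about {t_ms:.1f} ms")
```

With these assumptions the 60 GHz coherence time comes out between one and two milliseconds, a factor of 30 shorter than at 2 GHz, which is why beam-finding and tracking have so little time to work with.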

Another topic touched on in the panel was the viability of Massive MIMO implementations. Perhaps the most important comment made in this context was by Ian Wong of National Instruments: “In the past year, we’ve actually shown that [massive MIMO] works in reality … To me, the biggest development is that the skeptics are being quiet.” (Read more about that here.)