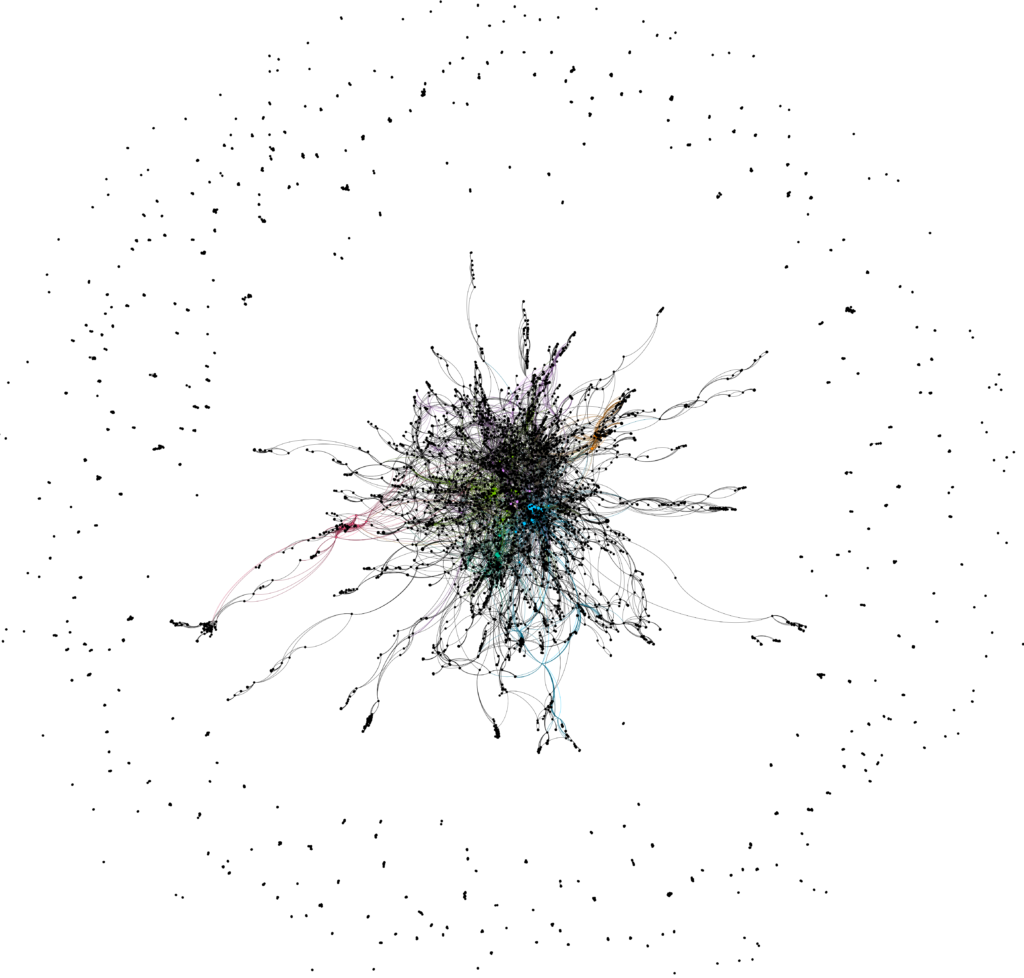

Scientific papers used to appear in journals once per month and in a few major conference proceedings per year. During the last decade, this has changed into a situation where new preprints appear on arXiv.org every day. The hope has been that this trend will lead to swifter scientific progress and a higher degree of openness towards the public. However, it is not certain that either of these goals is being reached. Reputable academic journals accept only about ⅓ of the submitted papers, often after substantial revisions; thus, one can suspect that at least ⅔ of the preprints appearing on arXiv are of low quality. How should the general public be able to tell the difference between a solid and a questionable paper when it is time-consuming even for researchers in the field to do so?

During the COVID-19 pandemic, the hope for scientific breakthroughs and guidance became so pressing that the media started to report results from preprints. For example, an early study from Imperial College London indicated that a lockdown is by far the most effective measure to control the pandemic. This seemed to give scientific support for the draconian lockdown measures taken in many countries around the world, but the study has later been criticized for obtaining its conclusion from the model assumptions rather than from the underlying data (see [1], [2], [3]). I cannot tell where the truth lies; the conclusion might eventually turn out to be valid even if the study was substandard. However, this is an example of why a faster scientific process does not necessarily lead to better scientific progress.

Publish or perish

After the big success of two survey papers that provided coherent 5G visions (What will 5G be? and Five disruptive technology directions for 5G), we have recently witnessed a rush to do the same for the next wireless generation. There are already 30+ papers competing to provide the “correct” 6G vision. Since 6G research has barely started, the level of speculation is substantially higher than in the two aforementioned 5G papers, which appeared after several years of exploratory 5G research. Hence, it is important that we treat these 6G papers not as actual roadmaps to the next wireless generation but merely as different researchers’ rough long-term visions.

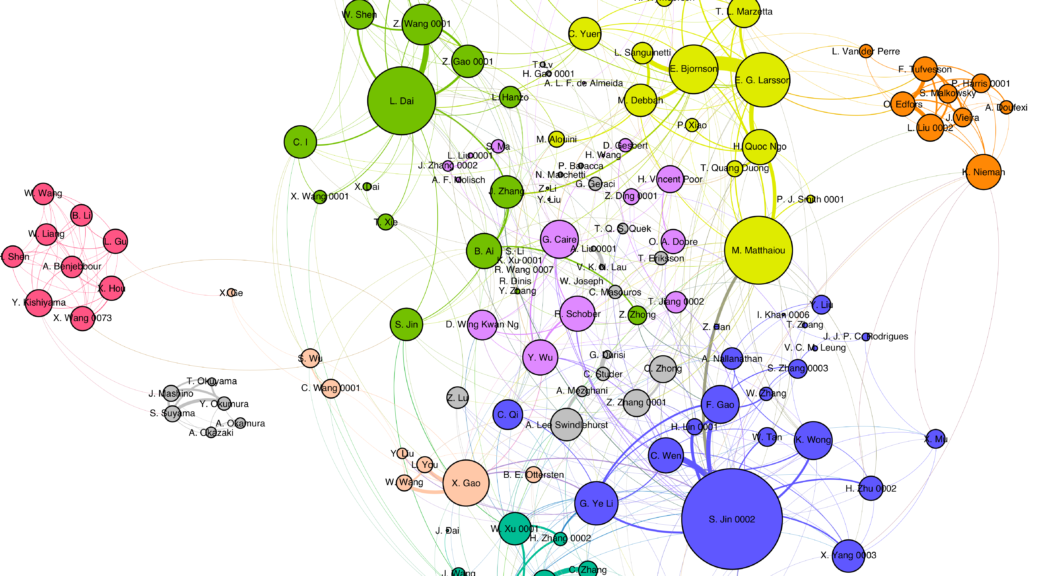

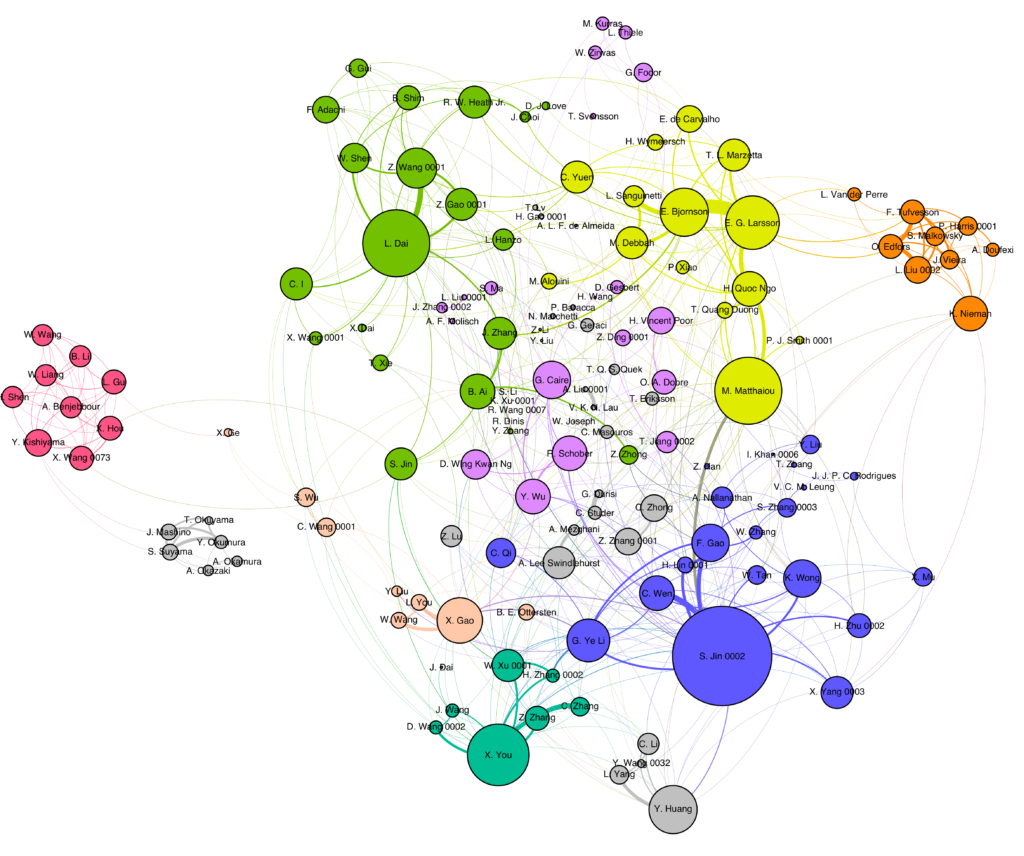

The abundance of 6G vision papers is a symptom of a wider trend: academic researchers are chasing citations and measurable impact at a level never seen before. The possibility of instantly uploading an unpublished manuscript to arXiv encourages researchers to be the first to formulate a new research problem and scratch the surface of its solution, instead of patiently solving it first and then publishing the results. The recent Best Readings in Reconfigurable Intelligent Surfaces page is an example of this issue: a large fraction of the listed papers are actually unpublished preprints! Since even published papers in this emerging research area contain basic misconceptions, I think we need to calm down and let papers go through review before treating them as recommended readings.

The more papers a researcher produces per year, the more the citation numbers can be inflated. This is a harmful consequence of the “publish-or-perish” pressure that universities and funding agencies are putting on academic researchers. As long as quantitative bibliometrics (such as the number of papers and citations) are used to determine who will be tenured and who will receive research grants, we will encourage people to act in a scientifically undesirable way: write surveys rather than new technical contributions, ask questions rather than answer them, and make many minor variations of previous works instead of a few major contributions. The 2020s will hopefully be the decade in which we find better metrics of scientific excellence that encourage a sounder scientific methodology.

Open science can be a solution

The open science movement is pushing for open access to publications, data, simulation code, and research methodology. New steps in this direction are taken every year, with the pressure coming from funding agencies that gradually require a higher degree of openness. The current situation is very different from that of ten years ago, when I started my journey towards publishing simulation code and data along with my papers. Unfortunately, open science is currently being implemented in a way that risks degrading the scientific quality. For example, it is great that IEEE is creating new open journals and encouraging the publication of data and code, but it is bad that the rigor of the peer-review process is challenged by the shorter deadlines in these new journals. One cannot expect researchers, young or experienced, to carefully review a full-length paper in only one or two weeks, as these new journals require. I am not saying that all papers published in those journals are bad (I have published several myself), but the quality variations might be almost as large as for unpublished preprints.

During the 2020s, we need to find ways to promote research that is deep and insightful rather than quick and dirty. We need to encourage researchers to take on the important but complicated scientific problems in communication engineering instead of analytically “tractable” problems of little practical relevance. The traditional review process is far from perfect, but it has served us well in upholding the quality of analytical results in published papers. The quality of numerical and experimental results is harder for a referee to validate. Making code and data openly available is not the solution to this problem but a necessary tool in any such solution. We need to encourage researchers to scrutinize and improve each other’s code, and to base new research on existing data.

The machine-learning community is leading the way towards a more rigorous treatment of numerical experiments by establishing large common datasets that are used to quantitatively evaluate new algorithms against previous ones. A trend towards similar practices can already be seen in communication engineering, where qualitative comparisons with unknown generalizability have been the norm in the past. Another positive trend is that peer reviewers have become cautious when reference lists contain a large number of preprints, because it is by repeating claims from unpublished papers that myths are occasionally created and spread without control.

I have four New Year’s wishes for 2021:

- The research community focuses on basic research, such as developing and certifying fundamental models, assumptions, and algorithms related to the different pieces in the existing 6G visions.

- It becomes the norm that code and data are published alongside articles in all reputable scientific journals, or at least submitted for review.

- Preprints are not treated as publications and are only cited in the exceptional case when the papers are closely related.

- We get past the COVID-19 pandemic and get to meet at physical conferences again.