The user terminals in reciprocity-based Massive MIMO transmit two types of uplink signals: data and pilots (a.k.a. reference signals). A terminal can potentially transmit these signals using different power levels. In the book Fundamentals of Massive MIMO, the pilots are always sent with maximum power, while the data is sent with a user-specific power level that is optimized to deliver a certain per-user performance. In the book Massive MIMO networks, the uplink power levels are also optimized, but under another assumption: each user must assign the same power to pilots and data.

Moreover, there is a series of research papers (e.g., Ref1, Ref2, Ref3) that treat the pilot and data powers as two separate optimization variables that can be optimized with respect to some performance metric, under a constraint on the total energy budget per transmission/coherence block. This gives the flexibility to “move” power from data to pilots for users at the cell edge, to improve the channel state information that the base station acquires and thereby the array gain that it obtains when decoding the data signals received over the antennas.
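The trade-off can be illustrated with a minimal sketch. It assumes a simplified single-cell, single-user model, which is an assumption made here and not taken from the cited papers: i.i.d. Rayleigh fading, MMSE channel estimation, MR combining, and the commonly used bound SINR = M·γ·ρ_d / (1 + β·ρ_d), where γ = τ_p·ρ_p·β² / (1 + τ_p·ρ_p·β) is the mean-square of the channel estimate. The number of data symbols is fixed, so maximizing the SINR also maximizes the rate. The function names, the block of τ_p = 1 pilot and τ_d = 99 data symbols, the M = 100 antennas, and the two β values are hypothetical illustration choices.

```python
import math


def mr_sinr(pilot_energy, energy, tau_d, beta, M):
    """Uplink SINR of a single user with MR combining (assumed model, see lead-in).

    pilot_energy: tau_p * rho_p, the pilot energy (normalized to the noise power)
    energy:       total energy budget per coherence block
    tau_d:        number of data symbols per coherence block
    beta:         large-scale fading coefficient (linear scale)
    M:            number of base station antennas
    """
    rho_d = (energy - pilot_energy) / tau_d  # data power per symbol
    # Mean-square of the MMSE channel estimate: grows with the pilot energy
    gamma = beta**2 * pilot_energy / (1 + beta * pilot_energy)
    return M * gamma * rho_d / (1 + beta * rho_d)


def best_pilot_boost_db(beta, tau_p=1, tau_d=99, M=100, rho=1.0, steps=1000):
    """Grid-search the share of the energy budget spent on pilots.

    Returns (pilot-to-data power ratio in dB, resulting SINR) at the best grid point.
    The budget equals sending every symbol in the block at power rho.
    """
    energy = rho * (tau_p + tau_d)
    best_sinr, best_frac = -1.0, 0.0
    for i in range(1, steps):
        frac = i / steps
        sinr = mr_sinr(frac * energy, energy, tau_d, beta, M)
        if sinr > best_sinr:
            best_sinr, best_frac = sinr, frac
    rho_p = best_frac * energy / tau_p
    rho_d = (1 - best_frac) * energy / tau_d
    return 10 * math.log10(rho_p / rho_d), best_sinr


for label, beta in [("cell edge", 0.01), ("cell center", 100.0)]:
    boost_db, sinr = best_pilot_boost_db(beta)
    equal_sinr = mr_sinr(1.0, 100.0, 99, beta, 100)  # rho_p = rho_d = 1
    print(f"{label}: pilot boost {boost_db:.1f} dB, SINR {sinr:.3g} (equal power: {equal_sinr:.3g})")
```

With these illustrative numbers, both users end up with their pilot power well above their data power. The cell-edge user gets the larger boost (around 18 dB versus around 10 dB), which matches the idea of moving power to the pilots at the cell edge. A per-symbol peak-power limit, which a real terminal also has, is deliberately left out of this sketch.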

In some cases, it is theoretically preferable to assign, for example, 20 dB higher power to pilots than to data. But does that make practical sense, bearing in mind that non-linear amplifiers are used and the peak-to-average-power ratio (PAPR) is then a concern? The answer depends on how the pilots and data are allocated over the time-frequency grid. In OFDM systems, which have an inherently high PAPR, it is discouraged to have large power differences between OFDM symbols (i.e., consecutive symbols in the time domain) since this will further increase the PAPR. However, it is perfectly fine to assign the power in an unequal manner over the subcarriers.

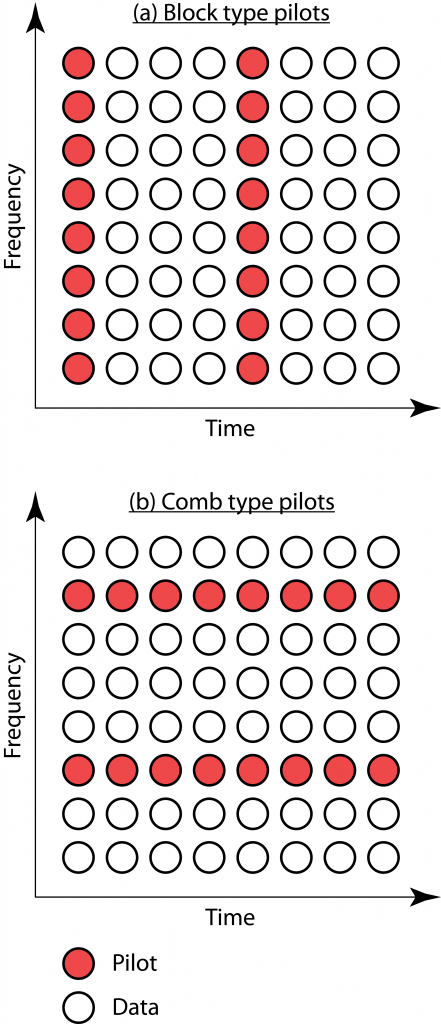

In the OFDM literature, there are two elementary ways to allocate pilots: block and comb type arrangements. These are illustrated in the figure below and some early references on the topic are Ref4, Ref5, Ref6.

(a): In the block type arrangement, at a given OFDM symbol time, all subcarriers contain either pilots or data. It is then preferable for a user terminal to use the same transmit power for pilots and data, to avoid a prohibitively high PAPR. This is consistent with the assumptions made in the book Massive MIMO networks.

(b): In the comb type arrangement, some subcarriers always contain pilots and other subcarriers always contain data. It is then possible for a user terminal to assign different powers to pilots and data. The power can be moved from pilot subcarriers to data subcarriers, or vice versa, without a major impact on the PAPR. This approach enables the type of unequal pilot and data power allocations considered in the book Fundamentals of Massive MIMO and in the research papers that optimize the pilot and data powers under a total energy budget per coherence block.
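The difference between the two arrangements can be made concrete with a small sketch. It does not compute the PAPR itself (there is no IFFT here). Instead, it measures how much the total power varies between OFDM symbols when pilots are sent 20 dB above data, which is the effect the text identifies as harmful. The 12-subcarrier by 7-symbol grid, the pilot spacing of 4 subcarriers, and the helper names are hypothetical.

```python
import math


def symbol_powers(grid):
    """Total transmit power in each OFDM symbol (column) of a subcarrier-by-symbol power grid."""
    return [sum(row[t] for row in grid) for t in range(len(grid[0]))]


def power_swing_db(grid):
    """Ratio between the strongest OFDM symbol and the average OFDM symbol, in dB."""
    p = symbol_powers(grid)
    return 10 * math.log10(max(p) / (sum(p) / len(p)))


N_SC, N_SYM = 12, 7           # hypothetical grid: 12 subcarriers, 7 OFDM symbols
P_PILOT, P_DATA = 100.0, 1.0  # pilots 20 dB above data, per resource element

# (a) block type: OFDM symbol 0 carries only pilots, the other symbols only data
block = [[P_PILOT if t == 0 else P_DATA for t in range(N_SYM)] for _ in range(N_SC)]

# (b) comb type: every 4th subcarrier carries pilots in every OFDM symbol
comb = [[P_PILOT if k % 4 == 0 else P_DATA for _ in range(N_SYM)] for k in range(N_SC)]

print(f"block: {power_swing_db(block):.1f} dB, comb: {power_swing_db(comb):.1f} dB")
# → block: 8.2 dB, comb: 0.0 dB
```

In the block arrangement, the pilot symbol carries far more power than the data symbols, so the power varies strongly from symbol to symbol. In the comb arrangement, every OFDM symbol contains the same mix of pilot and data subcarriers, so the pilot boost leaves the per-symbol power unchanged.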

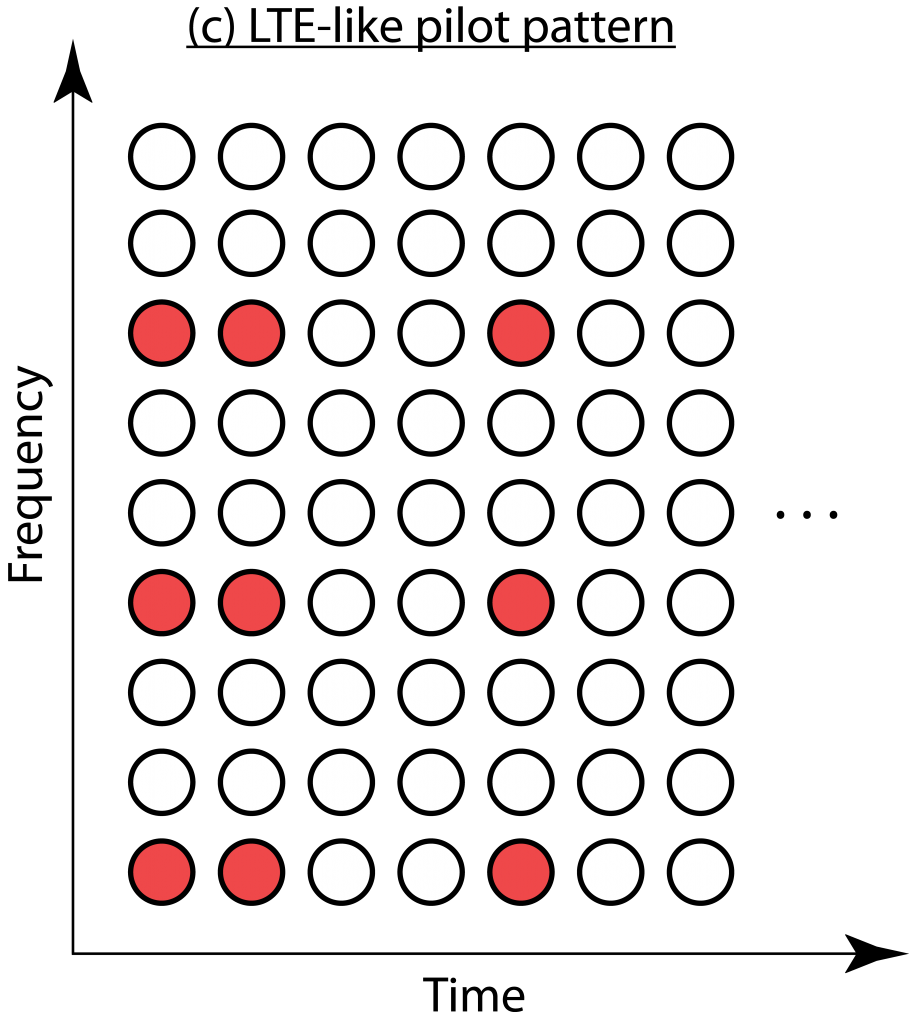

The downlink in LTE uses a variation of the two elementary pilot arrangements, as illustrated in (c). It is most easily described as a comb type arrangement where some pilots are omitted and replaced with data. The number of omitted pilots depends on how many antenna ports are used; the more antenna ports, the more similar the pilot arrangement becomes to the comb type. Hence, unequal pilot and data power allocation is possible in LTE, but it may not be as easy to implement as described above. 5G has a more flexible frame structure but supports the same arrangements as LTE.

In summary, uplink pilots and data can be transmitted at different power levels, and this flexibility can be utilized to improve the performance in Massive MIMO. It does, however, require that the pilots are arranged in practically suitable ways, such as the comb type arrangement.

Pilot contamination used to be seen as the key issue with the Massive MIMO technology, but thanks to a large number of scientific papers we now know fairly well how to deal with it. I outlined the main approaches to mitigate pilot contamination in a

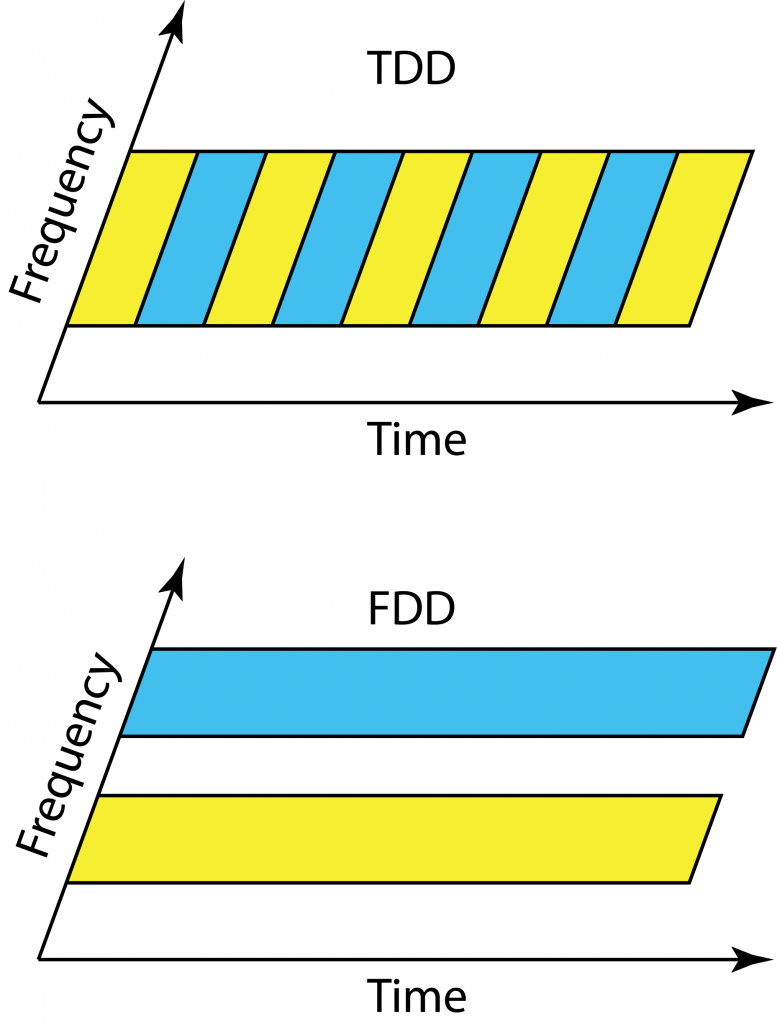

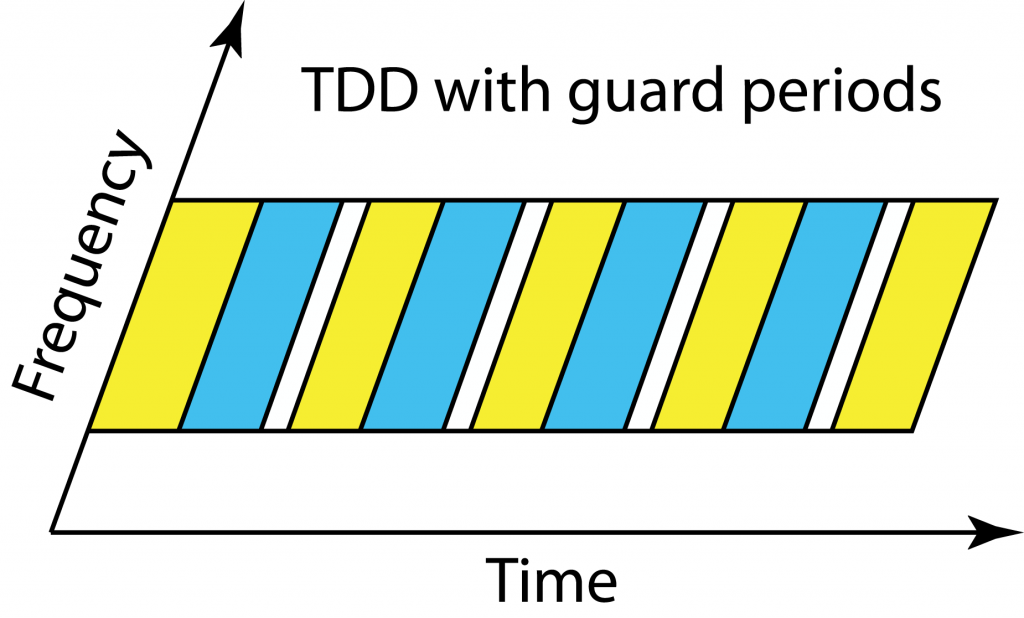

LTE was designed to work equally well in

Everyone in the cell should operate in uplink and downlink mode at the same time in TDD. Since the users are at different distances from the base station and have different delay spreads, they receive the end of the downlink transmission block at different times. If a cell-center user starts to transmit in the uplink immediately after receiving the full downlink block, then users at the cell edge will receive a combination of the delayed downlink transmission and the cell-center users' uplink transmissions. To avoid such uplink-downlink interference, TDD includes a guard period, so that all users wait with their uplink transmissions until the outermost users have received the entire downlink block.
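The reasoning above can be turned into a rough calculation. This sketch uses only the model in the paragraph: a user at distance d with delay spread τ finishes receiving the downlink block d/c + τ after the base station stops transmitting, and the guard period must cover the latest such user. It ignores the travel time of the uplink signal back to the base station, transceiver switching times, and timing advance, which a real TDD frame design must also account for. The user distances, delay spreads, and function names are hypothetical, and c is rounded to 3·10^8 m/s.

```python
C_M_PER_US = 300.0  # speed of light, rounded to 3e8 m/s and expressed in metres per microsecond


def downlink_end_us(distance_m, delay_spread_us):
    """Time (in µs after the base station stops transmitting) when a user has received the whole downlink block."""
    return distance_m / C_M_PER_US + delay_spread_us


def min_guard_period_us(users):
    """Shortest wait that lets every user finish receiving the downlink before anyone transmits in the uplink."""
    return max(downlink_end_us(d, tau) for d, tau in users)


# Hypothetical users: (distance to base station in metres, delay spread in µs)
users = [(100.0, 1.0), (1000.0, 2.0), (3000.0, 5.0)]
print(f"guard period >= {min_guard_period_us(users):.1f} us")  # → guard period >= 15.0 us
```

The outermost user dominates: its 10 µs propagation delay plus its 5 µs delay spread sets the guard period, while the cell-center users are done much earlier.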