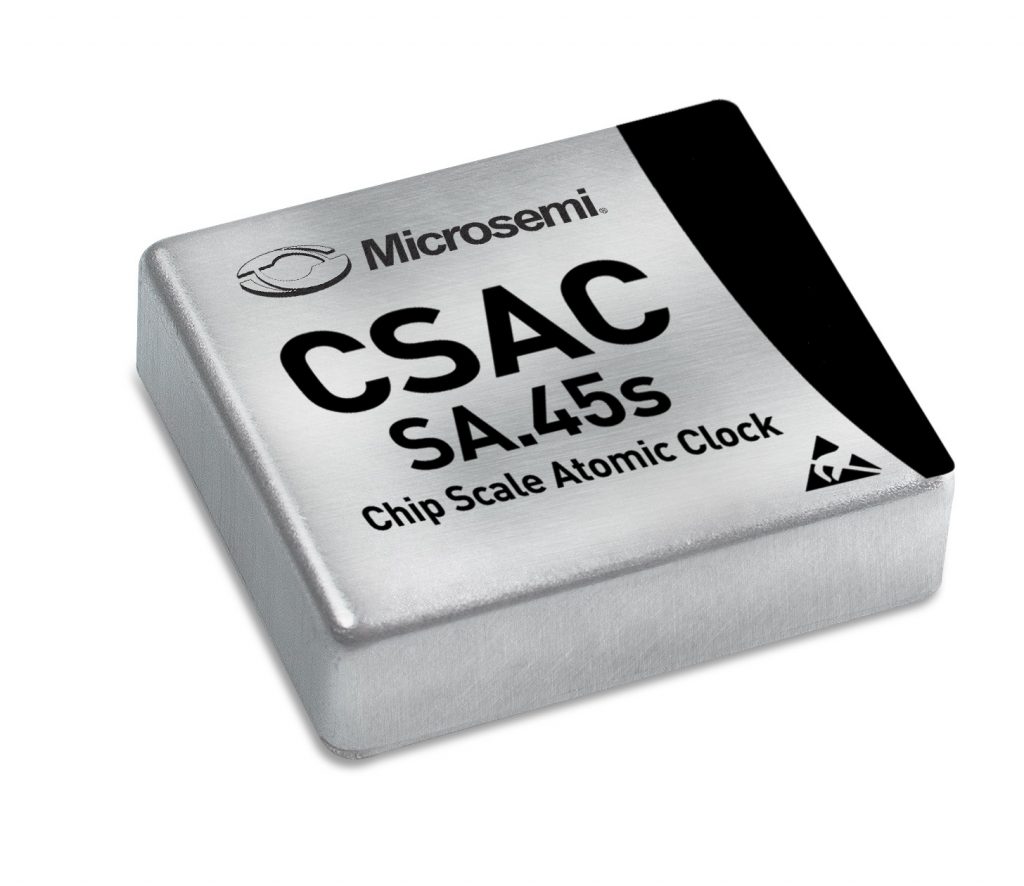

This chip-scale atomic clock (CSAC), developed by Microsemi, packs atomic-clock timing accuracy (see the specs available in the link) into a volume comparable to a matchbox, at 120 mW power consumption. That is way too much for a handheld gadget, but undoubtedly negligible for any fixed installation powered from the grid. It offers an alternative to synchronization through GNSS that works anywhere, including indoors and in GNSS-denied environments.

I haven’t seen a list price, and I don’t know how much exotic metal its manufacture requires or what the licensing costs are, but let’s ponder the possibility that a CSAC could be manufactured for the mass market for a few dollars each. What new applications in wireless would then become viable?

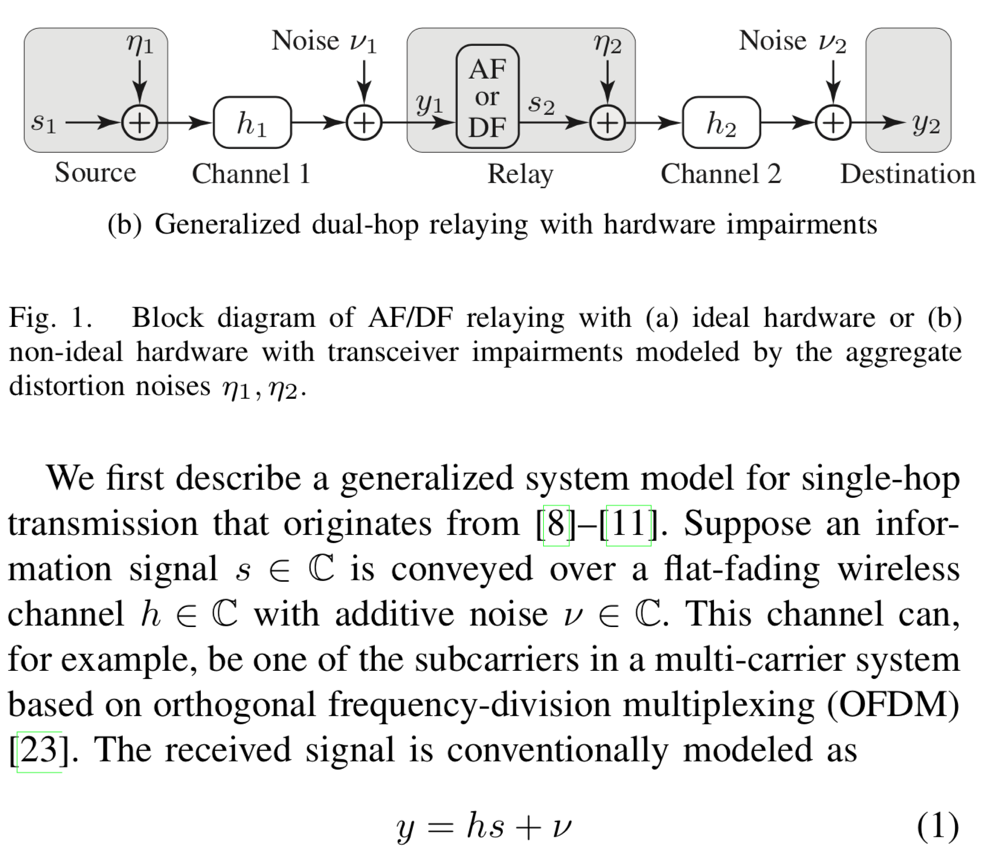

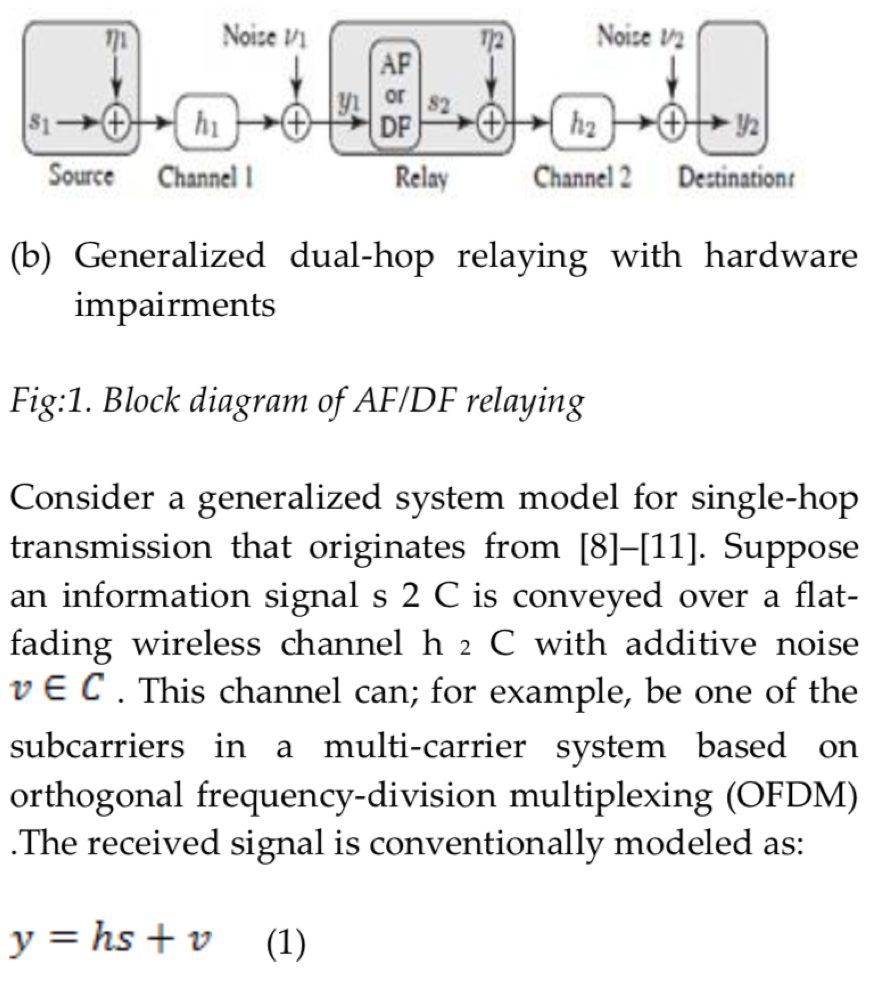

The answer is mostly (or entirely) speculation. One potential application that might become more practical is positioning using distributed arrays. Another is distributed multipair relaying. Here and here are some specific ideas that are communication-theoretically beautiful, and probably powerful, but that seem to be perceived as unrealistic because of synchronization requirements. Perhaps CoMP and distributed MIMO, a.k.a. “cell-free Massive MIMO”, applications could also benefit.

Other applications might arise in IoT, for example, where a device transmits only sporadically yet wants to stay synchronized (perhaps there is no downlink, hence no way of reliably obtaining synchronization information). If a timing offset (or frequency offset for that matter) is unknown but constant over a very long time, it may be treated as a deterministic unknown and estimated. The difficulty with unknown time and frequency offsets is not their existence per se, but the fact that they change quickly over time.
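To make the "deterministic unknown" idea concrete, here is a minimal sketch, assuming the receiver can measure the device's clock error at a handful of sporadic transmission times and that this error follows a simple linear model: a constant time offset plus a constant fractional frequency offset, plus measurement noise. The function name `estimate_clock_offsets` and all numerical values (offsets, noise level, number of transmissions) are hypothetical and chosen only for illustration; they are not CSAC specifications.

```python
import numpy as np


def estimate_clock_offsets(t, err):
    """Least-squares fit of the model err ≈ theta + eps * t.

    t   : measurement times in seconds
    err : measured clock errors in seconds
    Returns (theta, eps): constant time offset [s] and constant
    fractional frequency offset [dimensionless].
    """
    t = np.asarray(t, dtype=float)
    err = np.asarray(err, dtype=float)
    # Design matrix: one column for the offset, one for the linear drift.
    A = np.column_stack([np.ones_like(t), t])
    (theta, eps), *_ = np.linalg.lstsq(A, err, rcond=None)
    return float(theta), float(eps)


# Hypothetical scenario: 200 sporadic transmissions spread over one day.
rng = np.random.default_rng(0)
true_theta = 2.5e-6   # assumed constant time offset: 2.5 microseconds
true_eps = 1e-10      # assumed constant fractional frequency offset
t = np.sort(rng.uniform(0.0, 86400.0, size=200))
noise = rng.normal(0.0, 50e-9, size=t.size)  # assumed 50 ns measurement noise
err = true_theta + true_eps * t + noise

theta_hat, eps_hat = estimate_clock_offsets(t, err)
print(f"time offset estimate:      {theta_hat:.3e} s")
print(f"frequency offset estimate: {eps_hat:.3e}")
```

The fit only works because both parameters stay fixed over the whole observation window, so every sporadic transmission contributes to the same two unknowns. If the offsets wandered quickly, as with an ordinary crystal oscillator, old measurements would say little about the current state and the averaging gain would be lost.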

It’s often said (and true) that the “low” speed of light is the main limiting factor in wireless. (The reason is that acquiring channel state information is the main limiting factor in wireless communications: if light were faster, channel coherence would be longer, and acquiring channel state information would be easier.) But maybe the unavailability of a ubiquitous, reliable time reference is another, almost as important, limiting factor. Can CSAC technology change that? I don’t know, but perhaps we ought to take a closer look.
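As a footnote to the speed-of-light remark, a common rule of thumb (an approximation, with the symbols $v$ for terminal speed, $f_c$ for carrier frequency, $c$ for the speed of light and $\lambda = c/f_c$ for the wavelength all introduced here for illustration) ties the coherence time $T_c$ to the maximum Doppler shift $f_D$:

$$
f_D = \frac{v f_c}{c}, \qquad T_c \approx \frac{1}{2 f_D} = \frac{c}{2 v f_c} = \frac{\lambda}{2v}.
$$

For fixed $v$ and $f_c$, the coherence time grows in proportion to $c$. As an illustrative example, $v = 30$ m/s and $f_c = 2$ GHz give $\lambda = 0.15$ m and $T_c \approx 2.5$ ms.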