Check out this video, produced by the IEEE Signal Processing Society’s Signal Processing for Communications and Networking (SPCOM) technical committee. The video explains to the layman what 5G is for, and how massive MIMO comes in…


A case against Massive MIMO?

I had an interesting conversation with a respected colleague who expressed some significant reservations about massive MIMO. Let’s dissect the arguments.

The first argument against massive MIMO was that most traffic is indoors, that deploying large arrays indoors is impractical, and that outdoor-to-indoor coverage through massive MIMO is undesirable (or technically infeasible). I think the obvious counterargument is that the main selling point of massive MIMO is not indoor service provision but outdoor macrocell coverage: the ability of TDD/reciprocity-based beamforming to handle high mobility and to efficiently suppress interference, thus providing cell-edge coverage. (The notion of a “cell-edge” user should be broadly interpreted: anyone having a poor nominal signal-to-interference-and-noise ratio before the MIMO processing kicks in.) But nothing prevents massive MIMO from being installed indoors if capacity requirements are so high that conventional small-cell or WiFi technology cannot handle the load. Antennas could be integrated into walls, ceilings, window panes, furniture, or even pieces of art. New buildings are often constructed from prefabricated building blocks, and antennas could be integrated into these already during production. Nothing prevents the integration of thousands of antennas into everyday objects in a large room.

Outdoor-to-indoor coverage doesn’t work? Importantly, current systems already provide outdoor-to-indoor coverage, and there is no reason Massive MIMO would not do the same (on the contrary, adding base station antennas is always beneficial for performance!). That said, the ideal deployment scenario for massive MIMO is probably not outdoor-to-indoor, so this point seems partly valid. The arguments against outdoor-to-indoor coverage are that modern energy-saving windows have a coating that takes at least 20 dB out of the link budget. In addition, small angular spreads, when all signals have to pass through windows (except perhaps in wooden buildings), may reduce the rank of the channel so much that little multiplexing to indoor users is possible. This is mostly speculation, and I am not sure whether experiments are available to confirm or refute it.
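To put the 20 dB figure in perspective, here is a back-of-the-envelope sketch. It assumes, as an idealization, that coherent beamforming with M antennas yields an array gain of M (that is, 10 log10 M dB) and ignores the rank reduction mentioned above; the helper names are hypothetical.

```python
import math

def array_gain_db(num_antennas: int) -> float:
    """Idealized coherent beamforming gain in dB: 10*log10(M),
    assuming perfect channel knowledge and equal per-antenna gain."""
    return 10 * math.log10(num_antennas)

def antennas_to_offset(loss_db: float) -> int:
    """Smallest number of antennas whose idealized array gain covers loss_db."""
    return math.ceil(10 ** (loss_db / 10))

window_loss_db = 20.0  # the "at least 20 dB" coating loss from the text
m = antennas_to_offset(window_loss_db)
print(m, round(array_gain_db(m), 1))
```

Under these assumptions, roughly 100 antennas are needed merely to win back the window loss, before any multiplexing gain is harvested, which is consistent with outdoor-to-indoor not being the ideal scenario.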

Let’s move on to the second argument. Here the theme is that as systems use larger and larger bandwidths but cannot increase the radiated power, the maximum transmission distance shrinks (because the SNR is inversely proportional to the bandwidth). Hence, the cells have to get smaller and smaller, and eventually there will be so few users per cell that the aggressive spatial multiplexing on which massive MIMO relies becomes useless, as there is only a single user to multiplex. This argument may be partly valid, at least given the traffic situation in current networks. But we do not know what future requirements will be. New applications may change the demand for traffic entirely: augmented or virtual reality, large-scale communication with drones and robots, or other use cases that we cannot even envision today.
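A minimal sketch of the range argument, assuming a power-law path loss d^(-α) and a noise power proportional to the bandwidth B, so that SNR ∝ d^(-α)/B at a fixed radiated power. For a fixed target SNR, the maximum distance then scales as B^(-1/α). The path-loss exponents below are only example values.

```python
def range_scaling(bandwidth_ratio: float, pathloss_exponent: float) -> float:
    """Factor by which the maximum distance changes when the bandwidth grows
    by bandwidth_ratio at fixed radiated power and fixed required SNR.

    Assumes SNR = P * G0 * d**(-alpha) / (N0 * B): noise power grows linearly
    with B, and path loss follows d**(-alpha).
    """
    return bandwidth_ratio ** (-1.0 / pathloss_exponent)

for alpha in (2.0, 3.5):
    shrink = range_scaling(10.0, alpha)  # 10x more bandwidth, same power
    print(f"alpha={alpha}: range x{shrink:.3f}, area x{shrink ** 2:.3f}")
```

With α = 2, ten times more bandwidth shrinks the range to about 32% and the covered area to 10%, which is the mechanism behind the "ever smaller cells" argument.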

It is also not obvious that the larger bandwidths are really required with massive MIMO. Spatial multiplexing to 20 terminals improves the bandwidth efficiency 20 times compared to conventional technology, so instead of using 20 times more bandwidth, one may use 20 times more antennas. Significant multiplexing gains have not only been proven theoretically but have also been demonstrated in commercial field trials. It is sometimes argued that traffic is bursty, so that these multiplexing gains cannot materialize in practice, but this is partly a misconception and partly a consequence of defective higher-layer designs (most importantly TCP/IP) and vested interests in these flawed designs. For example, for the services that constitute most of the raw bits, especially video streaming, there is no good reason to use TCP/IP at all. Hopefully, once the enormous potential of massive MIMO physical layer technology becomes more widely known and understood, market forces will push a re-design of higher-layer and application protocols so that they can maximally benefit from the massive MIMO physical layer. Does this entail a complete re-design of the Internet? No, probably not, but buffers have to be installed and parts of the link layer should be revamped to make the most of the “wires in the air” that massive MIMO offers, which are ideally suited for aggressive multiplexing of circuit-switched data.
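The trade between bandwidth and antennas can be illustrated with the Shannon formula. This is a deliberately idealized sketch: the baseline bandwidth and SNR are hypothetical, the multiplexed streams are assumed interference-free, and the array gain is assumed to keep each stream at the baseline SNR. Extra bandwidth at fixed radiated power dilutes the SNR, whereas extra multiplexed streams do not.

```python
import math

B = 20e6     # hypothetical baseline bandwidth (Hz)
SNR = 10.0   # hypothetical baseline SNR (linear, i.e. 10 dB)

def rate(bandwidth_hz: float, snr: float) -> float:
    """Shannon rate in bit/s of one interference-free stream."""
    return bandwidth_hz * math.log2(1 + snr)

baseline = rate(B, SNR)
# 20x bandwidth at the same radiated power: noise grows 20x, so SNR drops 20x
more_bandwidth = rate(20 * B, SNR / 20)
# 20 spatially multiplexed terminals on the original bandwidth (idealized:
# no inter-user interference, array gain keeps each stream at SNR)
multiplexing = 20 * rate(B, SNR)
print(f"20x bandwidth:   {more_bandwidth / baseline:.1f}x")
print(f"20x multiplexing: {multiplexing / baseline:.1f}x")
```

In this toy setting, 20 times more bandwidth gives only about 3.4 times the rate, since the SNR per hertz drops, while 20 multiplexed streams give the full factor of 20.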

When Will Hybrid Beamforming Disappear?

There has been a lot of fuss about hybrid analog-digital beamforming in the development of 5G. Strangely, this is not because of the technology’s merits but rather due to a general disbelief in the telecom industry’s ability to build fully digital transceivers in frequency bands above 6 GHz. I find this rather odd; we are living in a society that is becoming increasingly digitalized, with everything changing from analog to digital. Why would wireless technology suddenly move in the opposite direction?

When Marzetta published his seminal Massive MIMO paper in 2010, the idea of having an array with a hundred or more fully digital antennas was considered science fiction, or at least prohibitively costly and power consuming. Today, we know that Massive MIMO is actually a pre-5G technology, with 64-antenna systems already deployed in LTE systems operating below 6 GHz. These antenna panels are very commercially competitive; 95% of the base stations that Huawei is currently selling have at least 32 antennas. The fast technological development demonstrates that the initial skepticism against Massive MIMO was based on misconceptions rather than fundamental facts.

In the same way, there is nothing fundamental that prevents the development of fully digital transceivers in mmWave bands; it is only a matter of time before such transceivers are developed and dominate the market. With digital beamforming, we can get rid of the complicated beam-searching and beam-tracking algorithms that have been developed over the past five years and achieve a simpler and more reliable system operation, particularly when using TDD operation and reciprocity-based beamforming.
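To illustrate why reciprocity-based digital beamforming needs no beam search, here is a minimal sketch under stated assumptions: i.i.d. Rayleigh fading, one uplink pilot per coherence block, perfectly calibrated TDD reciprocity, and maximum-ratio (conjugate) precoding. The function names and parameter values are hypothetical. The base station simply estimates the channel from the uplink pilot and reuses it for the downlink; no codebook is swept.

```python
import math
import random

def rayleigh_channel(num_antennas, rng):
    """i.i.d. Rayleigh fading vector with unit average gain per antenna."""
    s = math.sqrt(0.5)
    return [complex(rng.gauss(0, s), rng.gauss(0, s)) for _ in range(num_antennas)]

def uplink_estimate(h, pilot_snr, rng):
    """Hypothetical estimate from one uplink pilot: h plus noise of
    variance 1/pilot_snr per antenna."""
    s = math.sqrt(0.5 / pilot_snr)
    return [hk + complex(rng.gauss(0, s), rng.gauss(0, s)) for hk in h]

def mr_precoder(h_hat):
    """Maximum-ratio (conjugate) precoder normalized to unit total power."""
    norm = math.sqrt(sum(abs(x) ** 2 for x in h_hat))
    return [x.conjugate() / norm for x in h_hat]

def downlink_gain(h, w):
    """Received power |h^T w|^2; by reciprocity the downlink channel is h."""
    return abs(sum(hk * wk for hk, wk in zip(h, w))) ** 2

rng = random.Random(1)
num_antennas, pilot_snr, trials = 64, 10.0, 500
total = 0.0
for _ in range(trials):
    h = rayleigh_channel(num_antennas, rng)        # same channel in UL and DL
    w = mr_precoder(uplink_estimate(h, pilot_snr, rng))
    total += downlink_gain(h, w)
avg_gain = total / trials
print(f"average beamforming gain: {avg_gain:.1f} (M = {num_antennas})")
```

The average gain lands somewhat below M because of the pilot noise, without any beam-searching or beam-tracking step.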

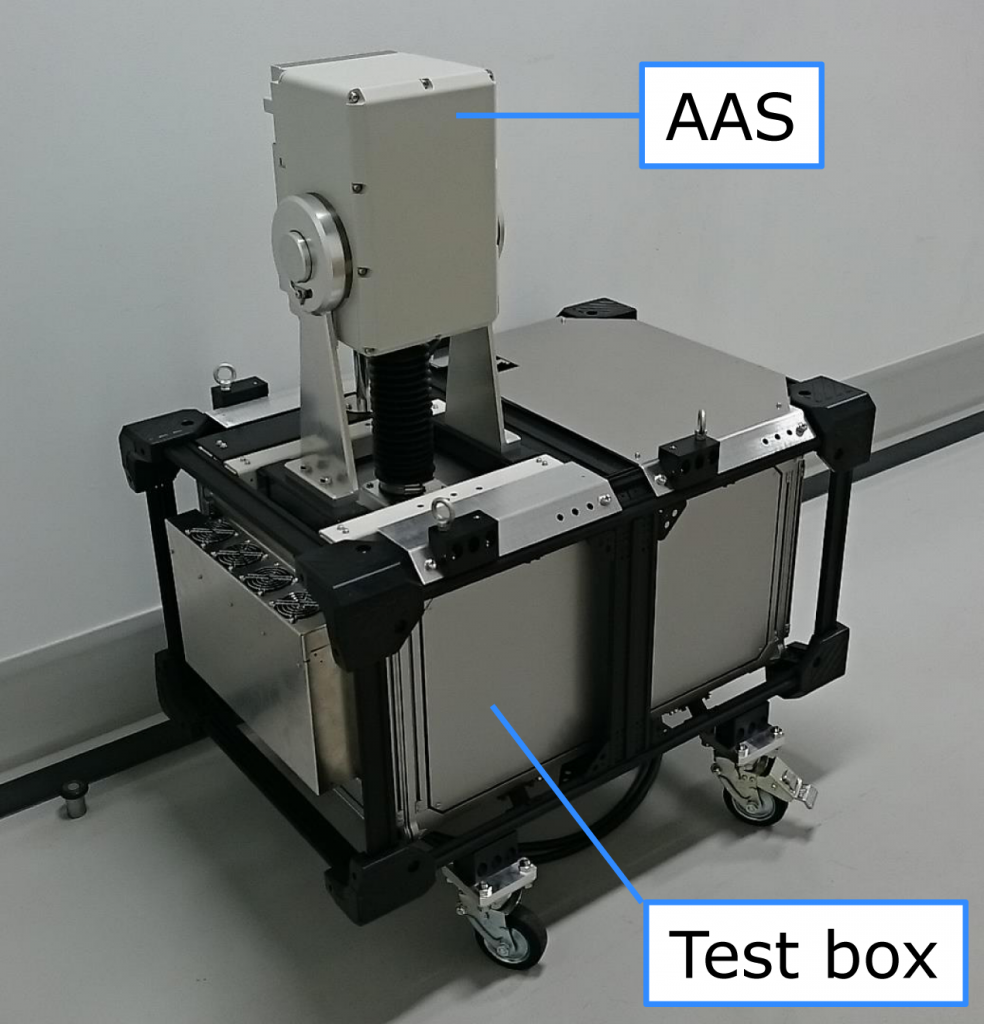

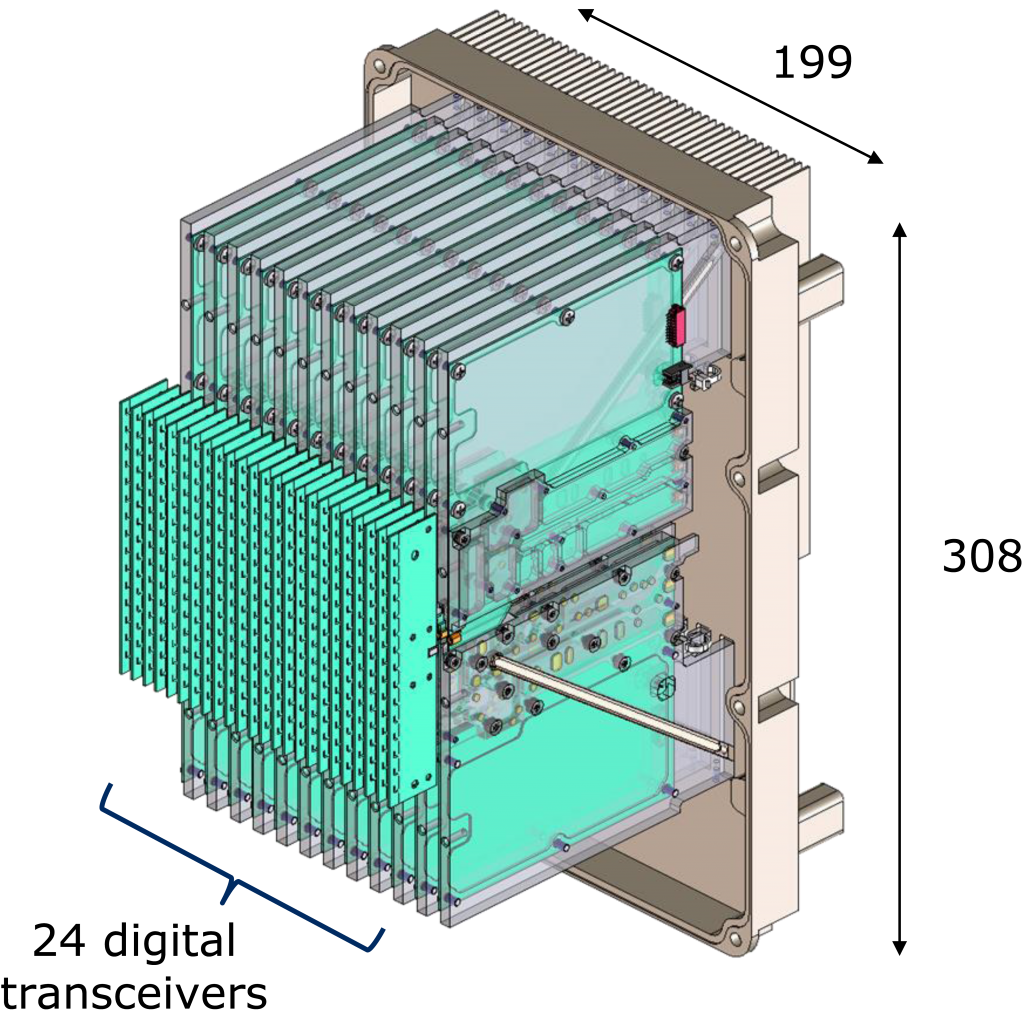

I didn’t jump onto the hybrid beamforming research train since it already had many passengers and I thought that this research topic would become irrelevant within 5-10 years. But I was wrong: it now seems that digital solutions will be released much earlier than I thought. At the 2018 European Microwave Conference, NEC Corporation presented an experimental verification of an active antenna system (AAS) for the 28 GHz band with 24 fully digital transceiver chains. The design is modular and consists of 24 horizontally stacked antennas, which means that the same design could be used for even larger arrays.

Tomoya Kaneko, Chief Advanced Technologist for RF Technologies Development at NEC, told me that they aim to release a fully digital AAS in just a few years. So maybe hybrid analog-digital beamforming will be replaced by digital beamforming already at the beginning of the 5G mmWave deployments?

That said, I think that hybrid beamforming algorithms will have new roles to play in the future. The first generations of new communication systems might reach the market faster by using a hybrid analog-digital architecture, which requires hybrid beamforming, than by waiting for the fully digital implementation to be finalized. This could, for example, be the case for holographic beamforming or MIMO systems operating in the sub-THz bands. There will also remain non-mobile point-to-point communication systems with line-of-sight channels (e.g., satellite communications) where analog solutions are sufficient to achieve all the performance gains that MIMO can provide.

The Role of Massive MIMO in 5G Deployments

The support for mmWave spectrum is a key feature of 5G, but mmWave communication is also known to be inherently unreliable due to the blockage and penetration losses, as can be demonstrated in this simple way:

This is why the sub-6 GHz bands will continue to be the backbone of the future 5G networks, just as in previous cellular generations, while mmWave bands will define the best-case performance. A clear example of this is the 5G deployment strategy of the US operator Sprint, which I heard about in a keynote by John Saw, CTO at Sprint, at the Brooklyn 5G Summit. (Here is a video of the keynote.)

Sprint will use spectrum in the 600 MHz band to achieve wide-spread 5G coverage. This low frequency will enable spatial multiplexing of many users if Massive MIMO is used, but the data rates per user will be rather limited since only a few tens of MHz of bandwidth is available. Nevertheless, this band will define the guaranteed service level of the 5G network.

In addition, Sprint has 120 MHz of TDD spectrum in the 2.5 GHz band and is deploying 64-antenna Massive MIMO base stations in many major cities; there will be more than 1000 sites in 2019. These base stations can simultaneously spatially multiplex many users and improve the per-user data rates thanks to the beamforming gains. John Saw pointed out that the word “massive” in Massive MIMO sounds scary, but the actual arrays are neat and compact in the 2.5 GHz band. He also explained that this frequency band supports high mobility, which is very challenging at mmWave frequencies. The mobility support is demonstrated in the following video:

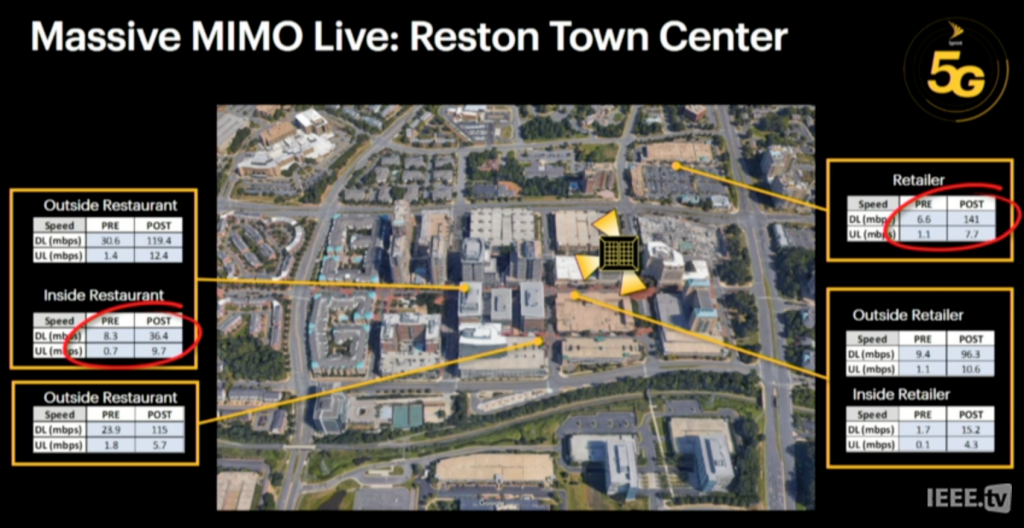
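Why the 2.5 GHz arrays can be compact is a matter of wavelength. The following back-of-the-envelope sketch assumes a square 8 × 8 array of single-polarized elements with half-wavelength spacing; real 64T64R panels typically use dual-polarized elements and other layouts, so actual dimensions differ.

```python
C = 299_792_458.0  # speed of light in m/s

def half_wavelength_side(freq_hz: float, elements_per_side: int) -> float:
    """Side length in meters of a square array with lambda/2 element spacing
    (hypothetical 8 x 8 layout in the example below)."""
    wavelength = C / freq_hz
    return elements_per_side * wavelength / 2

for f in (600e6, 2.5e9, 28e9):
    side = half_wavelength_side(f, 8)
    print(f"{f / 1e9:.1f} GHz: {side * 100:.0f} cm per side")
```

Under these assumptions the array is about 48 cm wide at 2.5 GHz, roughly 2 m at 600 MHz, and only about 4 cm at 28 GHz, which is why many antennas are practical at 2.5 GHz but bulky at 600 MHz.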

The initial tests of Sprint’s Massive MIMO systems pretty much confirm the theoretical predictions. In Plano, Texas, a 3.4x gain in downlink sum rates and 8.9x gain in uplink sum rates were observed when comparing 64-antenna and 8-antenna panels. These gains come from a combination of spatial multiplexing and beamforming; this is particularly evident in the uplink where the rates increased faster than the number of antennas. Recent measurements at the Reston Town Center, Virginia, showed similar gains: between 4x and 20x improvements at different locations (see the image below).
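One way to see why the uplink gain can exceed the antenna ratio is a deliberately crude model. It is an assumption for illustration, not how the Sprint results were obtained. In the uplink, every terminal has its own power budget, so adding users does not split the power, and each of K interference-free streams gets an array gain of M. The sum rate is then K log2(1 + M·SNR0), and the gain over a smaller array is the product of a multiplexing factor and a beamforming factor. The user counts and the SNR below are hypothetical.

```python
import math

def uplink_sum_rate(num_users: int, num_antennas: int, snr_per_antenna: float) -> float:
    """Idealized uplink sum spectral efficiency (bit/s/Hz): each user transmits
    at full power and gets an array gain of num_antennas, no interference."""
    return num_users * math.log2(1 + num_antennas * snr_per_antenna)

snr0 = 0.1                              # hypothetical -10 dB per-antenna SNR
small = uplink_sum_rate(1, 8, snr0)     # hypothetical: 8 antennas, 1 user
large = uplink_sum_rate(4, 64, snr0)    # hypothetical: 64 antennas, 4 users
gain = large / small
print(f"sum-rate gain {gain:.1f}x vs antenna ratio {64 / 8:.0f}x")
```

In this toy example, the beamforming factor alone is about 3.4 and the multiplexing factor is 4, so the combined gain exceeds the eightfold increase in antennas.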

Tom Marzetta, the originator of Massive MIMO, attended the keynote and gave me the following comment: “It is gratifying to hear the CTO of Sprint confirm, through actual commercial deployments, what the advocates of Massive MIMO have said for so long.”

Interestingly, Sprint noticed that their customers immediately used more data when Massive MIMO was turned on, and there were more simultaneous users in the network. This illustrates that whenever you create a more capable cellular network, the users will be encouraged to use more data and new use cases will gradually appear. This is why we need to continue looking for ways to improve the spectral efficiency beyond 5G and Massive MIMO.

Quantifying the Benefits of 64T64R Massive MIMO

I came across this study, which seems interesting: data from the Sprint LTE TDD network comparing the side-by-side performance of 64T64R and 8T8R antenna systems.

From the results:

“We observed up to a 3.4x increase in downlink sector throughput and up to an 8.9x increase in the uplink sector throughput versus 8T8R (obviously the gain is substantially higher relative to 2T2R). Results varied based on the test conditions that we identified. Link budget tests revealed close to a triple-digit improvement in uplink data speeds. Preliminary results for the downlink also showed strong gains. Future improvements in 64T64R are forthcoming based on likely vendor product roadmaps.”

Is It Time to Forget About Antenna Selection?

Channel fading has always been a limiting factor in wireless communications, which is why various diversity schemes have been developed to combat fading (and other channel impairments). The basic idea is to obtain many “independent” observations of the channel and exploit that it is unlikely that all of these observations are in a deep fade simultaneously. These observations can be obtained over time, frequency, space, polarization, etc.
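To quantify the diversity idea, assume i.i.d. Rayleigh fading with unit mean power, so each observation's power gain is exponentially distributed and falls more than 20 dB below its mean with probability 1 − e^(−0.01) ≈ 1%. If the observations are independent, the probability that all of them are in such a deep fade is that probability raised to the number of observations. A minimal sketch:

```python
import math

def prob_all_in_deep_fade(num_branches: int, fade_depth_db: float) -> float:
    """P(all branches are fade_depth_db below the mean), for i.i.d. Rayleigh
    fading where each power gain is Exp(1): P(g < x) = 1 - exp(-x)."""
    x = 10 ** (-fade_depth_db / 10)
    p_single = 1 - math.exp(-x)
    return p_single ** num_branches

for n in (1, 2, 4):
    print(n, f"{prob_all_in_deep_fade(n, 20.0):.2e}")
```

Each additional independent branch multiplies the deep-fade probability by roughly 0.01 in this example.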

Antenna selection is the most basic form of space diversity. Suppose a base station (BS) equipped with multiple antennas applies antenna selection. In the uplink, the BS only uses the antenna that currently gives the highest signal-to-interference-and-noise ratio (SINR). In the downlink, the BS only transmits from the antenna that currently has the highest SINR. As the user moves around, the fading changes and we therefore need to reselect which antenna to use.

The term antenna selection diversity can be traced back to the 1980s, but this diversity scheme was already analyzed in the 1950s. One well-cited paper from that time is Linear Diversity Combining Techniques by D. G. Brennan. This paper demonstrates mathematically and numerically that selection diversity is suboptimal, while the scheme called maximum-ratio combining (MRC) always provides a higher SINR. Hence, instead of only selecting one antenna, it is preferable for the BS to coherently combine the signals from/to all the antennas to maximize the SINR. When the MRC scheme is applied in Massive MIMO with a very large number of antennas, we often talk about channel hardening, but this is nothing but an extreme form of space diversity that almost entirely removes the fading effect.
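The comparison between selection and MRC can be checked with a small Monte Carlo sketch. It assumes a single user, noise-limited conditions (so SINR reduces to SNR), perfect channel knowledge, and i.i.d. Rayleigh fading with unit mean power gain per antenna. Under these assumptions, the mean SNR with selection is the per-antenna SNR times the harmonic number H_N, while MRC gives N times the per-antenna SNR. The last loop shows channel hardening: the normalized MRC gain fluctuates less as the number of antennas grows.

```python
import math
import random

def branch_gains(n, rng):
    """|h_k|^2 for n i.i.d. Rayleigh branches with unit mean (exponential)."""
    return [rng.expovariate(1.0) for _ in range(n)]

def selection_snr(gains, snr):
    return snr * max(gains)   # use only the strongest antenna

def mrc_snr(gains, snr):
    return snr * sum(gains)   # coherently combine all antennas

def normalized_gain_std(n, trials, rng):
    """Std of ||h||^2 / n, which shrinks like 1/sqrt(n) (channel hardening)."""
    vals = [sum(branch_gains(n, rng)) / n for _ in range(trials)]
    mean = sum(vals) / trials
    return math.sqrt(sum((v - mean) ** 2 for v in vals) / trials)

rng = random.Random(0)
n, snr, trials = 8, 1.0, 20000
sel_total = mrc_total = 0.0
for _ in range(trials):
    g = branch_gains(n, rng)
    sel_total += selection_snr(g, snr)
    mrc_total += mrc_snr(g, snr)
sel_mean, mrc_mean = sel_total / trials, mrc_total / trials
harmonic = sum(1 / k for k in range(1, n + 1))  # E[max of n Exp(1)] = H_n
print(f"selection {sel_mean:.2f} (theory {harmonic:.2f}), MRC {mrc_mean:.2f} (theory {n})")
for m in (1, 10, 100):
    print(m, round(normalized_gain_std(m, 2000, rng), 3))
```

Since the sum of nonnegative gains is never smaller than their maximum, MRC beats selection in every realization, not only on average.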

Even though the suboptimality of selection diversity has been known for 60 years, the antenna selection concept has continued to be analyzed in the MIMO literature and recently also in the context of Massive MIMO. Many recent papers consider a generalization of the scheme known as antenna subset selection, where a subset of the antennas is selected and MRC is then applied using only those antennas.
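A minimal narrowband sketch of antenna subset selection, under the same hypothetical assumptions as a textbook model (single user, i.i.d. Rayleigh fading, unit per-antenna SNR): the BS keeps the L strongest of N antennas and applies MRC over them. The sketch compares this with an arbitrary subset of the same size and with MRC over all antennas; the values N = 64 and L = 16 are only examples.

```python
import random

def exp_gains(n, rng):
    """|h_k|^2 for n i.i.d. Rayleigh antennas with unit mean."""
    return [rng.expovariate(1.0) for _ in range(n)]

def subset_mrc_snr(gains, num_selected):
    """MRC SNR over the num_selected strongest antennas (unit per-antenna SNR)."""
    return sum(sorted(gains, reverse=True)[:num_selected])

rng = random.Random(5)
n, k, trials = 64, 16, 5000
best = rand = full = 0.0
for _ in range(trials):
    g = exp_gains(n, rng)
    best += subset_mrc_snr(g, k)     # strongest-k subset selection
    rand += sum(rng.sample(g, k))    # arbitrary k antennas
    full += sum(g)                   # MRC over all antennas
best_mean, rand_mean, full_mean = best / trials, rand / trials, full / trials
print(f"random subset {rand_mean:.1f}, best subset {best_mean:.1f}, all {full_mean:.1f}")
```

In this narrowband setting, choosing the strongest subset clearly beats an arbitrary subset, but it still falls short of using all antennas.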

Why use antenna selection?

A common motivation for using antenna selection is that it would be too expensive to equip every antenna with a dedicated transceiver chain in Massive MIMO, so some performance must be sacrificed to achieve a feasible implementation. This motivation is misleading, since Massive MIMO capable base stations have already been developed and commercially deployed. I think a better motivation would be that we can save power by only using a subset of the antennas at a time, particularly when the traffic load is below the maximum system capacity, so that the users’ throughput need not be compromised.

The recent papers [1], [2], [3] on the topic consider narrowband MIMO channels. In contrast, Massive MIMO will in practice be used in wideband systems where the channel fading is different between subcarriers. That means that one antenna will give the highest SINR on one subcarrier, while another antenna will give the highest SINR on another subcarrier. If we apply the antenna selection principle on a per-subcarrier basis in a wideband OFDM system with thousands of subcarriers, we will probably use all the antennas on at least one of the subcarriers. Consequently, we cannot turn off any of the antennas and the power saving benefits are lost.
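The wideband effect can be sketched numerically. All parameters here are hypothetical: 64 antennas, a 16-tap i.i.d. Rayleigh channel per antenna with a uniform power-delay profile, and only 256 subcarriers to keep the runtime short. The sketch counts how many distinct antennas are selected on at least one subcarrier, both for single-antenna selection and for choosing the 16 strongest antennas per subcarrier.

```python
import cmath
import math
import random

def subcarrier_gains(num_antennas, num_taps, num_subcarriers, rng):
    """gains[m][f] = |H_m[f]|^2 for i.i.d. Rayleigh taps with a uniform
    power-delay profile (a hypothetical wideband channel model)."""
    s = math.sqrt(0.5 / num_taps)  # each tap has power 1/num_taps
    twiddle = [[cmath.exp(-2j * math.pi * f * l / num_subcarriers)
                for l in range(num_taps)] for f in range(num_subcarriers)]
    gains = []
    for _ in range(num_antennas):
        taps = [complex(rng.gauss(0, s), rng.gauss(0, s)) for _ in range(num_taps)]
        # DFT of the tap vector gives the frequency response on each subcarrier
        gains.append([abs(sum(t * w for t, w in zip(taps, row))) ** 2
                      for row in twiddle])
    return gains

def antennas_used(gains, num_selected):
    """Set of antennas chosen on at least one subcarrier when the
    num_selected strongest antennas are picked per subcarrier."""
    num_antennas, num_subcarriers = len(gains), len(gains[0])
    used = set()
    for f in range(num_subcarriers):
        ranked = sorted(range(num_antennas), key=lambda m: gains[m][f], reverse=True)
        used.update(ranked[:num_selected])
    return used

rng = random.Random(2)
gains = subcarrier_gains(num_antennas=64, num_taps=16, num_subcarriers=256, rng=rng)
used_single = antennas_used(gains, 1)
used_subset = antennas_used(gains, 16)
print(len(used_single), len(used_subset))
```

With subset selection, nearly every antenna ends up being needed on some subcarrier in this setup, so few antennas could be switched off.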

We can instead apply the antenna selection scheme based on the average received power over all the subcarriers, but most channel models assume that this average power is the same for every base station antenna (this applies to both i.i.d. fading and correlated fading models, such as the one-ring model). That means that if we want to turn off antennas, we can select them randomly since all random selections will be (almost) equally good, and there are no selection diversity gains to be harvested.

This is why we can forget about antenna selection diversity in Massive MIMO!

It is only when the average channel gain is different among the antennas that antenna subset selection diversity might have a role to play. In that case, the antenna selection is governed by variations in the large-scale fading instead of variations in the small-scale fading, as conventionally assumed. This paper takes a step in that direction. I think this is the only case of antenna (subset) selection that might deserve further attention, while in general, it is a concept that can be forgotten.
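The contrast between equal and unequal average gains can be sketched as follows. The model is hypothetical: per-antenna average channel gains with log-normal variations across the array, where a standard deviation of 0 dB reproduces the common equal-average-gain assumption. The sketch compares the average MRC gain from keeping the strongest 16 of 64 antennas with that of a random subset.

```python
import random

def large_scale_gains(num_antennas, shadowing_std_db, rng):
    """Hypothetical per-antenna average gains (linear) with log-normal
    variations; std 0 dB means all antennas have the same average gain."""
    return [10 ** (rng.gauss(0.0, shadowing_std_db) / 10) for _ in range(num_antennas)]

def subset_gain(avg_gains, indices):
    """Average MRC gain when only the antennas in indices are used."""
    return sum(avg_gains[i] for i in indices)

def compare(num_antennas, num_selected, shadowing_std_db, seed=0):
    """Ratio of best-subset gain to random-subset gain."""
    rng = random.Random(seed)
    g = large_scale_gains(num_antennas, shadowing_std_db, rng)
    best = sorted(range(num_antennas), key=lambda i: g[i], reverse=True)[:num_selected]
    rand = rng.sample(range(num_antennas), num_selected)
    return subset_gain(g, best) / subset_gain(g, rand)

print(compare(64, 16, 0.0))   # equal average gains: ratio 1.0
print(compare(64, 16, 6.0))   # unequal average gains: selection pays off
```

With equal average gains, any subset is as good as any other, so there is nothing to select; only when the large-scale fading varies across the antennas does the selection bring a gain.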

Commercial 5G Networks

Some of the first 5G phones were announced at the Mobile World Congress last week. Many of these phones are reportedly based on the Snapdragon 855 Mobile Platform from Qualcomm, which supports 5G with up to 100 MHz bandwidth in sub-6 GHz bands and up to 800 MHz bandwidth in mmWave bands.

Despite all the fuss about mmWave being the key feature of 5G, it appears that the first commercial networks will utilize conventional sub-6 GHz bands; for example, Sprint will launch 5G using the 2.5 GHz band in nine major US cities from May to June 2019. Sprint is using Massive MIMO panels from Ericsson, Nokia, and Samsung. The reason to use the 2.5 GHz band is to achieve reasonably wide network coverage with a limited number of base stations. The new Massive MIMO base stations will initially be used for both 4G and 5G. The following video details Sprint’s preparations for their 5G launch:

Another interesting piece of news from the Mobile World Congress is that 95% of the base stations that Huawei is currently shipping contain Massive MIMO with either 32 or 64 antennas.